
CHAPTER 1

THE ROLE OF MORAL THEORY

Whenever we consider what we ought to do, what we should do, what it is our duty to do, whether we would be a bad person if we did something, whether something is right or wrong, or whether the results of a decision will be fair to all concerned, then we are making an ethical decision. Most of us live good enough lives without ever really thinking about how we treat others. Moral decisions are often not very difficult. Yet some important decisions about how to live require a great deal of thought.

Ethical decision-making signals itself by the presence of code words such as “ought,” “should,” “good,” “duty,” or “fair.” The words “ought,” “should,” and “good,” however, are also used in a non-moral sense. Sometimes what appears to be ethical vocabulary is a prediction, as in, “The meteorologist says that it should rain tomorrow.” Sometimes it is a rule of inference, as in, “From the fact that A is larger than B and B is larger than C, you ought to infer that A is larger than C.” Sometimes it is advice about game strategy, as in, “In chess, it is wrong to get your queen out early,” or “Moving your pawn is a good play.” Sometimes it occurs in other sorts of “strategic” advice, as in, “To arrive by 9 am, you should catch the 7:30 train.” Context determines whether this vocabulary is being used in a moral or a non-moral sense. Each of us must, at times, examine our decisions about how to live, and when we do, it is this ethical vocabulary that we use.

Human beings are a cooperative species. Without their exceptional ability to work together, humans would still be living in small family groups gathering plants and hunting animals. Ethical principles attempt to systematize a set of recommendations about how moral agents should treat one another and the world around them so that they can successfully cooperate. In this respect, moral systems resemble the legal systems that human beings have created to enable social cooperation. One major difference is that legal systems depend on government power (courts, judges, police, jails, etc.) to make them work, whereas ethical systems depend on people, first, reasoning their way to fair terms of cooperation and, second, having the psychological make-up required to stick to those terms.

Each of us has an intuitive understanding of the psychological force, or moral suasion, of an ethical judgment. We understand what someone is saying when she tells us that we ought not to do such-and-such. Ethical judgments have a normative force that motivates us to obey the rules of social cooperation. We learned to understand this action-guiding force of ethical judgments when we were children. In fact, we have understood the force of ethical judgments a lot longer than we have understood the judgments of mathematics, science, or economics. Whereas scientific statements are purely descriptive, ethical judgments contain both a descriptive and a motivating component. For example, advice against non-cooperative behavior, such as “Stealing from Tommy is wrong,” implicitly contains both a description of an action about to be performed on Tommy, and either some sort of imperative, “Don’t steal from Tommy!” or an expression of emotion, “How terrible of you to steal from Tommy!”

Ethical judgments thus have a dual aspect. On the one hand, they involve the head and its beliefs and reasons. On the other hand, they involve the heart and its feelings and emotions. It turns out that neither head nor heart, reason nor emotion, is enough, by itself, to bring about optimal social cooperation. To properly examine our ethical lives, we must examine both our rational thinking and our emotional responses.

1.1 COOPERATION, REASON, AND EMOTION

For morality to work in enabling social cooperation, people must have both reasoning abilities and the right sort of emotional capacities. Two types of games illustrate this. These games abstract from everyday life to highlight important structural features of ethical situations. The inadequacy of reasoning alone to produce cooperative moral behavior is shown by a well-known bit of game theory, the Prisoner’s Dilemma Game. It shows that agents who can reason well, but who lack prosocial emotions such as empathy, compassion, guilt, shame, admiration, or trustworthiness, will face a cooperation dilemma. The reasoning is tricky, but an understanding of this game should be in everyone’s intellectual toolkit. That human beings do have the right sort of prosocial emotions to enable fair cooperation is shown by another game, the Ultimatum Game.

First let us examine how good reasoning alone cannot always produce mutually advantageous cooperation. Imagine two hunters living in a state of nature back in a time before any moral rules had become established. The two hunters must decide whether to trade or fight over their catch. They are from different clans, and each has no sympathy, compassion, or fellow feeling for members of another clan. Though they treat their own families well, they see members of other clans as being members of an alien species. They have no sense of fair treatment for others outside of their own clan. They are neither trusting nor trustworthy. They are, however, totally rational.

In some situations, they will cooperate well enough. Suppose that Gog has caught a live rabbit. Gog does not like rabbit, but he does like partridge. Gog would get zero satisfaction from eating a rabbit, but he would get 2 units of satisfaction from eating a partridge. Conversely, Magog has caught a live partridge, but he prefers rabbit. Magog would get zero satisfaction from eating a partridge, but he would get 2 units of satisfaction from eating a rabbit. In this situation, both hunters can see that their rational course of action is to cooperate by trading their respective catches. If Gog, for example, simply stole Magog’s animal, he would not be any better off food-wise than if he traded, because the rabbit he kept would be worth nothing to him. Stealing may also result in a fight, or at least in aggravation. In a positive-sum, win-win game such as this, rationality is enough to create cooperation for mutual advantage.

Unfortunately, there are other situations where good reasoning, by itself, is not enough to produce cooperation for mutual advantage. For example, suppose the two hunters have different preferences. As before, Gog has caught a rabbit and Magog a partridge. Gog would get 1 unit of satisfaction from eating a rabbit, 2 units from eating a partridge, and 3 units from eating both. Magog would get 1 unit of satisfaction from a partridge, 2 from a rabbit, or 3 from having both. Each hunter can either cooperate by trading, or refuse to cooperate and instead hit the other man on the head and steal his animal. If both of them try this last strategy, nobody gains anything; each goes home with his original, less satisfying catch. In a situation such as this one, a peaceful trade would benefit both hunters. However, as we shall see, their reasoning processes will rationally lead to neither hunter’s gaining anything.

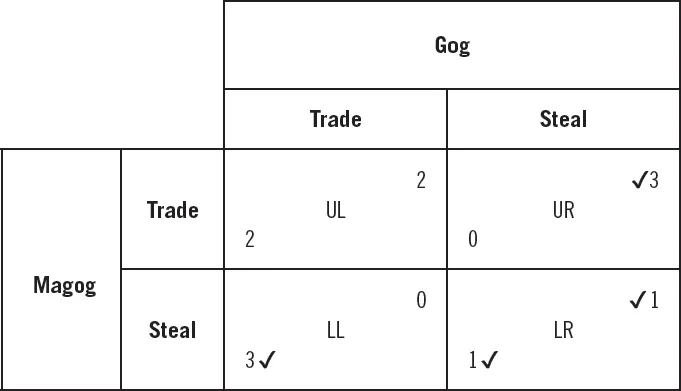

To see better what is likely to happen, we can represent their strategic choices in a payoff matrix, as shown in Figure 1.1. A payoff matrix for a game is a table that shows each player’s payoff for every possible combination of strategies. In this case, the game has two players, Magog and Gog, who each have a choice of two strategies: either to cooperate and try to trade with the other player, or to defect by hitting the other player on the head and stealing his animal. We represent Magog’s two strategies as the two rows of the table, and Gog’s two strategies as the two columns of the table. This gives four possible outcomes, represented by the four cells of the table, which we label UL, UR, LL, and LR (upper left, upper right, lower left, and lower right). Each cell shows the payoffs to Magog and Gog of a specific combination of strategies. Because Magog is shown on the left side of the payoff matrix, his payoffs appear in the lower left of each cell; because Gog is shown along the top, his payoffs appear in the upper right of each cell. For example, if Magog offers to trade but Gog hits him on the head and steals his animal, then their payoffs are in the upper right cell (UR): Magog ends up with 0 units of satisfaction and Gog gets 3. The payoffs in the matrix correspond to those given in the verbal description of the situation.
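The payoffs in Figure 1.1 can be derived mechanically from the hunters' preferences. The following is a minimal Python sketch under stated assumptions. As in the earlier example, Gog starts with the rabbit and Magog with the partridge. The move labels `"trade"` and `"steal"` and the names `GOG_PREFS`, `MAGOG_PREFS`, `holdings`, and `payoff_matrix` are hypothetical and were chosen only for illustration.

```python
# Satisfaction each hunter gets from the animals he ends up holding (from the text).
GOG_PREFS = {frozenset(): 0, frozenset({"rabbit"}): 1,
             frozenset({"partridge"}): 2, frozenset({"rabbit", "partridge"}): 3}
MAGOG_PREFS = {frozenset(): 0, frozenset({"partridge"}): 1,
               frozenset({"rabbit"}): 2, frozenset({"rabbit", "partridge"}): 3}

STRATEGIES = ("trade", "steal")  # hypothetical labels for cooperate / defect


def holdings(magog_move, gog_move):
    """Return (Magog's animals, Gog's animals) after both hunters move.

    Assumes Magog starts with a partridge and Gog with a rabbit."""
    magog, gog = {"partridge"}, {"rabbit"}
    if magog_move == "trade" and gog_move == "trade":
        magog, gog = {"rabbit"}, {"partridge"}       # peaceful swap
    elif magog_move == "trade" and gog_move == "steal":
        magog, gog = set(), {"rabbit", "partridge"}  # Gog takes Magog's animal
    elif magog_move == "steal" and gog_move == "trade":
        magog, gog = {"rabbit", "partridge"}, set()  # Magog takes Gog's animal
    # If both try to steal, nothing changes hands.
    return frozenset(magog), frozenset(gog)


def payoff_matrix():
    """Map (Magog's move, Gog's move) -> (Magog's payoff, Gog's payoff)."""
    matrix = {}
    for m in STRATEGIES:      # rows: Magog
        for g in STRATEGIES:  # columns: Gog
            magog, gog = holdings(m, g)
            matrix[(m, g)] = (MAGOG_PREFS[magog], GOG_PREFS[gog])
    return matrix


for cell, payoffs in payoff_matrix().items():
    print(cell, payoffs)
```

Running it lists the four cells as (2, 2) for UL, (0, 3) for UR, (3, 0) for LL, and (1, 1) for LR, which matches the payoffs described in the text.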

Let us see how this works. Suppose Magog shows up holding his animal and offers to trade. Then the first row of the table is relevant. If Gog also shows up willing to trade, then they trade, and each ends up with 2 units of satisfaction (Cell UL). But if Gog shows up intending to steal, he takes Magog’s animal and now has 3 units, leaving Magog with 0 (Cell UR).

Suppose instead that Magog shows up intending to steal. Now the second row of the table is relevant. If Gog shows up intending to trade, Magog takes his animal and now has 3 units, leaving Gog with 0 (Cell LL). Finally, if Gog also shows up intending to steal, nothing changes hands, and each goes home with his original 1-satisfaction animal (Cell LR). Now consider Magog’s reasoning process.

1. First he thinks to himself, “Suppose Gog’s going to show up intending to trade. If I came intending to trade, we’d trade, and I’d wind up (UL) with 2 units; but if I hit-and-stole, I’d get his animal without losing my own; so I’d wind up (LL) with 3 units. So, if Gog’s going to show up to trade, then I’m better off hitting and stealing than trying to trade.”

2. Then he thinks to himself, “But suppose Gog’s going to show up intending to hit-and-steal. If I came intending to trade, I’d lose my animal and wind up (UR) with 0 units, but if I came also intending to hit-and-steal, nothing would change hands, and I’d wind up (LR) with 1. Not good, but better than nothing. So, if Gog’s going to show up to hit-and-steal, then I’m better off hitting and stealing than trying to trade.”

3. Finally, he thinks to himself, “Gee, no matter what Gog does, I’m better off hitting and stealing.”

As far as Magog is concerned, LL is better than UL, so we mark his payoff in LL with a checkmark. Similarly, for Magog, LR is better than UR, so we mark his payoff in LR with a checkmark. Magog will conclude that, no matter what Gog does, it is rational for him to try to steal. Magog has a dominant strategy. In game theory, a dominant strategy is a strategy that yields a higher payoff than the alternatives regardless of the strategy chosen by the other player. Figure 1.1 shows that Magog’s payoff for stealing has a checkmark beside it whether Gog tries to steal or to trade. Stealing is Magog’s dominant strategy.
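Magog's three-step reasoning amounts to comparing his payoffs within each column of the matrix, and Gog's amounts to the same comparison within each row. Below is a minimal Python sketch. It assumes the payoffs described in the text and reads "higher payoff" as strictly higher. All names are hypothetical.

```python
# Payoffs from Figure 1.1: (Magog's move, Gog's move) -> (Magog, Gog).
PAYOFFS = {
    ("trade", "trade"): (2, 2),  # UL
    ("trade", "steal"): (0, 3),  # UR
    ("steal", "trade"): (3, 0),  # LL
    ("steal", "steal"): (1, 1),  # LR
}
STRATEGIES = ("trade", "steal")


def payoff(player, own, other):
    """Payoff to `player` ("Magog" or "Gog") when he plays `own` and the other hunter plays `other`."""
    if player == "Magog":
        return PAYOFFS[(own, other)][0]
    return PAYOFFS[(other, own)][1]


def dominant_strategy(player):
    """Return the strategy that pays strictly more than every alternative
    against every move of the other player, or None if there is none."""
    for s in STRATEGIES:
        if all(payoff(player, s, other) > payoff(player, alt, other)
               for other in STRATEGIES
               for alt in STRATEGIES if alt != s):
            return s
    return None


print(dominant_strategy("Magog"))  # steal
print(dominant_strategy("Gog"))    # steal
```

Each `all(...)` test corresponds to the checkmarks in the figure. For Magog, stealing beats trading both when Gog trades (3 > 2) and when Gog steals (1 > 0).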

Now let us look at Gog’s reasoning. Figure 1.1 shows that, no matter which strategy Magog plays, Gog’s payoff (also checked) will be higher if he, Gog, attempts to hit and steal. The situation is symmetric, and the reasoning is the same for both Gog and Magog. Therefore, Gog too has a dominant strategy, with both payoffs checked, which is to attempt to steal. It is rational for Gog to attempt to steal as well.

Yet see what happens. If Magog and Gog both follow their totally rational dominant strategy, then they will end up in cell LR, where each will receive a payoff of 1 unit of satisfaction. Their dilemma is that this outcome is not the one that would maximize their rational self-interest. They would both be better off with the outcome shown in cell UL, where they each get 2 units of satisfaction. They can obtain this outcome if they cooperate and both offer to trade, but, by reason alone, they cannot reach this better outcome. Because of the structure of the situation, individually rational decisions will lead to an outcome that is irrational for both parties.

A game is a Prisoner’s Dilemma Game when both players have dominant strategies that, when played, result in an outcome in which each player’s payoff is smaller than it would have been if both had played their other strategy. The game gets its name from a strategy sometimes employed by the police to get two criminal accomplices to inform on one another. Even though Magog and Gog are highly intelligent, they do not have the emotional repertoire needed to trust one another. Because of this, they will be unable to achieve the benefits of cooperation. This situation is a paradigm example of a dilemma of cooperation.
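The definition above, together with the comparison of cells LR and UL, can be turned into a check on any two-player, two-strategy game. The sketch below is an illustration, not a standard library routine. It assumes payoffs are stored as (row player, column player) pairs. It also encodes the earlier rabbit-for-partridge game, reading the text's remark that a thief "would not be any better off" than a trader as a payoff of 2 for stealing. The function and variable names are hypothetical.

```python
# Payoffs keyed by (row move, column move) -> (row payoff, column payoff).
# Rows are Magog, columns are Gog.
HUNTERS = {  # Figure 1.1
    ("trade", "trade"): (2, 2), ("trade", "steal"): (0, 3),
    ("steal", "trade"): (3, 0), ("steal", "steal"): (1, 1),
}
SWAP = {  # earlier game: each values only the other's animal, worth 2
    ("trade", "trade"): (2, 2), ("trade", "steal"): (0, 2),
    ("steal", "trade"): (2, 0), ("steal", "steal"): (0, 0),
}
STRATEGIES = ("trade", "steal")


def other(move):
    """The player's alternative strategy in a two-strategy game."""
    return "steal" if move == "trade" else "trade"


def dominant(payoffs, player):
    """Strictly dominant strategy of player 0 (rows) or 1 (columns), else None."""
    def pay(own, opp):
        cell = (own, opp) if player == 0 else (opp, own)
        return payoffs[cell][player]
    for s in STRATEGIES:
        if all(pay(s, opp) > pay(other(s), opp) for opp in STRATEGIES):
            return s
    return None


def is_prisoners_dilemma(payoffs):
    """Both players have dominant strategies, and each player would get
    strictly more if both had played their other strategy instead."""
    d0, d1 = dominant(payoffs, 0), dominant(payoffs, 1)
    if d0 is None or d1 is None:
        return False
    played = payoffs[(d0, d1)]
    switched = payoffs[(other(d0), other(d1))]
    return all(s > p for s, p in zip(switched, played))


print(is_prisoners_dilemma(HUNTERS))  # True
print(is_prisoners_dilemma(SWAP))     # False
```

For the hunters' game, both dominant strategies are "steal", which gives LR at (1, 1). Switching both to "trade" gives UL at (2, 2), so the check succeeds. In the earlier game, stealing never pays strictly more than trading. Neither hunter therefore has a dominant strategy, and there is no dilemma.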

Dilemmas of cooperation, such as the Prisoner’s Dilemma, show that everyone will be better off if everyone cooperates. They do not show any specific self-interested person that he must cooperate. Instead, they show that people must have cooperative attitudes towards one another if social interactions are to work for everyone. The structure of these cooperative attitudes is the subject matter of ethics. Not every cooperative attitude is fair. For example, if Gog had the attitude of always submitting to Magog’s authority, then Magog would do well, but Gog would suffer, and this would be unjust. There could not be a society that worked for everyone if people were incapable of cooperative prosocial attitudes.

In fact, people are capable of cooperative, prosocial attitudes. Evidence for this claim comes from another economic game, the Ultimatum Game. The rules of the Ultimatum Game are as follows: The game has two players and a referee. First, the Referee passes some amount of money, for example $10, to Player 1. Player 1 must then issue an ultimatum to Player 2 by offering Player 2 some amount between $1 and $10. If Player 2 accepts the ultimatum, then Player 2 keeps the offer and Player 1 keeps the balance. However, if Player 2 rejects the ultimatum, then the Referee takes back all the money and both players get nothing. For example, Player 1 might offer $3 to Player 2. If Player 2 accepts the ultimatum, then Player 2 gets to keep $3 and Player 1 gets to keep $7. If Player 2 rejects the offer, then neither player gets anything. The game is played just this one time.
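The rules of the Ultimatum Game reduce to a small payoff function. Below is a minimal sketch that assumes whole-dollar offers and the $10 pot from the example. The name `ultimatum_payoffs` is hypothetical.

```python
def ultimatum_payoffs(offer, accepted, pot=10):
    """Return (Player 1's payoff, Player 2's payoff) for the single round.

    `offer` is what Player 1 offers Player 2, in whole dollars from 1 to `pot`."""
    if not 1 <= offer <= pot:
        raise ValueError("offer must be between $1 and the pot")
    if accepted:
        return pot - offer, offer  # Player 2 keeps the offer, Player 1 the balance
    return 0, 0                    # the Referee takes back all the money


print(ultimatum_payoffs(3, True))   # (7, 3)
print(ultimatum_payoffs(3, False))  # (0, 0)
```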

The Ultimatum Game appears trivial, but the results are enlightening. Most people, if they imagine themselves in the role of Player 1, would probably offer $5, a 50/50 split. Most people, if they imagine themselves in the role of Player 2, would probably reject anything but a roughly 50/50 offer, because they would consider a lopsided offer to be unfair. Players mostly offer an approximately even split, and players mostly reject offers below an approximately even split. Perhaps ultimatum-issuing players feel ashamed to offer less than what seems fair, or feel compassion for the other player and want to share with them. Perhaps ultimatum-receiving players feel indignant at unfair offers and angry enough to punish the other player, even at some cost to themselves, and perhaps ultimatum-issuing players know this. It seems that because human beings experience such prosocial emotions, it is possible for them to act ethically.

Notice that if Player 2 is purely rational, she will accept any offer, because even $1 is better than $0. It will cost Player 2 money to punish Player 1 for his unfairness, and according to reasoned self-interest, it would be irrational for Player 2 to take on the cost of punishing Player 1. If she does take on the cost of punishing Player 1, then it must be that she is motivated by the prosocial emotions of indignation and resentment that underlie her sense of injustice.
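The argument that a purely self-interested Player 2 accepts any offer, and what that implies for Player 1, can be made concrete. In this hedged sketch, Player 1 picks the offer that maximizes what he keeps, given how Player 2 responds. The second responder, who rejects anything under $5, is an illustrative assumption that models the indignation described above. It is not experimental data. All names are hypothetical.

```python
POT = 10
OFFERS = range(1, POT + 1)  # whole-dollar offers from $1 to $10


def self_interested_accepts(offer):
    # Any positive amount beats the $0 that rejection guarantees.
    return offer > 0


def fairness_minded_accepts(offer, threshold=5):
    # Hypothetical responder who rejects offers below `threshold`
    # (the threshold is an illustrative assumption, not data).
    return offer >= threshold


def best_offer(accepts):
    """Offer that maximizes Player 1's take-home amount against a given responder."""
    return max(OFFERS, key=lambda o: (POT - o) if accepts(o) else 0)


print(best_offer(self_interested_accepts))  # 1
print(best_offer(fairness_minded_accepts))  # 5
```

Against the self-interested responder, the best offer is $1. Against the fairness-minded responder, it rises to $5. This is one way of reading the suggestion that ultimatum-issuing players anticipate the other player's indignation.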

Ethical systems are stabilized by the prosocial emotions of the agent, emotions such as compassion, sympathy, shame, guilt, indignation, or disgust. When these emotional responses are internalized as character traits, or stable dispositions to react in certain ways, players can count on one another to behave cooperatively. (It may seem strange to say that an emotion like indignation at uncooperative behavior is a prosocial emotion, since indignation can sometimes lead to antisocial behavior. But its role in implementing an ethical system underlying social cooperation justifies this usage.) The prosocial emotions, however, are not all that there is to morality. In the Ultimatum Game, it seems obvious that an equal division is a fair division. But in more complicated situations, such as deciding on the division of income in a modern post-industrial economy, what is fair is much less obvious. People do feel indignation at unjust situations, but figuring out which situations are unjust is a challenging task.

Ethical systems can help make social cooperation work. Ethics is only possible because the psychological make-up of human beings enables them to internalize and obey normative rules. However, the prosocial emotions of human beings do not, by themselves, determine the content of the moral rules that agents use in a scheme of cooperation. After all, people can feel indignation at all sorts of situations, not all of which deserve moral condemnation. Morality thus requires the use of ethical reasoning to determine which actions of others m...