eBook - ePub

Software Source Code

Statistical Modeling

- 358 pages

- English

About This Book

This book focuses on using statistical modelling of software source code to resolve issues associated with software development processes. Writing and maintaining software source code is a costly business, and software developers constantly need to rely on large existing code bases. Statistical modelling identifies the patterns in software artifacts and uses them to predict possible issues.

Software Source Code is written by Raghavendra Rao Althar, Debabrata Samanta, Debanjan Konar, and Siddhartha Bhattacharyya, and is listed under Computer Science & Software Development.

Chapter 1 Software development processes evolution

1.1 Introduction

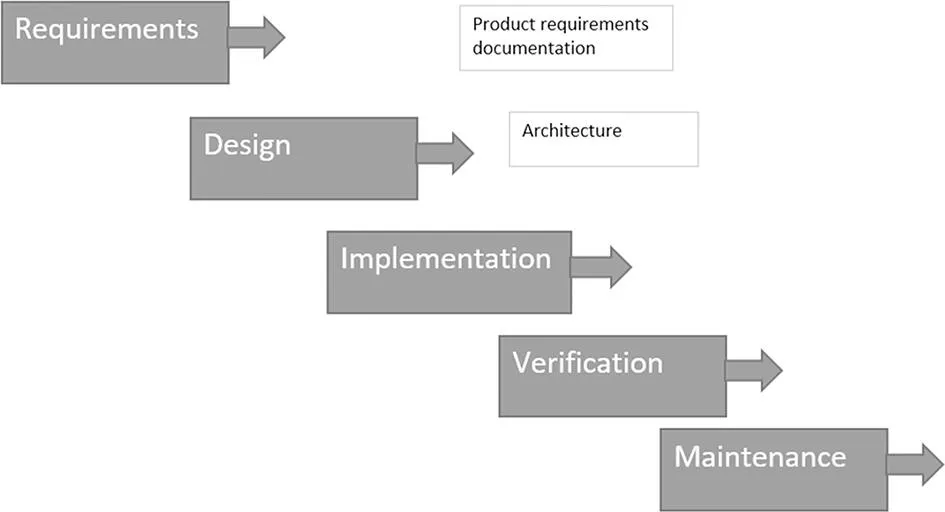

Advanced extreme programming concepts are an area of exploration that grew out of the need for advances in software development. This need calls on the community to look for advanced approaches built on traditional methods. Software systems development began in the 1940s, and the problems that software caused created a need to organize the software development processes. The field is dynamic and keeps pace with the latest technology advancements. With efficiency as the focus, multiple models of software development have evolved. The waterfall and Agile models of development are the best known, and the Agile model has been attracting attention recently. Figure 1.1 depicts the waterfall model of software development.

Figure 1.1: Waterfall model of software development.

Around 1910, Henry Gantt and Frederick Taylor put together a methodology for effective project management, particularly for handling repetitive tasks. It was a game-changer that helped the industry enhance its productivity. Working as a team was another critical factor unearthed by the industry, and it is also a backbone of the current agile methodology. The waterfall model helped to bring structure into software development in the late 1980s. Design, development, unit testing, and integration testing were the critical phases involved. Development operations, also called DevOps, was a later advancement that focused on integrating the software modules into production.

The waterfall model follows a sequential approach to development, with the customer requirements flowing down from a high level to a lower level. Each stage depends on the output of the stage before it. The model helps by providing a structured approach, straightforward communication with customers, and clarity in delivering the project. But its structure is less flexible, and any issues that arise are costly to fix. The approach works well only when there are no unexpected drastic changes in requirements.

A V-shaped model develops in the spirit of the waterfall model, with the flow bending upward after the coding phase. The early testing involved makes it a more reliable approach. The V-shaped model is useful because every stage involves deliverables, and its success rate is high compared to the waterfall model. But the V-model does not readily accommodate changes in scope, and when the scope does change, the change is expensive. Also, the solutions are not exact. The model works reasonably well only when the requirements are clearly defined and the technology involved is well understood.

The agile model works through collaboration among all the parties involved. Figure 1.2 shows the Agile model of software development. Here the expected product is delivered to the customer on an incremental basis, which keeps the risk involved minimal. The focus is to create a cumulative effect that customers can visualize, so they can confirm whether the development team is in line with their requirements. Throughout the exploration of best methodologies, the focus has been to build efficiency and effectiveness into the software development process. From this point of view, the combination of Agile and DevOps has been the most preferred model. Scrum, Crystal, and XP (extreme programming) are some versions of the Agile methodology. With DevOps focused on enabling faster time to market, Agile and DevOps have become the preferred approach to software development (Wadic 2020). Figure 1.3 depicts the V-shaped model of software development.

Figure 1.2: Agile model of software development.

Figure 1.3: V-shaped model.

1.2 Data science evolution

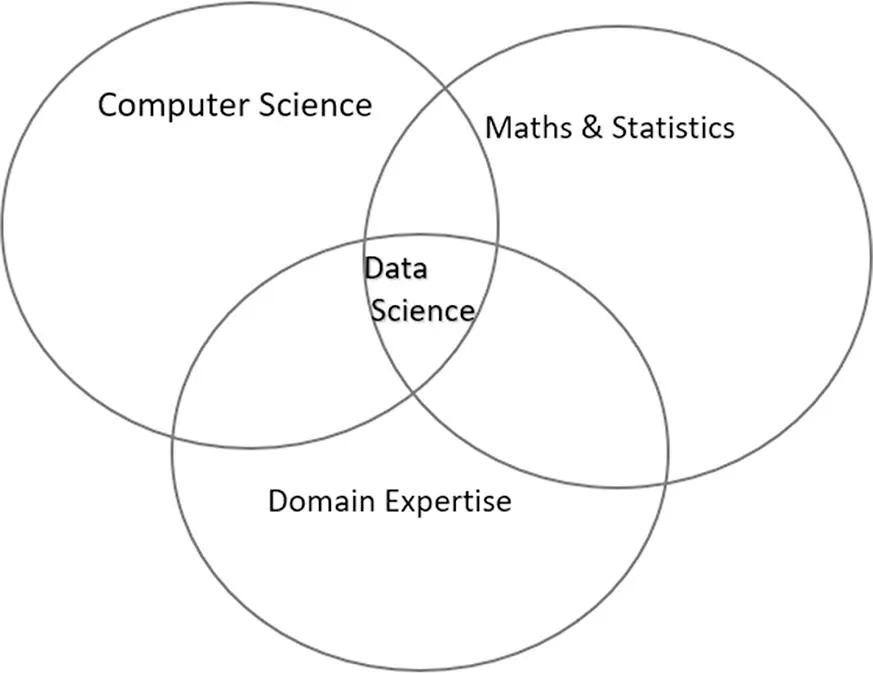

As it is perceived today, data science is mostly influenced by what has evolved after the year 2000. Its original form dates to around 800 AD, when the Iraqi mathematician Al-Kindi used cryptanalysis to break coded messages. His was one of the earliest frequency analysis mechanisms, and it inspired many other lines of thought in the area. In the twentieth century, statistics became an important tool for quantifying many aspects of society. Though data science started in statistics, it has evolved to include artificial intelligence, machine learning, and the Internet of Things (IoT). With a better understanding of customer data and extensive collection of it, revolutionary ways to derive useful information from the data have emerged. As industries started demonstrating the value created with data, applications extended to the medical and social science fields. Data science practitioners have the upper hand over traditional statisticians in their knowledge of software architecture and exposure to various programming languages. A data scientist is involved in all the activities of defining the problem, identifying data sources, and setting up the data collection and cleaning mechanisms. Deriving the deeper insights hidden in the data is the critical area of focus in data science. Operations research also led the way toward optimizing problems expressed as mathematical equations. The arrival of the digital age, with its supercomputers, paved the way for storing and processing information on a large scale. The intelligence associated with computing and mathematical models led the way for advancements in artificial intelligence. The ability to understand the underlying patterns in data and use them as a base for prediction kept improving as more data became available.

There was always a disconnect between data analysis and software programs, which was bridged by visual dashboard solutions that made the results simple for the core data science team to consume. Business intelligence provided business insight from data without worrying much about how the data was transformed into wisdom. Big data was another central area of rapid growth, handling large and complex data sets that require extensive computation to reveal the underlying data patterns. Data science was mostly about managing this extensive knowledge hidden in complex forms of data. Bringing together the best of computer science, mathematics, and statistics is the primary focus of data science. The massive data flowing into the field helps decision-makers focus on the critical part of the data landscape. Figure 1.4 depicts the disciplines of data science.

Figure 1.4: Disciplines of data science.
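The frequency analysis attributed to Al-Kindi earlier in this section can be illustrated with a small sketch. It is not taken from the book: it assumes a simple Caesar (fixed shift) cipher over English text and assumes that the most frequent plaintext letter is "e". The function names are hypothetical and chosen only for this illustration.

```python
from collections import Counter


def letter_frequencies(text: str) -> list[tuple[str, int]]:
    """Count ASCII letters case-insensitively, most common first."""
    letters = [ch.lower() for ch in text if ch.isascii() and ch.isalpha()]
    return Counter(letters).most_common()


def guess_caesar_shift(ciphertext: str, assumed_top_letter: str = "e") -> int:
    """Guess the shift, assuming the most frequent cipher letter
    stands for assumed_top_letter (an assumption, not a guarantee)."""
    frequencies = letter_frequencies(ciphertext)
    if not frequencies:
        raise ValueError("ciphertext contains no letters to analyse")
    top_cipher_letter = frequencies[0][0]
    return (ord(top_cipher_letter) - ord(assumed_top_letter)) % 26


def caesar_decrypt(ciphertext: str, shift: int) -> str:
    """Shift every ASCII letter back by `shift`; leave other characters alone."""
    result = []
    for ch in ciphertext:
        if ch.isascii() and ch.isalpha():
            base = ord("a") if ch.islower() else ord("A")
            result.append(chr((ord(ch) - base - shift) % 26 + base))
        else:
            result.append(ch)
    return "".join(result)
```

Calling `guess_caesar_shift` on a ciphertext and passing the result to `caesar_decrypt` recovers the message only when the letter statistics of the text match the assumption; short or unusual messages can easily mislead it.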

1.3 Areas and applications of data science

Data engineering and data warehousing are two of the areas. Data engineering transforms data into a useful format that supports critical analysis; the objective is to make data usable by analysts for the analytics they require. Data mining provides an experimental basis for data analysis to deliver the required insights, and it helps the expert formulate the statistical problem from the business concerns. Cloud computing is another area, providing a platform across the enterprise for large-scale solutions and taking care of secure connections with business systems. Database management also has an important place, given the extensive data that is part of the ecosystem. Business intelligence covers improving data accuracy, dashboards for stakeholders, reporting, and other related activities. Data visualization is another area of focus that strives to convey critical messages in visuals. It is closely associated with business intelligence in providing the required dashboards based on business needs. The data science life cycle can also be viewed as a data discovery phase, a data preparation phase, mathematical modeling, deriving actionable outcomes, and the communication associated with the process. Data science areas also span machine learning, cluster analysis, deep learning, deep active learning, and cognitive computing.

Drug discovery, with its complex processes, is assisted by mathematical models that can work out how drugs behave based on biological aspects, in effect simulating the experiments conducted in the lab. Virtual assistants are at the peak of business support, and rapid progress is being made to improve the experience in that area. Advances in mobile computing, backed by data, help take knowledge from data to a large population. Extensive advancements in search engines are also worth noting. Digital marketing opened up large-scale optimizations such as targeted advertising, which cuts advertising expense and reduces the large-scale dissatisfaction that can creep in with mass promotions. All this is made possible by tracking users’ data based on their online behavior. Recommender systems play a prominent part in business by using customer data effectively and recommending the most useful items back to customers to give them the best experience. Advancements in image recognition are seen in social collaboration platforms that focus on building social networks. They further lead to improvements in object detection, which has a significant role in various use cases. Speech recognition capabilities are seen in voice support products such as Google Voice, enhancing the customer experience. This capability includes converting voice to text so that the customer does not have to type. Data science capabilities are leveraged by the airline industry, which is struggling to cope with competition. Airlines must balance spiking fuel prices against the significant discounts they provide to customers. Analysis of flight delays, decisions on the procurement of air tickets, decisions on direct versus multipoint flights, and the management of other aspects of customer experience with data analytics have enhanced the industry’s performance.

Machine learning has made a significant mark in the gaming industry, with the game’s complexity adapting to players’ progression through earlier levels. Developments also include the computer playing against human players, analyzing previous moves, and competing.
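The recommender systems mentioned above can take many forms, and the book preview does not specify one. As a minimal sketch, assuming a simple item co-occurrence approach (items bought together are recommended together), the idea can be expressed as follows. The function names and the basket data format are hypothetical.

```python
from collections import Counter
from itertools import combinations


def build_cooccurrence(baskets):
    """Count how often each pair of items appears in the same basket."""
    cooccurrence = {}
    for basket in baskets:
        # Deduplicate and sort so each unordered pair is visited once.
        for a, b in combinations(sorted(set(basket)), 2):
            cooccurrence.setdefault(a, Counter())[b] += 1
            cooccurrence.setdefault(b, Counter())[a] += 1
    return cooccurrence


def recommend(cooccurrence, owned, top_n=3):
    """Rank items the customer does not own by how often they
    co-occur with items the customer already owns."""
    owned = set(owned)
    scores = Counter()
    for item in owned:
        for other, count in cooccurrence.get(item, {}).items():
            if other not in owned:
                scores[other] += count
    # Highest score first; ties are broken alphabetically for stable output.
    ranked = sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))
    return [item for item, _ in ranked[:top_n]]
```

A production recommender would normally weight counts by item popularity and handle far larger data, so this sketch only shows the basic flow from customer history to ranked suggestions.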

Augmented reality seems to be at an exciting point. Data science works with augmented reality by having algorithms manage computing knowledge and data for a richer viewing experience. Pokémon Go is an excellent example of advancements in this area. But progress will pick up only once the economic viability of augmented reality is worked out.

1.4 Focus areas in software development

The software development industry is concerned with the loss of efficiency caused by unpredictability throughout the value stream of software development. People involved in the processes are not confident about their deliverables at any phase of the life cycle. A lot of effort goes into unearthing issues in the code at a much earlier stage of the life cycle to optimize the effort and cost of fixing them. There is also a lack of focus on an initial analysis of the requirements based on the business needs. Both domain and technical analysis should be mature enough to demonstrate the end-to-end knowledge that customers need. Traceability of the requirements across the life cycle needs to be established scientifically on this basis. The big picture is missing for the development community, as they do not understand customers’ real needs. The focus is more on the structure of code, and the holistic design view is missing. There is a gap between technical details and users’ needs in the real world. This concern calls for understanding the business domain. Management teams are contemplating the need for a system that lets development communities experience the customer’s world. Though business system analysts were intended to bridge this gap, the objective has not been met for lack of a robust system that can facilitate this maturity of business knowledge. It points to the need for a meaningful design that makes all the value stream elements of software development traceable end to end, and for intelligent platforms to integrate this knowledge across them.
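End-to-end traceability, as called for above, can be pictured as a record that links each requirement to the code and tests that realize it. The sketch below is an illustration only, not the book’s method: the `Requirement` class, its fields, and the `find_trace_gaps` helper are hypothetical names, and real traceability tooling would draw these links from issue trackers and version control.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """A requirement with links to the artifacts that implement and verify it."""
    req_id: str
    description: str
    code_units: set[str] = field(default_factory=set)
    tests: set[str] = field(default_factory=set)


def find_trace_gaps(requirements):
    """Map each requirement id to the kinds of link it is missing."""
    gaps = {}
    for requirement in requirements:
        missing = []
        if not requirement.code_units:
            missing.append("code")
        if not requirement.tests:
            missing.append("test")
        if missing:
            gaps[requirement.req_id] = missing
    return gaps
```

Running `find_trace_gaps` over a requirement list gives a quick view of which requirements have no implementing code or no verifying test, which is the kind of gap the paragraph above describes.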

The importance of unit testing in building a robust construction phase of the software is not recognized by all parties concerned. In the Continuous Integration and Continuous Deployment (CICD) model of software development and integration, quality assurance activities lose focus. Bugs are treated as new requirements, so team members do not recognize the low quality. On the other hand, the CICD model’s maturity is not sufficient to ensure good quality for end users, which points back to people still transitioning from traditional software development methods to the CICD model. There is also a need to understand the product and the software development landscape well. Though the importance of “First Time Right” as a focus is recognized, a real, practical sense of first time right is lacking in the software development value stream, resulting in suboptimal deliverables in all phases. The lack of focus in the initial analysis also extends to nonfunctional requirements and leads to security vulnerabilities that cost the organization its reputation and revenue.

Table of contents

- Title Page

- Copyright

- Contents

- Chapter 1 Software development processes evolution

- Chapter 2 A probabilistic model for fault prediction across functional and security aspects

- Chapter 3 Establishing traceability between software development domain artifacts

- Chapter 4 Auto code completion facilitation by structured prediction-based auto-completion model

- Chapter 5 Transfer learning and one-shot learning to address software deployment issues

- Chapter 6 Enabling intelligent IDEs with probabilistic models

- Chapter 7 Natural language processing–based deep learning in the context of statistical modeling for software source code

- Chapter 8 Impact of machine learning in cognitive computing

- Chapter 9 Predicting rainfall with a regression model

- Chapter 10 Understanding programming language structure at its full scale

- Index