- 288 pages

- English


About this book

At the beginning of the twentieth century, H. G. Wells predicted that statistical thinking would be as necessary for citizenship in a technological world as the ability to read and write. But in the twenty-first century, we are often overwhelmed by a baffling array of percentages and probabilities as we try to navigate in a world dominated by statistics.

Cognitive scientist Gerd Gigerenzer says that because we haven't learned statistical thinking, we don't understand risk and uncertainty. To assess risks, from the risk of an automobile accident to the certainty or uncertainty of common medical screening tests, we need a basic understanding of statistics.

Astonishingly, doctors and lawyers understand risk no better than anyone else. Gigerenzer reports a study in which doctors were told the results of breast cancer screenings and then asked to explain to a woman who had received a positive screening result how likely it was that she actually had breast cancer. The actual chance was small because the test gives many false positives. But nearly every physician in the study overstated it. Yet many people will have to make important health decisions based on such information and on their doctors' interpretation of it.

Gigerenzer explains that a major obstacle to our understanding of numbers is that we live with an illusion of certainty. Many of us believe that HIV tests, DNA fingerprinting, and the growing number of genetic tests are absolutely certain. But even DNA evidence can produce spurious matches. We cling to our illusion of certainty because the medical industry, insurance companies, investment advisers, and election campaigns have become purveyors of certainty, marketing it like a commodity.

To avoid confusion, says Gigerenzer, we should rely on more understandable representations of risk, such as absolute risks. For example, mammography screening is said to reduce the risk of dying from breast cancer by 25 percent. In absolute terms, however, that means that out of every 1,000 women who do not participate in screening, 4 will die of breast cancer, while out of every 1,000 women who do, 3 will die. A 25 percent risk reduction sounds much more significant than a benefit that 1 out of 1,000 women will reap.
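Both figures come from the same two counts. The minimal Python sketch below uses only the illustrative numbers quoted above (4 versus 3 breast cancer deaths per 1,000 women). The helper name `risk_reduction` is hypothetical.

```python
from fractions import Fraction

def risk_reduction(deaths_without: int, deaths_with: int, group_size: int):
    """Return (relative, absolute) risk reduction for two equal-sized groups.

    Relative: deaths avoided as a share of the deaths WITHOUT screening.
    Absolute: deaths avoided as a share of EVERYONE in the group.
    """
    avoided = deaths_without - deaths_with
    relative = Fraction(avoided, deaths_without)
    absolute = Fraction(avoided, group_size)
    return relative, absolute

# Illustrative counts from the text: 4 vs. 3 deaths per 1,000 women.
rel, abs_ = risk_reduction(deaths_without=4, deaths_with=3, group_size=1000)
print(f"relative risk reduction: {float(rel):.0%}")                        # 25%
print(f"absolute risk reduction: {abs_.numerator} in {abs_.denominator}")  # 1 in 1000
```

Both numbers are correct. They differ only in the denominator: the relative figure divides the one avoided death by the 4 deaths without screening, while the absolute figure divides it by all 1,000 women.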

This eye-opening book explains how we can overcome our ignorance of numbers and better understand the risks we may be taking with our money, our health, and our lives.



PART I

DARE TO KNOW

. . . in this world there is nothing certain but death and taxes.

Benjamin Franklin

1

UNCERTAINTY

Susan’s Nightmare

During a routine medical visit at a Virginia hospital in the mid-1990s, Susan, a 26-year-old single mother, was screened for HIV. She used illicit drugs, but not intravenously, and she did not consider herself at risk of having the virus. But a few weeks later the test came back positive, which at the time amounted to a terminal diagnosis. The news left Susan shocked and distraught. Word of her diagnosis spread; her colleagues refused to touch her phone for fear of contagion, and Susan eventually lost her job. Finally, she moved into a halfway house for HIV-infected patients. While there, she had unprotected sex with another resident, thinking, “Why take precautions if the virus is already inside of you?” Out of concern for her 7-year-old son’s health, Susan decided to stop kissing him and began to worry about handling his food. The physical distance she kept from him, intended to be protective, caused her intense emotional suffering. Months later, she developed bronchitis, and the physician who treated her for it asked her to have her blood retested for HIV. “What’s the point?” she thought.

The test came back negative. Susan’s original blood sample was then retested and also showed a negative result. What had happened? When the data were entered into a computer at the Virginia hospital, Susan’s original blood test results seem to have been inadvertently exchanged with those of a patient who was HIV positive. The error not only gave Susan false despair but also gave the other patient false hope.

The fact that an HIV test could give a false positive result was news to Susan. At no point did a health care provider inform her that laboratories, which run two tests for HIV (the ELISA and Western blot) on each blood sample, occasionally make mistakes. Instead, she was told repeatedly that HIV test results are absolutely conclusive—or rather, that although one test might give false positives, if her other, “confirmatory” test on her initial blood sample also came out positive, the diagnosis was absolutely certain.

By the end of her ordeal, Susan had lived for 9 months in the grip of a terminal diagnosis for no reason except that her medical counselors believed wrongly that HIV tests are infallible. She eventually filed suit against her doctors for making her suffer from the illusion of certainty. The result was a generous settlement, with which she bought a house. She also stopped taking drugs and experienced a religious conversion. The nightmare had changed her life.

Prozac’s Side Effects

A psychiatrist friend of mine prescribes Prozac to his depressive patients. Like many drugs, Prozac has side effects. My friend used to inform each patient that he or she had a 30 to 50 percent chance of developing a sexual problem, such as impotence or loss of sexual interest, from taking the medication. Hearing this, many of his patients became concerned and anxious. But they did not ask further questions, which had always surprised him. After learning about the ideas presented in this book, he changed his method of communicating risks. He now tells patients that out of every ten people to whom he prescribes Prozac, three to five experience a sexual problem. Mathematically, these numbers are the same as the percentages he used before. Psychologically, however, they made a difference. Patients who were informed about the risk of side effects in terms of frequencies rather than percentages were less anxious about taking Prozac—and they asked questions such as what to do if they were among the three to five people. Only then did the psychiatrist realize that he had never checked how his patients understood what “a 30 to 50 percent chance of developing a sexual problem” meant. It turned out that many of them had thought that something would go awry in 30 to 50 percent of their sexual encounters. For years, my friend had simply not noticed that what he intended to say was not what his patients heard.

The First Mammogram

When women turn 40, their gynecologists typically remind them that it is time to undergo biennial mammography screening. Think of a family friend of yours who has no symptoms or family history of breast cancer. On her physician’s advice, she has her first mammogram. It is positive. You are now talking to your friend, who is in tears and wondering what a positive result means. Is it absolutely certain that she has breast cancer, or is the chance 99 percent, 95 percent, 90 percent, 50 percent, or something else?

I will give you the information relevant to answering this question, and I will do it in two different ways. First I will present the information in probabilities, as is usual in medical texts.1 Don’t worry if you’re confused; many, if not most, people are. That’s the point of the demonstration. Then I will give you the same information in a form that turns your confusion into insight. Ready?

The probability that a woman of age 40 has breast cancer is about 1 percent. If she has breast cancer, the probability that she tests positive on a screening mammogram is 90 percent. If she does not have breast cancer, the probability that she nevertheless tests positive is 9 percent. What are the chances that a woman who tests positive actually has breast cancer?

Most likely, the way to an answer seems foggy to you. Just let the fog sit there for a moment and feel the confusion. Many people in your situation think that the probability of your friend’s having breast cancer, given that she has a positive mammogram, is about 90 percent. But they are not sure; they don’t really understand what to do with the percentages. Now I will give you the same information again, this time not in probabilities but in what I call natural frequencies:

Think of 100 women. One has breast cancer, and she will probably test positive. Of the 99 who do not have breast cancer, 9 will also test positive. Thus, a total of 10 women will test positive. How many of those who test positive actually have breast cancer?

Now it is easy to see that only 1 woman out of 10 who test positive actually has breast cancer. This is a chance of 10 percent, not 90 percent. The fog in your mind should have lifted by now. A positive mammogram is not good news. But given the relevant information in natural frequencies, one can see that the majority of women who test positive in screening do not really have breast cancer.
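The two routes to the answer can be compared in a short Python sketch. It assumes only the three numbers given above: a 1 percent base rate, 90 percent sensitivity, and a 9 percent false-positive rate. The function names are hypothetical. The first function applies Bayes' rule to the conditional probabilities, and the second simply counts women, as in the natural-frequency version.

```python
def posterior_from_probabilities(base_rate, sensitivity, false_positive_rate):
    """P(cancer | positive test) via Bayes' rule on conditional probabilities."""
    true_pos = base_rate * sensitivity                  # has cancer AND tests positive
    false_pos = (1 - base_rate) * false_positive_rate   # no cancer BUT tests positive
    return true_pos / (true_pos + false_pos)

def posterior_from_frequencies(population, base_rate, sensitivity, false_positive_rate):
    """Same question answered by counting whole women (natural frequencies).

    Returns (women with cancer who test positive, all women who test positive).
    """
    with_cancer = round(population * base_rate)                    # 1 of 100
    without_cancer = population - with_cancer                      # 99 of 100
    sick_positive = round(with_cancer * sensitivity)               # 0.9 -> 1
    healthy_positive = round(without_cancer * false_positive_rate) # 8.91 -> 9
    return sick_positive, sick_positive + healthy_positive

p = posterior_from_probabilities(0.01, 0.90, 0.09)
hits, positives = posterior_from_frequencies(100, 0.01, 0.90, 0.09)
print(f"Bayes' rule: {p:.3f}")                                  # 0.092
print(f"Counting: {hits} of {positives} positives have cancer")  # 1 of 10
```

Bayes' rule gives about 9 percent, and counting gives 1 out of 10. The small gap comes from rounding. Among 100 women, the expected number of true positives is 0.9 and of false positives 8.91, and natural frequencies round these to 1 and 9. Either way, the answer is nowhere near 90 percent.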

DNA Tests

Imagine you have been accused of committing a murder and are standing before the court. There is only one piece of evidence against you, but it is a potentially damning one: Your DNA matches a trace found on the victim. What does this match imply? The court calls an expert witness who gives this testimony:

“The probability that this match has occurred by chance is 1 in 100,000.”

You can already see yourself behind bars. However, imagine that the expert had phrased the same information differently:

“Out of every 100,000 people, 1 will show a match.”

Now this makes us ask: how many people are there who could have committed this murder? If you live in a city with 1 million adult inhabitants, then you would expect about 10 inhabitants whose DNA would match the sample on the victim. On its own, this fact seems very unlikely to land you behind bars.
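The step from the expert's probability to a head count is a single multiplication. Here is a minimal sketch, assuming (as in the example) a city of 1 million adults and a random-match probability of 1 in 100,000. The function name is hypothetical.

```python
def expected_coincidental_matches(population: int, random_match_prob: float) -> float:
    """Expected number of people in a population whose DNA matches by chance alone."""
    return population * random_match_prob

# Example figures from the text: 1 million adults, 1-in-100,000 match probability.
matches = expected_coincidental_matches(1_000_000, 1 / 100_000)
print(f"about {matches:.0f} people would match")  # about 10 people would match
```

The point is the reframing. A probability of 1 in 100,000 sounds damning, but applied to a large population it predicts several people who match by chance alone.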

Technology Needs Psychology

Susan’s ordeal illustrates the illusion of certainty; the Prozac and DNA stories are about risk communication; and the mammogram scenario is about drawing conclusions from numbers. This book presents tools to help people deal with these kinds of situations, that is, to understand and communicate uncertainties.

One simple tool is what I call “Franklin’s law”: Nothing is certain but death and taxes.2 If Susan (or her doctors) had learned this law in school, she might have asked immediately for a second HIV test on a different blood sample, which most likely would have spared her the nightmare of living with a diagnosis of HIV. This is not to say, however, that the results of a second test would have been absolutely certain either. Because the error was due to the accidental confusion of two test results, a second test would most likely have revealed it, as later happened. If, instead, the error had been due to antibodies in her blood that mimic HIV antibodies, then the second test might have confirmed the first one. But whatever the risk of error, it was her doctors’ responsibility to inform her that the test results were uncertain. Sadly, Susan’s case is not an exception. In this book, we will meet medical experts, legal experts, and other professionals who continue to tell the lay public that DNA fingerprinting, HIV tests, and other modern technologies are foolproof, period.

Franklin’s law helps us to overcome the illusion of certainty by making us aware that we live in a twilight of uncertainty, but it does not tell us how to go one step further and deal with risk. Such a step is illustrated, however, in the Prozac story, where a mind tool is suggested that can help people understand risks: When thinking and talking about risks, use frequencies rather than probabilities. Frequencies can facilitate risk communication for several reasons, as we will see. The psychiatrist’s statement “You have a 30 to 50 percent chance of developing a sexual problem” left the reference class unclear: Does the percentage refer to a class of people such as patients who take Prozac, to a class of events such as a given person’s sexual encounters, or to some other class? To the psychiatrist it was clear that the statement referred to his patients who take Prozac, whereas his patients thought that the statement referred to their own sexual encounters. Each person chose a reference class based on his or her own perspective. Frequencies, such as “3 out of 10 patients,” in contrast, make the reference class clear, reducing the possibility of miscommunication.

My agenda is to present mind tools that can help my fellow human beings to improve their understanding of the myriad uncertainties in our modern technological world. The best technology is of little value if people do not comprehend it.

Dare to know!

Kant

2

THE ILLUSION OF CERTAINTY

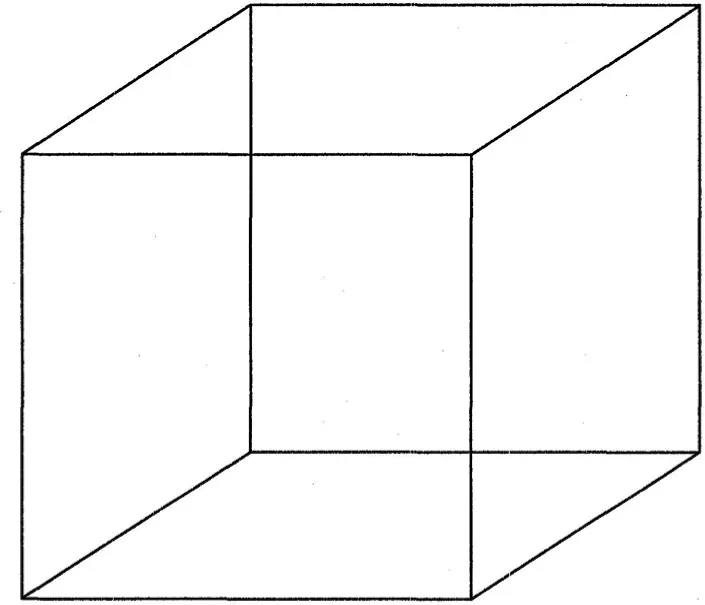

The creation of certainty seems to be a fundamental tendency of human minds.1 The perception of simple visual objects reflects this tendency. At an unconscious level, our perceptual systems automatically transform uncertainty into certainty, as depth ambiguities and depth illusions illustrate. The Necker cube, shown in Figure 2-1, has ambiguous depth because its two-dimensional lines do not indicate which face is in front and which is in back. When you look at it, however, you do not see an ambiguous figure; you see it one way or the other. After a few seconds of fixating on the cube, you experience a gestalt switch: you now see the other cube, but again unambiguously.

FIGURE 2-1. The Necker cube. If you fixate on the drawing, your perceptual impression shifts between two different cubes—one projecting into and the other out of the page.

FIGURE 2-2. Turning the tables. These two tables are of identical size and shape. This illusion was designed by Roger Shepard (1990). (Reproduced with permission of W. H. Freeman and Company.)

Roger Shepard’s “Turning the Tables,” a depth illusion shown in Figure 2-2, illustrates how our perceptual system constructs a single, certain impression from uncertain cues. You probably see the table on the left as having a more elongated shape than the one on the right. The two surfaces, however, have exactly the same shape and area, which you can verify by tracing the outlines on a piece of paper. I once showed this illustration in a presentation during which I hoped to make an audience of physicians question their sense of certainty (“often wrong but never in doubt”). One physician simply did not believe that the two surfaces were the same shape. I asked him how much he wanted to bet, and he offered me $250. By the end of my talk, he had disappeared.

What is going on in our minds? Unconsciously, the human perceptual system constructs representations of three-dimensional objects from incomplete information, in this case from a two-dimensional drawing. Consider the longer side of each of the two tables. Their projections on the retina have the same length. But the perspective cues in the drawing indicate that the longer side of the left-hand table extends into depth, whereas that of the right-hand table does not (and vice versa for their shorter sides). Our perceptual systems assume that a line of a given length on the retina that extends into depth is actually longer than one that does not, and they correct for that. This correction makes the left-hand table surface appear longer and narrower.

Note that the perceptual system does not fall prey to illusory certainty; our conscious experience does. The perceptual system analyzes incomplete and ambiguous information and “sells” its best guess to conscious experience as a definite product. Inferences about depth, orientation, and length are provided automatically by underlying neural machinery, which means that any understanding we gain about the nature of the illusion is virtually powerless to overcome the illusion itself. Look back at the two tables; they will still appear to be different shapes. Even if one understands what is happening, the unconscious continues to deliver the same perception to the conscious mind. The great nineteenth-century scientist Hermann von Helmholtz coined the term “unconscious inference” to refer to the inferential nature of perception.2 The illusion of certainty is already manifest in our most elementary perceptual experiences of size and shape. Direct perceptual experience, however, is not the only kind of belief where certainty is manufactured.

Technology and Certainty

Fingerprinting ...

Table of contents

- Cover

- Dedication

- Acknowledgments

- Part I: Dare to Know

- Part II: Understanding Uncertainties in the Real World

- Part III: From Innumeracy to Insight

- Glossary

- References

- Notes

- Index

- Copyright


Calculated Risks, by Gerd Gigerenzer, is available in PDF and/or ePUB format and is listed under Social Sciences and Education Theory & Practice.