![]()

1 The Formal Language Hierarchy

1.1. Formal Languages

A current Google search for “Chomsky Hierarchy” yields some 60,000 entries. They go beyond linguistics into computer science, algebra, statistics, communication theory, molecular biology, and even biophysics. Wikipedia reminds us that, within “the area of formal languages, the Chomsky Hierarchy (occasionally referred to as Chomsky-Schützenberger hierarchy) is a containment hierarchy a of classes of formal grammars … described by Noam Chomsky in 1956.” It then cites Chomsky’s famous 1956Three Models for the Description of Language, and acknowledges Marco Schützenberger for having played a pivotal role in the development of the theory of formal languages. So as not to personalize matters, let’s refer to this notion as the Formal Language Hierarchy (FLH).1

Let’s examine examples of formal languages that are progressively more complex. Consider mere vowel combinations, starting with the English five in (1a):

- (1) a. a, e, i, o, u, … b. a, aa, aaa, aaaa, … an c… . an, em, il, ok, uj, …

Should English have more vowels (say, seven) or less (e.g., three), we could just list that as well, since a list is as good as any other. (1b) is slightly more nuanced, in that we repeat token vowels of type a any number of times. The notation an is meant to represent just that: a number n of identical vowels. Of course, we can repeat the other vowels too, arbitrarily, as in (1c). It is easy to show, as we do below, that grammars capable of describing strings as in (1a) are also good at generating strings as in (1b) or (1c).

Next consider (2), where the number of a’s in strings in the relevant language is supposed to be the same as the number of b’s in other strings in the language:

- (2) … an, en …

It is not possible to generate formal languages with the format in (2) with the simple grammars responsible for generating structures as in (1). This is the type of game we are interested in: What sorts of strings can a given grammar generate? And if it can only generate those as in (1), what sort of grammar is necessary to generate strings as in (2)?

(3) is similar to (2), except now with three—not just two—repeated sequences:

- (3) … an, … in, … un…

Devices that naturally capture languages with the general format in (2) cannot generate more complex languages with the format in (3). This same formal push can be taken to the extreme of generating strings as in (4), where not only are given vowel strings arbitrarily distant from one another, but in fact the number of token vowels in each case is any function f of the number of token vowels in the previous situation signaled:

- (4) … an, … if(n), … uf(f(n)) …

While this is still a computable process, it may actually be so complex that it never terminates. The point of this exercise is to track complexity by seriously examining computational outputs and the sorts of devices that generate them.

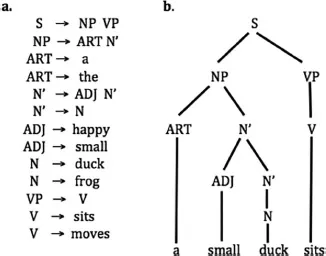

A (Chomsky) grammar is a procedure that operates on a finite set of symbols indicating, through an explicit set of rules of production, how to concatenate (link together) symbols, like the vowels above, into a string. A formal language thus generated need not have any meaning. We say that a formal (Chomsky) grammar generates a formal language, understood as a set of symbol strings exhaustively described under certain admissibility conditions. For a system of this sort to work, a finite collection of rules, relating the admissible strings in the language, can be produced and result in a computation that ideally halts at some point. By beginning with a start symbol and repeatedly selecting and applying rules until the system stops, we generate strings/sentences in the language. Such a rule application sequence is called a derivation. Grammars of this kind are finite, but they generate languages (string sets) that can be of infinite size—in fact, only such languages are interesting. Figure 1.1 gives an example of a formal grammar and some examples of strings in the language it generates.

Grammatical rules are expressed in terms of rewritable “non-terminals” (in upper case) and “terminals” (in lower case), or symbols that cannot be rewritten further—the actual elements in the sentences of the language that one ultimately observes. In a Chomsky grammar, each rule has (at least) a non-terminal symbol on the left, followed by an arrow that indicates how this symbol is to be rewritten using the non-terminal and/or terminal symbol(s) on the right. For example, the first rule in Figure 1.1 indicates that a sentence S in this language consists of a noun phrase NP followed by a verb phrase VP. (Those terms, S, NP, VP, etc. are meant as mere mnemonics; we could use any symbols we please, so long as we do it consistently.) Rules apply one at a time, so either NP or VP is rewritten next (but not both). Other non-terminals are for articles ART, parts of noun phrases N’, adjectives ADJ, nouns N and verbs V, etc., while terminals are lowercase English words. The set of strings/sentences this grammar generates is its formal language. This particular language includes a small duck sits because that can be generated by starting with S and using eight of the listed rules to generate a “parse tree” as illustrated in (b), in Figure 1.1, as readers can see for themselves. This specific language is infinite due to the recursive rule N’→ ADJ N’, which rewrites an N’ in terms of itself. Thus, the language includes sentences with an arbitrary number of adjectives, such as the happy small duck sits or the happy happy small duck sits.

Figure 1.1 (a) A small, simple, grammar consisting of 13 rules or production that collectively defines or generates a specific language; (b) illustration of a parse tree generated by this grammar.

1.2. The Turing Architecture and the Basic Formal Language Types

Alan Turing famously proposed a model of computation to study some classical logic and mathematical problems. He provided a precise description of the simplest device capable of carrying out a computation of arbitrary complexity. He described a machine that manipulates symbols on an infinite tape divided into discrete cells, according to finite rule tables: a “Turing Machine”. As its Wikipedia entry states:

The machine positions its head over a cell and “reads” (scans) the symbol there. Then, as per the symbol and its present place in a finite table of user-specified instructions, the machine (i) writes a symbol (e.g. a digit or a letter from a finite alphabet) in the cell, then (ii) either moves the tape one cell left or right … then (iii) (as determined by the observed symbol and the machine’s place in the table) either proceeds to a subsequent instruction or halts the computation.

The Church/Turing Thesis essentially conjectures that any task that can be step-wise specified can be executed by a Turing machine arranged so as to simulate the task’s logic.

None of that is to say that all formal problems are computable or decidable, the questions Turing sought to answer.2 Nor is it the case that Turing’s abstract machine could be used as an actual computer—there cannot be an infinite tape, nor would it make much sense, for effective computations, to access memory in the extremely limited way that Turing chose for his formal concerns. At the same time, what matters when considering this architecture is that it provides a rigorous way of characterizing the complexity of computational problems. The memory tape in a Turing device is a way to store instructions to be used in later computational steps. Different “memory regimes” crucially allow us to determine formal languages of different nuance, as we see next.

Chomsky and Schützenberger showed how to divide formal languages/grammars into four types, corresponding to examples (1) through (4). Table 1.1 lists these types ordered in terms of increasing formal complexity, just as examples (1) through (4) do. Each type is characterized by restrictions on the form that its rules can have. Again, in these rules, we distinguish terminal symbols (duck, the, etc.)—usually represented in lowercase—from capitalized non-terminal ones, which designate abstract groupings of other symbols. To repeat, these are non-terminal in that they are not meant to be pronounced. Greek letters are used to represent arbitrary strings of symbols, terminal or non-terminal (by convention we take α, β to also include the null string, unlike γ).

Regular grammars contain only rules in which a non-terminal X is replaced by just one terminal a or a terminal followed by an additional non-terminal Y. The examples in (1) can be described with a regular grammar, which I leave as an exercise for readers. In contrast, the grammar in Figure 1.1 is not regular, as it contains rules like S → NP VP, where the non-terminal S is rewritten as two non-terminals. The range of (regular, also called Type 3) languages that regular grammars generate is very limited.

Table 1.1 The Chomsky Hierarchy of Languages | Type of Grammar | Form of Rules | Corresponding machines |

| Regular | X → a, X → a Y | Finite state machines |

| Context-free | X → γ | Pushdown stack automata |

| Context-sensitive | α X β → α γ β | Linear bounded automata |

| Recursively enumerable | a → β | Turing machines |

Context-free grammars contain rules in which any non-terminal X can be rewritten as an arbitrary string of terminals or non-terminals γ. For example, the grammar in Figure 1.1.a is context-free. The term “context-free” indicates that replacing X with whatever string γ appears on the right side of a rule can be done regardless of the context in which X occurs. Note that “context” here is a syntactic notion. Context-free (or Type 2) languages are, in a formal sense, more complex—in terms of their structure and the computations needed to produce them—than regular languages.

As its name indicates, a context-sensitive rule involves rewriting non-terminal X in the context of string α to its left and a string β to its right (in both instances including the empty string). It may seem as if adding such a structural description for the rule to apply limits its power. While it is true that a context-sensitive rule can only apply if its context, as described, is met, the consequence of this for the sorts of (Type 1) formal languages it allows is that said languages are more complex in descriptive possibilities. In particular, examples as in (3) above require a context-sensitive grammar. So being picky with the contextual conditions in the structural description of the rule has the curious consequence of liberating the structural changes that such rules allow, thus resulting in less restrictive languages.

Continuing with progressively more elaborate rules that yield less and less restrictive languages, we finally have recursively enumerable grammars. For rules of the relevant sort at this level, any string of terminals or non-terminal α (including the empty string) can be rewritten as any other such string β (again including the empty string), resulting in totally unrestricted (if still computable) Type 0 languages.3 The artificial example in (4) is meant to be of this sort, where the functions invoked in the superscripts could be literally anything that is computable. For example, imagine eliminating every single vowel in this sentence, yielding the string mgn lmntng vry sngl vwl n ths sntnc. That is surely a computable task, which a rule of Type 0 would allow us to perform.

1.3. Natural Language Examples

In the 1950s and early 1960s, Chomsky considered a theory of syntax based on identifying possible human languages among the formally existing ones. Intuitively, while human languages are rich and intricate in structure, it is not the case that literally any computable function that can be characterized as a formal language shows up as a natural language. Many intuitive examples make this point. For instance, no language that linguists have discovered accepts only palindromes like (5a) (ignoring spaces), or other bizarre concoctions like a Fibonacci growth as in (5b):

- (5)

- a. Was it a car or a cat I saw?

- b. Black,

Then,

White are,

All I see,

In my infancy.

Red and yellow then came to be.

Of course, poets or songwriters can impose restrictions as in (5a) or (5b) (from the song Lateralus by Tool)—or many other such restrictions, including infinitely many that are probably not aesthetically pleasi...