
1

CALIBRATION PRINCIPLES

After completing this chapter, you should be able to:

Define key terms relating to calibration and interpret the meaning of each.

Understand traceability requirements and how they are maintained.

Describe characteristics of a good control system technician.

Describe differences between bench calibration and field calibration. List the advantages and disadvantages of each.

Describe the differences between loop calibration and individual instrument calibration. List the advantages and disadvantages of each.

List the advantages and disadvantages of classifying instruments according to process importance—for example, critical, non-critical, reference only, OSHA, EPA, etc.

1.1 WHAT IS CALIBRATION?

There are as many definitions of calibration as there are methods. According to ISA’s The Automation, Systems, and Instrumentation Dictionary, the word calibration is defined as “a test during which known values of measurand are applied to the transducer and corresponding output readings are recorded under specified conditions.” The definition includes the capability to adjust the instrument to zero and to set the desired span. Put more plainly, calibration is a comparison of measuring equipment against a standard instrument of higher accuracy to detect, correlate, adjust, rectify, and document the accuracy of the instrument being compared.

Typically, the calibration of an instrument is checked at several points throughout its calibration range. The calibration range is defined as “the region between the limits within which a quantity is measured, received or transmitted, expressed by stating the lower and upper range values.” The limits are defined by the zero and span values: the zero value is the lower end of the range, and span is the algebraic difference between the upper and lower range values. The calibration range may differ from the instrument range, which refers to the capability of the instrument. For example, an electronic pressure transmitter may have a nameplate instrument range of 0-to-750 pounds per square inch, gauge (psig) and an output of 4-to-20 milliamps (mA). However, the engineer has determined that the instrument will be calibrated over only part of that range, so the calibration range is specified as 0-to-300 psig = 4-to-20 mA. In this example, the zero input value is 0 psig and the zero output value is 4 mA. The input span is 300 psig and the output span is 16 mA.
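To make the relationship between input and output concrete, the sketch below computes the ideal output at several test points for the 0-to-300 psig = 4-to-20 mA example. It assumes a linear transmitter, and the five test points (0, 25, 50, 75, and 100% of range) are a common choice rather than something this section prescribes. The helper name `expected_output` is hypothetical.

```python
# Hypothetical helper: assumes a linear transmitter, 0-to-300 psig = 4-to-20 mA.
LRV, URV = 0.0, 300.0        # lower/upper range values of the input, psig
OUT_LO, OUT_HI = 4.0, 20.0   # output range, mA

def expected_output(psig):
    """Ideal output (mA) for a given input (psig) on a linear 4-to-20 mA scale."""
    input_span = URV - LRV          # 300 psig
    output_span = OUT_HI - OUT_LO   # 16 mA
    return OUT_LO + (psig - LRV) / input_span * output_span

# Assumed five-point check at 0, 25, 50, 75 and 100 % of the calibration range.
for pct in (0, 25, 50, 75, 100):
    psig = LRV + pct / 100 * (URV - LRV)
    print(f"{pct:3d}%  {psig:6.1f} psig -> {expected_output(psig):5.2f} mA")
```

Note that the nameplate range (0-to-750 psig) never enters the calculation. Only the calibration range does.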

Different terms may be used at your facility. Just be careful not to confuse the range the instrument is capable of with the range for which the instrument has been calibrated.

1.2 WHAT ARE THE CHARACTERISTICS OF A CALIBRATION?

Calibration Tolerance: Every calibration should be performed to a specified tolerance. The terms tolerance and accuracy are often used incorrectly. In ISA’s The Automation, Systems, and Instrumentation Dictionary, the definitions for each are as follows:

Accuracy: The ratio of the error to the full scale output or the ratio of the error to the output, expressed in percent span or percent reading, respectively.

Tolerance: Permissible deviation from a specified value; may be expressed in measurement units, percent of span, or percent of reading.

As you can see from the definitions, there are subtle differences between the terms. It is recommended that tolerances for the calibrations performed at your facility be specified in measurement units. Specifying an actual value eliminates mistakes caused by calculating percentages of span or reading. Tolerances should also be specified in the units measured for the calibration.
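The definitions allow a tolerance to be stated in three forms. The sketch below converts the two percentage forms into measurement units, which shows why they invite calculation mistakes: a percent-of-reading tolerance is different at every test point. The 0.5%-of-span and 1%-of-reading figures are made-up values for illustration, and both function names are hypothetical.

```python
def tol_from_percent_span(pct, lrv, urv):
    """Tolerance in measurement units for a percent-of-span specification."""
    return pct * (urv - lrv) / 100.0

def tol_from_percent_reading(pct, reading):
    """Tolerance in measurement units for a percent-of-reading specification."""
    return pct * abs(reading) / 100.0

# Hypothetical specs on a 0-to-300 psig calibration range.
print("0.5 % of span:", tol_from_percent_span(0.5, 0.0, 300.0), "psig at every point")
for reading in (30.0, 150.0, 300.0):
    # A percent-of-reading tolerance must be recalculated at each test point.
    print(f"1 % of reading at {reading} psig:", tol_from_percent_reading(1.0, reading), "psig")
```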

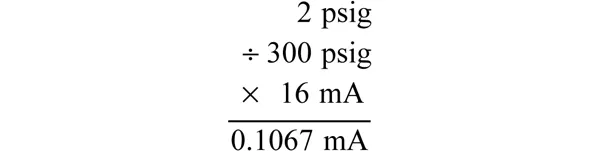

For example, suppose you are assigned to calibrate the previously mentioned 0-to-300 psig pressure transmitter with a specified calibration tolerance of ±2 psig. The output tolerance would be:

$$\pm 2\ \text{psig} \times \frac{16\ \text{mA}}{300\ \text{psig}} = \pm 0.1067\ \text{mA}$$

The calculated tolerance is rounded down to 0.10 mA, because rounding to 0.11 mA would exceed the calculated tolerance. It is recommended that both ±2 psig and ±0.10 mA tolerances appear on the calibration data sheet if the remote indications and output milliamp signal are recorded.
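The conversion and the round-down rule can be sketched as follows. The function name and the two-decimal default are assumptions for illustration. The key point is that `math.floor` is used instead of ordinary rounding, so the recorded tolerance never exceeds the calculated one.

```python
import math

def output_tolerance(input_tol, input_span, output_span, decimals=2):
    """Convert an input tolerance to output units.

    Returns (calculated, recorded), where `recorded` is rounded DOWN to
    `decimals` places so it never exceeds the calculated tolerance."""
    calculated = input_tol * output_span / input_span
    factor = 10 ** decimals
    # round() first guards against float noise such as 10.999999999 -> 10
    recorded = math.floor(round(calculated * factor, 9)) / factor
    return calculated, recorded

calc, rec = output_tolerance(2.0, 300.0, 16.0)   # ±2 psig on 0-to-300 psig = 4-to-20 mA
print(f"calculated ±{calc:.4f} mA, recorded ±{rec:.2f} mA")
# prints: calculated ±0.1067 mA, recorded ±0.10 mA
```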

Note that the manufacturer’s specified accuracy for this instrument may be 0.25% full scale (FS). Calibration tolerances should not be assigned from the manufacturer’s specification alone. Instead, they should be determined from a combination of factors, including:

• Requirements of the process

• Capability of available test equipment

• Consistency with similar instruments at your facility

• Manufacturer’s specified tolerance

Example: The process requires ±5°C, the available test equipment is capable of ±0.25°C, and the manufacturer’s stated accuracy is ±0.25°C. The specified calibration tolerance must lie between the manufacturer’s specified tolerance and the process requirement. Additionally, the test equipment must be capable of the tolerance needed. A calibration tolerance of ±1°C might be assigned for consistency with similar instruments and to meet the recommended accuracy ratio of 4:1.
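The constraints in this example can be written as a simple check: a candidate tolerance must be no looser than the process requirement, no tighter than the manufacturer's specification, and at least four times the test equipment's tolerance. This is a sketch with a hypothetical function name. The fourth factor, consistency with similar instruments, is a judgment call that the code does not attempt to encode.

```python
def check_tolerance(candidate, process_req, mfr_spec, test_equip, min_ratio=4.0):
    """Return the reasons a candidate tolerance fails (empty list = acceptable).
    All arguments are ± values in the same measurement units."""
    problems = []
    if candidate > process_req:
        problems.append("looser than the process requirement")
    if candidate < mfr_spec:
        problems.append("tighter than the manufacturer's specification")
    ratio = candidate / test_equip
    if ratio < min_ratio:
        problems.append(f"accuracy ratio {ratio:.1f}:1 is below {min_ratio:.0f}:1")
    return problems

# Values from the example: process ±5 °C, test equipment ±0.25 °C, manufacturer ±0.25 °C.
for tol in (0.25, 0.5, 1.0, 5.0):
    issues = check_tolerance(tol, process_req=5.0, mfr_spec=0.25, test_equip=0.25)
    print(f"±{tol} °C:", "OK" if not issues else "; ".join(issues))
```

Both ±1°C and ±5°C pass these checks. Choosing ±1°C comes from the consistency factor, which is why it is left to the person assigning the tolerance.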

Accuracy Ratio: This term was used in the past to describe the relationship between the accuracy of the test standard and the accuracy of the instrument under test. The term is still used by those who do not understand uncertainty calculations (uncertainty is described below). A good rule of thumb is to ensure an accuracy ratio of 4:1 when performing calibrations. This means the instrument or standard used should be four times more accurate than the instrument being checked. Therefore, the test equipment (such as a field standard) used to calibrate the process instrument should be four times more accurate than the process instrument, the laboratory standard used to calibrate the field standard should be four times more accurate than the field standard, and so on.
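Applying the 4:1 rule at every level means each step up the hierarchy needs a tolerance four times tighter than the step below it. The sketch below, with a hypothetical function name, starts from the ±1°C process instrument tolerance used earlier and shows how quickly the requirement shrinks. This is one way to see why a 4:1 ratio is hard to maintain all the way up the chain.

```python
def required_tolerances(instrument_tol, levels, ratio=4.0):
    """Largest tolerance each higher-level standard may have if every
    level must be `ratio` times more accurate than the one it calibrates."""
    tols = [instrument_tol]
    for _ in range(levels):
        tols.append(tols[-1] / ratio)
    return tols

names = ["process instrument", "field standard", "laboratory standard"]
for name, tol in zip(names, required_tolerances(1.0, levels=2)):
    print(f"{name:20s} ±{tol:g} °C")
```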

With today's technology, an accuracy ratio of 4:1 is becoming more difficult to achieve. Why is a 4:1 ratio recommended? Ensuring a 4:1 ratio minimizes the effect of the standard's accuracy on the overall calibration accuracy. If a higher-level standard is later found to be out of tolerance by a factor of two, for example, the calibrations performed using that standard are less likely to be compromised.

Suppose we use our previous example of the test equipment with a tolerance of ±0.25°C and it is found to be 0.5°C out of tolerance during a scheduled calibration. Since we took into consideration an accuracy ratio of 4:1 and assigned a calibration tolerance of ±1°C to the process instrument, it is less likely that our calibration performed using that standard is compromised.
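A rough way to quantify this example is to compare the standard's error with the tolerance assigned to the process instrument. The sketch below assumes the standard's error was 0.5°C and uses a simple worst-case sum (instrument tolerance plus standard error) as the bound on the instrument's true error. This linear sum is an illustrative assumption. A rigorous treatment would use the uncertainty calculations mentioned above.

```python
STANDARD_ERROR = 0.5   # °C, error found in the ±0.25 °C test standard (from the example)
PROCESS_REQ = 5.0      # °C, process requirement (from the example)

def error_share(standard_error, instrument_tol):
    """Standard's error expressed as a fraction of the instrument's calibration tolerance."""
    return abs(standard_error) / instrument_tol

def worst_case_bound(instrument_tol, standard_error):
    """Simple worst-case bound on the instrument's true error: the deviation it
    was allowed against the standard plus the standard's own error."""
    return instrument_tol + abs(standard_error)

for tol in (1.0, 0.25):
    print(f"±{tol} °C tolerance: standard error = {error_share(STANDARD_ERROR, tol):.0%} of it, "
          f"true error could reach ±{worst_case_bound(tol, STANDARD_ERROR)} °C "
          f"(process requires ±{PROCESS_REQ} °C)")
```

With a ±1°C tolerance, the standard's error uses half of the band. With ±0.25°C, the standard's error alone is twice the band.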

The out-of-tolerance standard still needs to be investigated by reverse traceability of all calibrations performed using the test standard. However, our assurance is high that the process instrument is within tolerance. If we had arbitrarily assigned a calibration tolerance of ±0.25°C to the process instrument, or used test equipment with a calibration tolerance of ±1°C, we would not have the assurance that our process instrument is within calibration tolerance. This leads us to traceability.
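Reverse traceability amounts to a search forward through the calibration records: find every item calibrated with the suspect standard, then every item calibrated with those items, and so on. The sketch below performs a breadth-first search over a hypothetical calibration log. The equipment IDs, the log format, and the rule that only calibrations on or after a given date are suspect are all assumptions for illustration. The log is assumed to be in chronological order.

```python
from collections import deque
from datetime import date

# Hypothetical calibration log, in chronological order:
# (standard used, item calibrated, date performed).
CAL_LOG = [
    ("FS-07", "TT-103", date(2022, 11, 20)),
    ("LAB-01", "FS-07", date(2023, 2, 1)),
    ("FS-07", "TT-101", date(2023, 3, 15)),
    ("FS-07", "TT-102", date(2023, 4, 2)),
    ("FS-09", "TT-104", date(2023, 4, 5)),
]

def reverse_trace(log, suspect, since):
    """Return {item: date} for every item calibrated, directly or indirectly,
    with `suspect` on or after `since` (e.g. its last good calibration)."""
    affected = {}
    queue = deque([(suspect, since)])
    while queue:
        std, start = queue.popleft()
        for used, item, when in log:
            if used == std and when >= start and item != suspect and item not in affected:
                affected[item] = when
                # The item may itself be a standard; only its later work is suspect.
                queue.append((item, when))
    return affected

print(list(reverse_trace(CAL_LOG, "FS-07", since=date(2023, 1, 1))))
print(list(reverse_trace(CAL_LOG, "LAB-01", since=date(2023, 1, 1))))
```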

Traceability: All calibrations should be performed traceable to a nationally or internationally recognized standard. For example, in the United States, the National Institute of Standards and Technology (NIST), formerly the National Bureau of Standards (NBS), maintains the nationally recognized standards. Traceability is defined by ANSI/NCSL Z540-1-1994 (which replaced MIL-STD-45662A) as “the property of a result of a measurement whereby it can be related to appropriate standards, generally national or international standards, through an unbroken chain of comparisons.” Note that this does not mean a calibration shop needs to have its standards calibrated with a primary standard. It means that the calibrations performed are traceable to NIST through all the standards used to calibrate the standards, no matter how many levels exist between the shop and NIST.
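The "unbroken chain of comparisons" can be pictured as a walk up the calibration records, from the shop's standard to the national standard. The sketch below follows that chain and reports where it breaks. The record layout and the IDs are hypothetical. Treating an overdue calibration as a break in the chain is an illustrative policy assumption, not part of the quoted definition.

```python
from datetime import date

# Hypothetical records: standard ID -> (ID of the standard that calibrated it, calibration due date).
STANDARDS = {
    "FS-07": ("LAB-01", date(2024, 2, 1)),
    "LAB-01": ("CAL-LAB-REF", date(2024, 6, 30)),
    "CAL-LAB-REF": ("NIST", date(2025, 1, 15)),
}

def trace_chain(std_id, records, on, root="NIST"):
    """Follow the chain of comparisons from `std_id` up to `root` as of date `on`.
    Raises ValueError if the chain is broken (missing record, overdue link, or loop)."""
    chain = [std_id]
    current = std_id
    while current != root:
        if current not in records:
            raise ValueError(f"broken chain: no calibration record for {current}")
        parent, due = records[current]
        if on > due:  # assumed policy: an overdue link breaks traceability
            raise ValueError(f"broken chain: {current} was overdue on {on}")
        if parent in chain:
            raise ValueError(f"loop detected at {parent}")
        chain.append(parent)
        current = parent
    return chain

print(" -> ".join(trace_chain("FS-07", STANDARDS, on=date(2023, 9, 1))))
```

The number of levels between the shop and the root does not matter. What matters is that every link is present and valid.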

Traceability is accomplished by ensuring the test standards we use are routinely calibrated by “higher level” reference standards. ...