Hands-On Deep Learning with Go

A practical guide to building and implementing neural network models using Go

Gareth Seneque, Darrell Chua

- 242 pages

- English

- ePUB (mobile friendly)

Book Information

Apply modern deep learning techniques to build and train deep neural networks using Gorgonia

Key Features

- Gain a practical understanding of deep learning using Golang

- Build complex neural network models using Go libraries and Gorgonia

- Take your deep learning model from design to deployment with this handy guide

Book Description

Go is an open source programming language developed at Google for handling large-scale projects efficiently. The Go ecosystem includes powerful deep learning libraries such as Gorgonia, which you can use to implement techniques such as deep Q-networks (DQNs) and to accelerate training on GPUs with CUDA. With this book, you'll use these tools to train and deploy scalable deep learning models from scratch.

This deep learning book begins by introducing you to a variety of tools and libraries available in Go. It then walks you through building neural networks, including the activation functions and learning algorithms that make them work. You'll also learn how to build advanced architectures such as autoencoders, restricted Boltzmann machines (RBMs), convolutional neural networks (CNNs), recurrent neural networks (RNNs), and more. Finally, you'll see how to scale model deployments on AWS cloud infrastructure for training and inference.

By the end of this book, you'll have mastered the art of building, training, and deploying deep learning models in Go to solve real-world problems.

What you will learn

- Explore the Go ecosystem of libraries and communities for deep learning

- Get to grips with neural networks, their history, and how they work

- Design and implement deep neural networks in Go

- Get a strong foundation in concepts such as backpropagation and momentum

- Build variational autoencoders and restricted Boltzmann machines using Go

- Build models with CUDA and benchmark CPU and GPU models
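Momentum, listed above, changes plain gradient descent by accumulating a velocity that smooths successive updates: v ← μv − ηg, then w ← w + v. The following is a minimal, standard-library-only Go sketch of that classical momentum rule. It assumes a toy quadratic loss with an analytic gradient in place of backpropagation, and the function names (`momentumStep`, `quadraticGrad`, `minimize`), the learning rate of 0.1, and the momentum coefficient of 0.9 are illustrative choices, not code from the book or from Gorgonia.

```go
package main

import "fmt"

// momentumStep applies one classical-momentum update to params in place.
// velocity holds the running update direction between calls; lr is the
// learning rate and mu the momentum coefficient (illustrative values below).
func momentumStep(params, grads, velocity []float64, lr, mu float64) {
	for i := range params {
		velocity[i] = mu*velocity[i] - lr*grads[i]
		params[i] += velocity[i]
	}
}

// quadraticGrad returns the gradient of the toy loss f(w) = sum_i (w_i - 3)^2,
// whose minimum is at w_i = 3. A real network would get this from backprop.
func quadraticGrad(w []float64) []float64 {
	g := make([]float64, len(w))
	for i, wi := range w {
		g[i] = 2 * (wi - 3)
	}
	return g
}

// minimize runs the given number of momentum steps from a fixed start point.
func minimize(steps int) []float64 {
	w := []float64{0, 10}
	v := make([]float64, len(w)) // velocity starts at zero
	for s := 0; s < steps; s++ {
		momentumStep(w, quadraticGrad(w), v, 0.1, 0.9)
	}
	return w
}

func main() {
	w := minimize(200)
	fmt.Printf("w after 200 steps: [%.4f %.4f]\n", w[0], w[1])
}
```

Both weights oscillate around 3 before settling there; setting `mu` to 0 recovers plain gradient descent, which makes the effect of the velocity term easy to compare.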

Who this book is for

This book is for data scientists, machine learning engineers, and AI developers who want to build state-of-the-art deep learning models using Go. Familiarity with basic machine learning concepts and Go programming is required to get the best out of this book.

Section 1: Deep Learning in Go, Neural Networks, and How to Train Them

- Chapter 1, Introduction to Deep Learning in Go

- Chapter 2, What Is a Neural Network and How Do I Train One?

- Chapter 3, Beyond Basic Neural Networks - Autoencoders and Restricted Boltzmann Machines

- Chapter 4, CUDA - GPU-Accelerated Training

Introduction to Deep Learning in Go

- Why DL?

- DL: history and applications

- Overview of ML in Go

- Using Gorgonia

Introducing DL

Why DL?

DL – a history

- The idea of AI

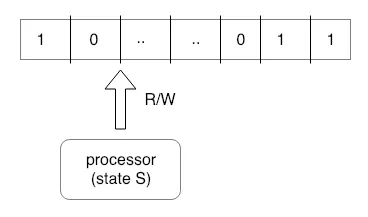

- The beginnings of computer science/information theory

- Current academic work on the state and future of DL systems

Table of Contents

- Title Page

- Copyright and Credits

- About Packt

- Contributors

- Preface

- Section 1: Deep Learning in Go, Neural Networks, and How to Train Them

- Introduction to Deep Learning in Go

- What Is a Neural Network and How Do I Train One?

- Beyond Basic Neural Networks - Autoencoders and RBMs

- CUDA - GPU-Accelerated Training

- Section 2: Implementing Deep Neural Network Architectures

- Next Word Prediction with Recurrent Neural Networks

- Object Recognition with Convolutional Neural Networks

- Maze Solving with Deep Q-Networks

- Generative Models with Variational Autoencoders

- Section 3: Pipeline, Deployment, and Beyond!

- Building a Deep Learning Pipeline

- Scaling Deployment

- Other Books You May Enjoy