Deep Learning with Keras

Antonio Gulli, Sujit Pal

- 318 pages

- English

- ePUB (mobile-friendly)



About this book

Get to grips with the basics of Keras to implement fast and efficient deep-learning models.

About This Book

- Implement various deep-learning algorithms in Keras and see how deep learning can be used in games
- See how various deep-learning models and practical use cases can be implemented using Keras
- A practical, hands-on guide with real-world examples to give you a strong foundation in Keras

Who This Book Is For

If you are a data scientist with experience in machine learning or an AI programmer with some exposure to neural networks, you will find this book a useful entry point to deep learning with Keras. A knowledge of Python is required.

What You Will Learn

- Optimize step-by-step functions on a large neural network using the backpropagation algorithm
- Fine-tune a neural network to improve the quality of results
- Use deep learning for image and audio processing
- Use Recursive Neural Tensor Networks (RNTNs) to outperform standard word embeddings in special cases
- Identify problems for which Recurrent Neural Network (RNN) solutions are suitable
- Explore the process required to implement autoencoders
- Evolve a deep neural network using reinforcement learning

In Detail

This book starts by introducing you to supervised learning algorithms such as simple linear regression, the classical multilayer perceptron, and more sophisticated deep convolutional networks. You will also explore image processing: recognition of handwritten digit images, classification of images into different categories, and advanced object recognition with related image annotations. An example of identifying salient points for face detection is also provided. Next, you will be introduced to recurrent networks, which are optimized for processing sequence data such as text, audio, or time series. Following that, you will learn about unsupervised learning algorithms such as autoencoders and the very popular Generative Adversarial Networks (GANs). You will also explore non-traditional uses of neural networks, such as style transfer.

Finally, you will look at reinforcement learning and its application to AI game playing, another popular direction of research and application of neural networks.

Style and Approach

This book is an easy-to-follow guide full of examples and real-world applications to help you gain an in-depth understanding of Keras. It showcases more than twenty working deep neural networks coded in Python using Keras.


Additional Deep Learning Models

- The Keras functional API

- Regression networks

- Autoencoders for unsupervised learning

- Composing complex networks with the functional API

- Customizing Keras

- Generative networks

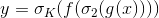

Keras functional API

# Sequential version: a 784 -> 32 -> 10 classifier
from keras.models import Sequential
from keras.layers import Dense, Activation
model = Sequential([
    Dense(32, input_dim=784),   # hidden layer with 32 units
    Activation("sigmoid"),
    Dense(10),                  # one output unit per class
    Activation("softmax"),
])
model.compile(loss="categorical_crossentropy", optimizer="adam")

# The same network written with the functional API
from keras.layers import Input, Dense, Activation
from keras.models import Model
inputs = Input(shape=(784,))
x = Dense(32)(inputs)              # each layer is called on the previous tensor
x = Activation("sigmoid")(x)
x = Dense(10)(x)
predictions = Activation("softmax")(x)
# A Model is defined by its input and output tensors
model = Model(inputs=inputs, outputs=predictions)
model.compile(loss="categorical_crossentropy", optimizer="adam")
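The Sequential and functional definitions above describe the same 784 -> 32 -> 10 network, so they hold the same number of trainable weights. A Dense layer with n inputs and m units stores an n × m weight matrix plus m biases (Keras's default `use_bias=True`), and Activation layers have no weights. The helper `dense_param_count` below is a hypothetical name used only for this arithmetic check. It is not part of Keras.

```python
def dense_param_count(layer_sizes):
    """Count trainable parameters in a stack of fully connected layers.

    layer_sizes is [input_dim, units_1, units_2, ...]. Each Dense layer
    with n inputs and m units has an n x m kernel plus m biases; separate
    Activation layers add no parameters.
    """
    return sum(n * m + m for n, m in zip(layer_sizes, layer_sizes[1:]))


# Hidden layer: 784 * 32 + 32 = 25120; output layer: 32 * 10 + 10 = 330.
print(dense_param_count([784, 32, 10]))  # 25450
```

Calling `model.count_params()` on either Keras model should report the same total of 25,450.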

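# A model can be called like a layer. TimeDistributed applies it, with the same
# weights, to every timestep. Hypothetical input: sequences of 20 vectors of 784 values.
from keras.layers import TimeDistributed
input_sequences = Input(shape=(20, 784))
sequence_predictions = TimeDistributed(model)(input_sequences)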
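Wrapping a model in TimeDistributed runs that one model independently on each timestep, reusing the same weights. A (batch, timesteps, 784) input therefore becomes a (batch, timesteps, 10) output. The framework-free sketch below illustrates only these semantics. `dense`, `softmax`, `time_distributed`, and the toy 3-feature, 2-class weights are hypothetical stand-ins, not Keras API. Keras's own implementation works on batched tensors instead of Python lists.

```python
import math


def dense(x, weights, bias):
    """Fully connected layer: weights[i][j] connects input i to unit j."""
    return [
        sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j]
        for j in range(len(bias))
    ]


def softmax(z):
    """Numerically stable softmax over a list of scores."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def time_distributed(step_fn, batch):
    """Apply step_fn to every timestep of every sequence in the batch.

    Input shape (batch, timesteps, features) -> output shape
    (batch, timesteps, outputs); the same step_fn is used at every step.
    """
    return [[step_fn(x_t) for x_t in sequence] for sequence in batch]


# Hypothetical toy "model": 3 input features -> 2 classes.
W = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.8]]
b = [0.0, 0.1]


def classify(x):
    return softmax(dense(x, W, b))


# Batch of 2 sequences, each with 4 timesteps of 3 features.
batch = [
    [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 0, 0]],
    [[1, 1, 1], [0, 0, 0], [1, 0, 0], [0, 1, 0]],
]
predictions = time_distributed(classify, batch)
print(len(predictions), len(predictions[0]), len(predictions[0][0]))  # 2 4 2
```

The weights are shared, so identical inputs produce identical outputs regardless of which timestep or sequence they appear in.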

- Models with multiple inputs and outputs

- Models composed of multiple submodels

- Models that use shared layers

model = Model(inputs=[input1, input2], outputs=[output1, output2])
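The one-line `Model(...)` call above assumes that `input1`, `input2`, `output1`, and `output2` already exist. Below is a minimal sketch that defines them and also shows a shared layer. It assumes two hypothetical 784-dimensional inputs passed through one shared `Dense(32)` layer, followed by two hypothetical heads: a 10-class softmax and a single regression score. The layer names, sizes, and losses are illustrative choices, not taken from the book.

```python
from keras.layers import Input, Dense, concatenate
from keras.models import Model

# Two hypothetical inputs of the same size.
input1 = Input(shape=(784,))
input2 = Input(shape=(784,))

# One Dense layer object applied to both inputs, so its weights are shared.
shared = Dense(32, activation="sigmoid")
encoded1 = shared(input1)
encoded2 = shared(input2)

# Join the two 32-dim encodings into one 64-dim tensor.
merged = concatenate([encoded1, encoded2])

# Two hypothetical output heads: a classifier and a scalar regressor.
output1 = Dense(10, activation="softmax", name="class_output")(merged)
output2 = Dense(1, name="score_output")(merged)

model = Model(inputs=[input1, input2], outputs=[output1, output2])
# One loss per output, in the same order as `outputs`.
model.compile(optimizer="adam", loss=["categorical_crossentropy", "mse"])
```

Because `shared` is a single layer object, its 25,120 weights (784 × 32 + 32) are counted once even though the layer is applied to both inputs. The model therefore has 25,120 + 650 + 65 = 25,835 parameters in total.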

Regression networks

Table of contents

- Title Page

- Credits

- About the Authors

- About the Reviewer

- www.PacktPub.com

- Customer Feedback

- Preface

- Neural Networks Foundations

- Keras Installation and API

- Deep Learning with ConvNets

- Generative Adversarial Networks and WaveNet

- Word Embeddings

- Recurrent Neural Network — RNN

- Additional Deep Learning Models

- AI Game Playing

- Conclusion