Neural Networks with Keras Cookbook

Over 70 recipes leveraging deep learning techniques across image, text, audio, and game bots

V Kishore Ayyadevara

- 568 pages

- English

- ePUB (mobile friendly)

- Available on iOS and Android

About this book

Implement neural network architectures by building them from scratch for multiple real-world applications.

Key Features

- Build multiple neural network architectures, such as CNN, RNN, and LSTM, from scratch in Keras

- Discover tips and tricks for designing a robust neural network to solve real-world problems

- Move from understanding how neural networks work to mastering the art of fine-tuning them

Book Description

This book will take you from the basics of neural networks to advanced implementations of architectures using a recipe-based approach.

We will learn how neural networks work and how various hyperparameters affect a network's accuracy, and we will apply neural networks to both structured and unstructured data.

Later, we will learn how to classify and detect objects in images. We will also learn to use transfer learning for multiple applications, including a self-driving car application built with convolutional neural networks.

We will generate images using GANs and also by performing image encoding. Additionally, we will perform text analysis using word-vector-based techniques. Later, we will use recurrent neural networks and LSTM to implement chatbot and machine translation systems.

Finally, you will learn to transcribe images and audio, generate captions, and use deep Q-learning to build an agent that plays the Space Invaders game.

By the end of this book, you will have developed the skills to choose and customize multiple neural network architectures for various deep learning problems you might encounter.

What you will learn

- Build multiple advanced neural network architectures from scratch

- Explore transfer learning to perform object detection and classification

- Build self-driving car applications using instance and semantic segmentation

- Understand data encoding for images, text, and recommender systems

- Implement text analysis using sequence-to-sequence learning

- Leverage a combination of CNN and RNN to perform end-to-end learning

- Build agents to play games using deep Q-learning

Who this book is for

This book targets beginner and intermediate-level machine learning practitioners and data scientists who have just started their journey with neural networks. It is for those looking for resources to help them navigate the various neural network architectures; you'll build multiple architectures, with accompanying case studies ordered by the complexity of the problem. A basic understanding of Python programming and familiarity with basic machine learning are all you need to get started.

Text Analysis Using Word Vectors

- Building a word vector from scratch in Python

- Building a word vector using skip-gram and CBOW models

- Performing vector arithmetic using pre-trained word vectors

- Creating a document vector

- Building word vectors using fastText

- Building word vectors using GloVe

- Building sentiment classification using word vectors

Introduction

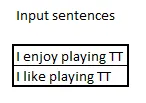

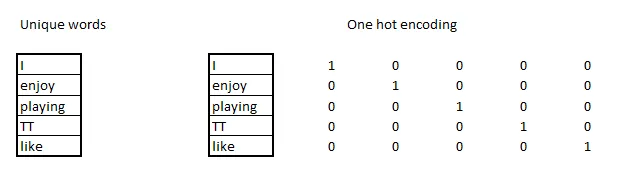

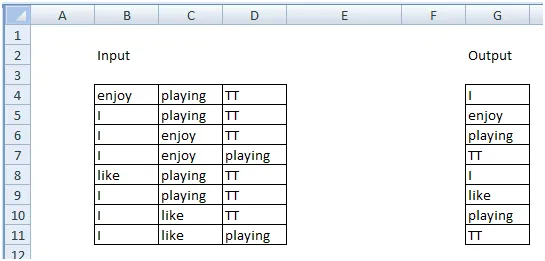

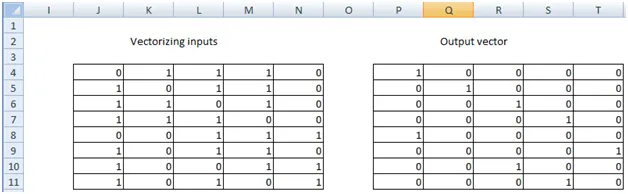

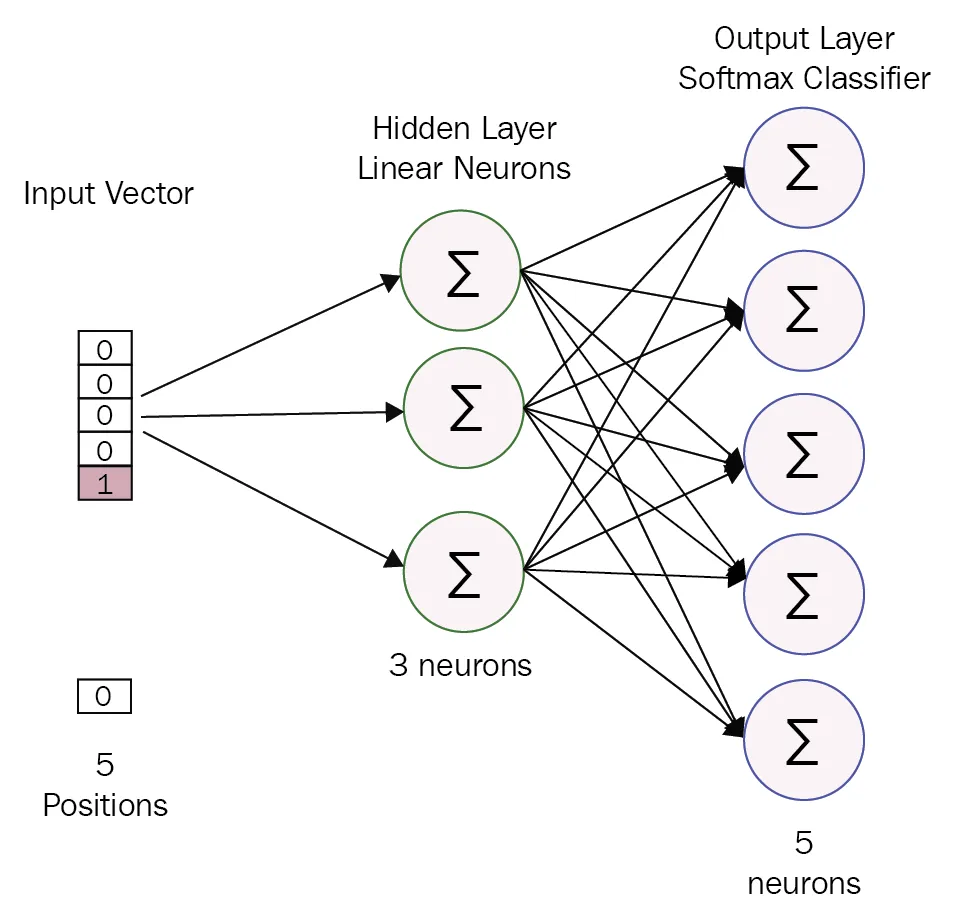

Building a word vector from scratch in Python

Getting ready

| Layer | Shape | Commentary |
| --- | --- | --- |
| Input layer | 1 x 5 | Each row is multiplied by five weights. |
| Hidden layer | 5 x 3 | There are five input weights each to the three neurons in the hidden layer. |
| Output of hidden layer | 1 x 3 | This is the matrix multiplication of the input and the hidden layer. |
| Weights from hidden to output | 3 x 5 | Three output hidden units are mapped to five output columns (as there are five unique words). |
| Output layer | 1 x 5 | This is the matrix multiplication between the output of the hidden layer and the weights from the hidden to the output layer. |
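The shapes in the table can be checked with a minimal NumPy sketch. This is an illustration under stated assumptions, not the book's own code: the variable names, the random weight initialization, the choice of input word index, and the softmax on the output are all assumptions made here, and the hidden layer is taken to be linear because the table describes it as a plain matrix multiplication.

```python
import numpy as np

rng = np.random.default_rng(0)

vocab_size = 5   # five unique words, as in the table
hidden_size = 3  # three neurons in the hidden layer, as in the table

# One-hot encoded input word, shape 1 x 5.
# The index (2) is an arbitrary choice for illustration.
word_index = 2
x = np.zeros((1, vocab_size))
x[0, word_index] = 1.0

# Randomly initialized weights (assumption: no training is shown here).
w_in = rng.normal(size=(vocab_size, hidden_size))    # 5 x 3
w_out = rng.normal(size=(hidden_size, vocab_size))   # 3 x 5

# Forward pass: two matrix multiplications.
hidden = x @ w_in        # 1 x 3, output of the hidden layer
logits = hidden @ w_out  # 1 x 5, one score per word in the vocabulary

# Assumption: a softmax turns the scores into a probability per word.
exp = np.exp(logits - logits.max(axis=1, keepdims=True))
probs = exp / exp.sum(axis=1, keepdims=True)

# Because the input is one-hot, the hidden output is just one row of w_in.
# That row is the three-dimensional vector for the input word.
word_vector = w_in[word_index]

print(hidden.shape, logits.shape, probs.shape)
```

Since the input is one-hot, multiplying it by the 5 x 3 weight matrix simply selects one row, which is why the rows of that matrix can be read as the word vectors once the network has been trained.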

Table of contents

- Title Page

- Copyright and Credits

- Dedication

- About Packt

- Contributors

- Preface

- Building a Feedforward Neural Network

- Building a Deep Feedforward Neural Network

- Applications of Deep Feedforward Neural Networks

- Building a Deep Convolutional Neural Network

- Transfer Learning

- Detecting and Localizing Objects in Images

- Image Analysis Applications in Self-Driving Cars

- Image Generation

- Encoding Inputs

- Text Analysis Using Word Vectors

- Building a Recurrent Neural Network

- Applications of a Many-to-One Architecture RNN

- Sequence-to-Sequence Learning

- End-to-End Learning

- Audio Analysis

- Reinforcement Learning

- Other Books You May Enjoy