Visual Perception

Visual perception refers to the process by which the brain interprets and makes sense of visual information received from the eyes. It involves the organization, identification, and interpretation of visual stimuli to understand the surrounding environment. This process encompasses various aspects such as depth perception, color perception, and motion perception.

Written by Perlego with AI-assistance

9 Key excerpts on "Visual Perception"

- eBook - PDF

Cognitive Psychology

A Student's Handbook

- Michael W. Eysenck, Mark T. Keane(Authors)

- 2020(Publication Date)

- Psychology Press(Publisher)

Visual Perception and attention

What is “perception”? According to Twedt and Parfitt (2018, p. 1), “Perception is the study of how sensory information is processed into perceptual experience . . . all senses share the common goal of picking up sensory information from the external environment and processing that information into a perceptual experience.” Our main emphasis in this section of the book is on visual perception, which is of enormous importance in our everyday lives. It allows us to move around freely, to see other people, to read magazines and books, to admire the wonders of nature, to play sports and to watch movies and television. It also helps to ensure our survival. If we misperceive how close cars are to us as we cross the road, the consequences could be fatal. Unsurprisingly, far more of the cortex (especially the occipital lobes at the back of the head) is devoted to vision than to any other sensory modality. Visual perception seems so simple and effortless, we typically take it for granted. In fact, however, it is very complex, and numerous processes transform and interpret sensory information. Relevant evidence comes from researchers in artificial intelligence who tried to program computers to “perceive” the environment. In spite of their best efforts, no computer can match more than a fraction of the skills of visual perception that we possess. For example, humans are much better than computer programs when deciphering distorted interconnected characters (commonly known as CAPTCHAs) to gain access to an internet website. There is a rapidly growing literature on visual perception (especially from the cognitive neuroscience perspective). The next three chapters provide detailed coverage of the main issues. Chapter 2 focuses on basic processes in visual perception with an emphasis on the great advances made in understanding the underlying brain mechanisms.

- eBook - PDF

Interactive Data Visualization

Foundations, Techniques, and Applications, Second Edition

- Matthew O. Ward, Georges Grinstein, Daniel Keim(Authors)

- 2015(Publication Date)

- A K Peters/CRC Press(Publisher)

CHAPTER 3 Human Perception and Information Processing

This chapter deals with human perception and the different ways in which graphics and images are seen and interpreted. The early approach to the study of perception focused on the vision system and its capabilities. Later approaches looked at cognitive issues and recognition. We discuss each approach in turn and provide details. Significant parts of this chapter, including many of the figures, are based on the work of Christopher G. Healey (http://www.csc.ncsu.edu/faculty/healey/PP/index.html) [174], who has kindly granted permission for their reuse in this book.

3.1 What Is Perception?

We know that humans perceive data, but we are not as sure of how we perceive. We know that visualizations present data that is then perceived, but how are these visualizations perceived? How do we know that our visual representations are not interpreted differently by different viewers? How can we be sure that the data we present is understood? We study perception to better control the presentation of data, and eventually to harness human perception. There are many definitions and theories of perception. Most define perception as the process of recognizing (being aware of), organizing (gathering and storing), and interpreting (binding to knowledge) sensory information. Perception deals with the human senses that generate signals from the environment through sight, hearing, touch, smell, and taste. Vision and audition are the most well understood. Simply put, perception is the process by which we interpret the world around us, forming a mental representation of the environment.

Figure 3.1. Two seated figures, making sense at a higher, more abstract level, but still disturbing. On closer inspection, these seats are not realizable. (Image courtesy N. Yoshigahara.)

- eBook - PDF

- Andrew Fabian, Janet Gibson, Mike Sheppard, Simone Weyand(Authors)

- 2021(Publication Date)

- Cambridge University Press(Publisher)

These percepts may occur in the context of healthy processing, as, for example, in sensory deprivation, during which hallucinations may be strong and vivid. They may also occur in the setting of disturbances to our cognitive or neural responses, as, for example, with drugs or neuropsychiatric impairment. By understanding the normal role and function of the visual system, and how it represents reality on the basis of its inputs, we may come to understand visions and to see them not as incomprehensible dislocations from reality and rationality, but as the products of a highly constructive and creative system that has been altered, unbalanced, or disturbed in some way. In this chapter, therefore, I examine visual perception and consider the ways in which it may be perturbed. I shall begin by considering the idea, one that emerged in the 1940s and 1950s, in cybernetics and information theory, of the need for an agent to construct a model of its world in order to interact with it fruitfully and optimally. I then consider the necessity that perception is a process not merely of passive reception but of inference. Crucially, it is inference that draws on the predictability in the signal and requires that the organism carries within it some prior knowledge of the world: a prior knowledge that can be drawn upon to enhance efficiency and resolve ambiguity. I discuss the ways in which this principle – using prior knowledge to make predictions – may be enacted within the brain and I then apply these ideas to the central question: what might cause a person to experience visions?

[Running head: Paul Fletcher]

Starting Ideas: Cybernetics, Models and Prediction

Before considering key principles of perception, it is worth thinking briefly and broadly about brain processes more generally. Across current cognitive neuroscience, one discerns a pervasive influence of the ideas of the mid-twentieth-century cyberneticists [2].

- eBook - PDF

- Koen Lamberts, Rob Goldstone(Authors)

- 2004(Publication Date)

- SAGE Publications Ltd(Publisher)

Human visual perception starts with two-dimensional (2-D) arrays of light falling on our retinae. The task of visual perception is to enable us to use the information provided in the array of light in order to react appropriately to the objects surrounding us. One way to try and view the process of vision is to divide the problem into three parts: first, how the visual information falling on the retinae is encoded; second, how it is represented; and finally, how it is interpreted. The next chapter, ‘Visual Perception II: High-Level Vision’, speaks more directly to the third issue; that is, how visual signals of objects are interpreted and represented (the two are obviously connected). In the first half of this chapter we are mainly concerned with the early stages of visual processing: How is information encoded and extracted in the first place? Can we find general, basic principles according to which our visual system appears to function, both in how it encodes and represents visual information? And, if so, can we find, from an engineering or information-theoretic point of view, reasons why the visual system may be organized the way we think it is? The second half of this chapter looks at ways of going beyond the first encoding of visual information. What additional processing is required? What experimental findings do we need to accommodate? Both the latest insights from neurophysiological research as well as classic findings from psychophysics will be reviewed that speak to issues going beyond early vision. With half our brain devoted to visual areas, vision is clearly our most important sense. We usually know about the world, where objects are, what they are, how they move, and how we can manipulate them, through vision. Because we are so incredibly good at perceiving the world through vision, and because vision usually feels effortless, the visual world is often almost treated as a ‘given’.

- eBook - ePub

- Terry McMorris(Author)

- 2014(Publication Date)

- Wiley(Publisher)

The organization, integration and interpretation of sensory information is thought to take place primarily in the prefrontal cortex, but it draws upon the sensory information held in the specific sensory areas of the cortex and information from LTM contained in several areas of the brain. It should, therefore, be of no surprise to find that fMRI and PET studies have shown considerable activation of the prefrontal cortex during perceptual tasks. The parietal cortex has also been shown to play a role in perception and is particularly active in tasks where the individual switches attention from one part of the display to another, for example, a defender in hockey switching between attending to her/his immediate opponent and the runs of other attackers.

Definition of perception

Based on the above, we can define perception, according to information processing theory, as being the organization, interpretation and integration of sensory information. Kerr (1982) provides a similar definition but includes the word ‘conscious’. Although information processing theorists would argue that, most of the time, perception is a conscious process, recent research on learning and anticipation has shown that it can take place at a subconscious level.

Signal detection theory

As information processing theorists claim that perception is inferred, a number of theories have been developed to explain different aspects of the cognitive processes taking place. One of the first theories was Swets’ (1964; Swets and Green, 1964) signal detection theory. Swets realized that people live in an environment that is full of sensory information. He reckoned that the individual receives over 100 000 signals per second. These may be signals from the environment and/or from within the person themselves. Sport provides many examples of this and the problems it can cause. Think of a tennis player about to serve in a game on Centre Court at Wimbledon. What kinds of signals do you think the player will be receiving visually and auditorally? What kinds of internal signals might the player be receiving: e.g. will I win, will I play well? The problem facing Swets was how to explain how anyone can recognize relevant information against this background of signals, which he termed ‘noise

- eBook - PDF

- Hugh Davson(Author)

- 2012(Publication Date)

- Academic Press(Publisher)

We may begin by illustrations of the perceptual process, as revealed by psychophysical studies, and later we shall consider some electrophysiological studies that illustrate the possible neural basis for some of these higher integrative functions.

THE PERCEPTUAL PATTERN

Essentially we shall be concerned with the modes in which visual sensations are interpreted; the eyes may gaze at an assembly of objects and we know that a fairly accurate image of the assembly is formed on the retinae of the two eyes, and this is 'transferred' by a 'point-to-point' projection to the occipital cortex. This is the basis for the visual sensation evoked by the objects but there is a large variety of evidence, derived from everyday experience, which tells us that the final awareness of the assembly of objects involves considerably more nervous activity than a mere point-to-point projection of the retina on the cortex would require. As a result of this activity we appreciate a perceptual pattern; the primary visual sensation, resulting from stimulating the visual cortex, is integrated with sensations from other sources presented simultaneously and, more important still, with the memory of past experience. For example, a subject looks at a box; a plane image is produced on each retina which may be represented as a number of lines, but the perceptual pattern evoked in the subject's mind is something much more complex. As a result of past experience the lines are interpreted as edges orientated in different directions; the light and shade and many other factors to be discussed later are all interpreted in accordance with experience; its hardness is remembered and its uses, and the original

HIGHER INTEGRATIVE ACTIVITY

INADEQUATE REPRESENTATION

Schroeder's staircase (Fig. 22.1) shows how the same visual stimulus may evoke different meanings in the same subject; to most people the first impression created by Figure 22.1 is that of a staircase; on gazing at it Fig.

- John Weinman(Author)

- 2013(Publication Date)

- Butterworth-Heinemann(Publisher)

Such conclusions are essentially speculative but it is also known that patients with damage to the inferotemporal regions may have disorders of visual recognition in which they may be able to see all the components of a stimulus but still not be able to recognize it (visual object agnosia). The visual experiences of these patients have been described as percepts stripped of their meaning. This raises the critical question as to how we make sense of visual images and are able to know with great accuracy what information is present. This process of assigning meaning to a visual image has been a preoccupation of psychologists studying perception and this is the next approach to be considered.

[Running head: Human information processing]

b. Psychological aspects of pattern recognition

The way in which our visual systems are able to combine all the elements of a visual stimulus into meaningful units was extensively studied by the Gestalt school of psychology which flourished in the earlier part of this century. They demonstrated that there were certain basic organizational tendencies in perception which make the parts of the visual world form the units which we are then able to recognize as complete objects (e.g. the Dalmatian in Fig. 3). They were able to demonstrate that if components of an image were close to each other (proximity) or had a similar texture or colour (similarity) then those components were automatically grouped together. They also showed that the overriding tendency of the visual system to form perceptual units in this way was facilitated if the figures were simple and regular (goodness). Once this initial organization has taken place then the perceived objects have an immediate salience or presence against an undifferentiated background (figure/ground resolution). These Gestalt principles of early perceptual organization have remained influential although current theories of these early pattern recognition processes are much more complex.

- eBook - PDF

Displays

Fundamentals & Applications, Second Edition

- Rolf R. Hainich, Oliver Bimber(Authors)

- 2016(Publication Date)

- A K Peters/CRC Press(Publisher)

In this chapter, we will give an overview of the most important aspects of human visual perception insofar as they concern display technology. We will start with an outline of the human visual system in Section 4.2, and its essential sensory properties, including resolution, contrast, dynamic range, and temporal response. Section 4.3 at page 97 will explain the basics of colorimetry, which is the science of color perception, measurement, and representation, and its relationships to various color models being used in displays. One major topic in this book is 3D displays (Chapters 5 and 9); Section 4.4 at page 107 summarizes various depth cues that are more or less relevant for depth perception. Later, we will see that most displays will support only a subset of these depth cues, which of course affects depth perception with regards to 3D displays. Finally in Section 4.5 at page 119 we will explain certain perception pitfalls for motion picture creation and display and common solutions that are implemented in consumer displays. The appendix at the end of this book provides an additional coverage of several aspects of human perception, in the context of perceptive display calibration.

4.2 THE HUMAN VISUAL SYSTEM

In the following sections, we will briefly review the most important sensory properties of the visual perception system that are relevant to the design of many display systems and we will present their driving algorithms.

4.2.1 The Eye as an Optical System

The human eye is a complex optical system, resembling, in principle, a camera but built rather differently (Figure 4.1). Its diameter is about 25 mm and it is filled with two different fluids (both having a refraction index of ≈1.336). The iris regulates the amount of incident light by expanding or shrinking, changing the eye’s aperture.

- eBook - PDF

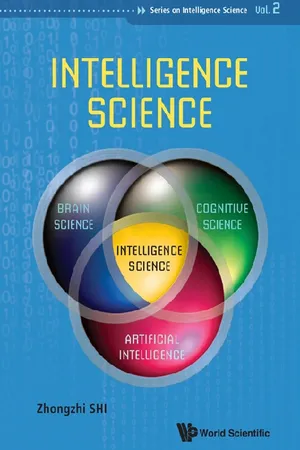

- Zhongzhi Shi(Author)

- 2012(Publication Date)

- World Scientific(Publisher)

This kind of representation divided by the feeling passway, just have a relative meaning. For most people (including musicians, painters), feeling representation all has the mixed properties. In people’s activity, generally speaking, we do not have single visual representation and single hearing or movement representation, but often the mixing of different representation, because people should always use various sense organs when they perceive things. Only in a relative sense, we can say that some people’s visual representation is a bit more developed, while others’ hearing representation is a bit more developed. The form is a mental process with particular characteristics. It is noticeable in the early development of modern psychology. But as behaviorism psychology dominated, representation had begun to become quiet in the twenties of the 20th century. After cognitive psychology rises, the research of representation is paid attention to and developed rapidly again, and the achievement is very abundant too. The research of cognitive psychology on “the psychological rotation” and “the psychological scanning” also makes attractive achievement. In the psychological consultation and psychotherapy, the function of representation also plays an important role.

[Running head: Intelligence Science]

5.7 Attention in the Perceptual Cognition

Attention is the status of psychological activity or perception at a certain moment. It is shown as direction or centralization of certain target. People can control one’s own attention direction consciously most of the time. Attention has two obvious characteristics: directivity and centrality. The directivity of attention means people have chosen a certain target in psychological activity or perception in a moment, and has neglected other targets. In the boundless universe, a large amount of information act on us all the time, but we unable to make reflection to all information, and can only direct our perception to something.

Index pages curate the most relevant extracts from our library of academic textbooks. They’ve been created using an in-house natural language model (NLM), each adding context and meaning to key research topics.