Machine Learning for OpenCV

Michael Beyeler

- 382 pages

- English

- ePUB (available in the app)

- Available on iOS and Android

About the book

Expand your OpenCV knowledge and master key concepts of machine learning using this practical, hands-on guide.

About This Book

- Load, store, edit, and visualize data using OpenCV and Python

- Grasp the fundamental concepts of classification, regression, and clustering

- Understand, perform, and experiment with machine learning techniques using this easy-to-follow guide

- Evaluate, compare, and choose the right algorithm for any task

Who This Book Is For

This book targets Python programmers who are already familiar with OpenCV; this book will give you the tools and understanding required to build your own machine learning systems, tailored to practical real-world tasks.

What You Will Learn

- Explore and make effective use of OpenCV's machine learning module

- Learn deep learning for computer vision with Python

- Master linear regression and regularization techniques

- Classify objects such as flower species, handwritten digits, and pedestrians

- Explore the effective use of support vector machines, boosted decision trees, and random forests

- Get acquainted with neural networks and Deep Learning to address real-world problems

- Discover hidden structures in your data using k-means clustering

- Get to grips with data pre-processing and feature engineering

In Detail

Machine learning is no longer just a buzzword, it is all around us: from protecting your email, to automatically tagging friends in pictures, to predicting what movies you like. Computer vision is one of today's most exciting application fields of machine learning, with Deep Learning driving innovative systems such as self-driving cars and Google's DeepMind.

OpenCV lies at the intersection of these topics, providing a comprehensive open-source library for classic as well as state-of-the-art computer vision and machine learning algorithms. In combination with Python Anaconda, you will have access to all the open-source computing libraries you could possibly ask for.

Machine learning for OpenCV begins by introducing you to the essential concepts of statistical learning, such as classification and regression. Once all the basics are covered, you will start exploring various algorithms such as decision trees, support vector machines, and Bayesian networks, and learn how to combine them with other OpenCV functionality. As the book progresses, so will your machine learning skills, until you are ready to take on today's hottest topic in the field: Deep Learning.

By the end of this book, you will be ready to take on your own machine learning problems, either by building on the existing source code or developing your own algorithm from scratch!

Style and approach

OpenCV machine learning connects the fundamental theoretical principles behind machine learning to their practical applications in a way that focuses on asking and answering the right questions. This book walks you through the key elements of OpenCV and its powerful machine learning classes, while demonstrating how to get to grips with a range of models.

Information

Using Deep Learning to Classify Handwritten Digits

- How do I implement perceptrons and multilayer perceptrons in OpenCV?

- What is the difference between stochastic and batch gradient descent, and how does it fit in with backpropagation?

- How do I know what size my neural net should be?

- How can I use Keras to build sophisticated deep neural networks?
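The second question above contrasts two update schedules. As a rough illustration (not code from the book), the sketch below fits a one-feature linear model with both: batch gradient descent makes one update per pass using the gradient averaged over all samples, while stochastic gradient descent updates after every single sample. In a neural network, backpropagation would supply these gradients; here they are written out by hand. The function names, learning rate, epoch count, and toy data are all assumptions chosen for the example.

```python
import random


def batch_gd(xs, ys, lr=0.1, epochs=2000):
    """Batch gradient descent: one update per epoch from the averaged gradient."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of 0.5 * mean squared error, averaged over the full data set.
        grad_w = sum((w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum((w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def stochastic_gd(xs, ys, lr=0.1, epochs=2000, seed=0):
    """Stochastic gradient descent: one update per sample, in shuffled order."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            # Gradient of 0.5 * squared error for a single sample.
            err = w * xs[i] + b - ys[i]
            w -= lr * err * xs[i]
            b -= lr * err
    return w, b


# Toy data (illustrative only): points lying exactly on y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w_batch, b_batch = batch_gd(xs, ys)
w_sgd, b_sgd = stochastic_gd(xs, ys)
print("batch:     ", round(w_batch, 3), round(b_batch, 3))
print("stochastic:", round(w_sgd, 3), round(b_sgd, 3))
```

On noise-free data like this, both schedules approach the same weights. With noisy data, the stochastic updates would typically fluctuate around the solution unless the learning rate is reduced over time.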

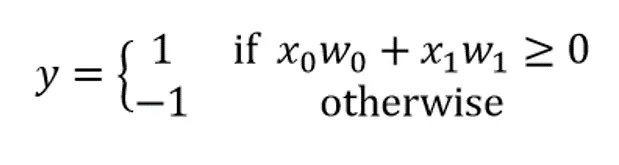

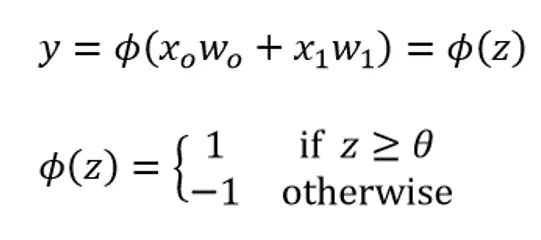

Understanding the McCulloch-Pitts neuron

- Training data: Unsurprisingly, we need data samples against which the effectiveness of our classifier can be verified.

- Cost function (also known as loss function): A cost function provides a measure of how good the current weight coefficients are. There is a wide range of cost functions available, which we will talk about towards the end of this chapter. One solution is to count the number of misclassifications. Another one is to calculate the su...
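The first cost function mentioned above, counting misclassifications, can be sketched in a few lines. This is a minimal illustration rather than the book's implementation: it uses a simple weighted threshold unit in the spirit of the McCulloch-Pitts neuron, and the function names, weights, thresholds, and the logical-AND data set are assumptions made for the example.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def misclassification_count(samples, labels, weights, threshold):
    """Cost: the number of samples whose predicted label differs from the target."""
    return sum(
        threshold_neuron(x, weights, threshold) != y
        for x, y in zip(samples, labels)
    )


# Illustrative training data: the logical AND of two binary inputs.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]

# A threshold of 2 fires only when both inputs are on, which matches AND.
good_cost = misclassification_count(samples, labels, weights=(1, 1), threshold=2)

# A threshold of 1 behaves like OR, so the two mixed inputs are misclassified.
bad_cost = misclassification_count(samples, labels, weights=(1, 1), threshold=1)

print(good_cost, bad_cost)
```

A lower count means the current weights and threshold fit the training data better, which is the sense in which a cost function measures how good the weight coefficients are.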

Table of contents

- Title Page

- Copyright

- Credits

- Foreword

- About the Author

- About the Reviewers

- www.PacktPub.com

- Customer Feedback

- Dedication

- Preface

- A Taste of Machine Learning

- Working with Data in OpenCV and Python

- First Steps in Supervised Learning

- Representing Data and Engineering Features

- Using Decision Trees to Make a Medical Diagnosis

- Detecting Pedestrians with Support Vector Machines

- Implementing a Spam Filter with Bayesian Learning

- Discovering Hidden Structures with Unsupervised Learning

- Using Deep Learning to Classify Handwritten Digits

- Combining Different Algorithms into an Ensemble

- Selecting the Right Model with Hyperparameter Tuning

- Wrapping Up