Hands-On Meta Learning with Python

Meta learning using one-shot learning, MAML, Reptile, and Meta-SGD with TensorFlow

Sudharsan Ravichandiran

- 226 pages

- English

- ePUB (available in the app)

- Available on iOS and Android

About this book

Explore a diverse set of meta-learning algorithms and techniques to enable human-like cognition for your machine learning models using various Python frameworks

Key Features

- Understand the foundations of meta learning algorithms

- Work through practical examples of various one-shot learning algorithms and their applications in TensorFlow

- Master state-of-the-art meta learning algorithms such as MAML, Reptile, and Meta-SGD

Book Description

Meta learning is an active research area in machine learning that enables a model to learn how to learn. Unlike other ML paradigms, meta learning lets a model learn quickly from small datasets.

Hands-On Meta Learning with Python starts by explaining the fundamentals of meta learning and helps you understand the concept of learning to learn. You will delve into various one-shot learning algorithms, such as siamese, prototypical, relation, and memory-augmented networks, by implementing them in TensorFlow and Keras. As you make your way through the book, you will dive into state-of-the-art meta learning algorithms such as MAML, Reptile, and CAML. You will then explore how to learn quickly with Meta-SGD and discover how to perform unsupervised learning using meta learning with CACTUs. In the concluding chapters, you will work through recent trends in meta learning such as adversarial meta learning, task agnostic meta learning, and meta imitation learning.

By the end of this book, you will be familiar with state-of-the-art meta learning algorithms and be able to bring human-like cognition to your machine learning models.

What you will learn

- Understand the basics of meta learning methods, algorithms, and types

- Build voice and face recognition models using a siamese network

- Learn the prototypical network along with its variants

- Build relation networks and matching networks from scratch

- Implement MAML and Reptile algorithms from scratch in Python

- Work through imitation learning and adversarial meta learning

- Explore task agnostic meta learning and deep meta learning

Who this book is for

Hands-On Meta Learning with Python is for machine learning enthusiasts, AI researchers, and data scientists who want to explore meta learning as an advanced approach for training machine learning models. Working knowledge of machine learning concepts and Python programming is necessary.

MAML and Its Variants

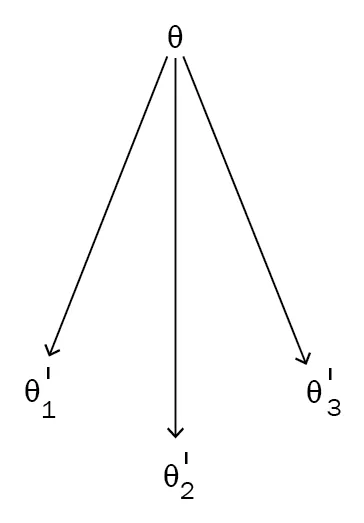

- MAML

- MAML algorithm

- MAML in supervised and reinforcement learning settings

- Building MAML from scratch

- ADML

- Building ADML from scratch

- CAML

MAML

Table of contents

- Title Page

- Copyright and Credits

- Dedication

- About Packt

- Contributors

- Preface

- Introduction to Meta Learning

- Face and Audio Recognition Using Siamese Networks

- Prototypical Networks and Their Variants

- Relation and Matching Networks Using TensorFlow

- Memory-Augmented Neural Networks

- MAML and Its Variants

- Meta-SGD and Reptile

- Gradient Agreement as an Optimization Objective

- Recent Advancements and Next Steps

- Assessments

- Other Books You May Enjoy