
Chapter 1

Introduction and Role of Artificial Neural Networks

Artificial neural networks are, as their name indicates, computational networks which attempt to simulate, in a gross manner, the decision process in networks of nerve cells (neurons) of the biological (human or animal) central nervous system. This simulation is a gross cell-by-cell (neuron-by-neuron, element-by-element) simulation. It borrows from the neurophysiological knowledge of biological neurons and of networks of such biological neurons. It thus differs from conventional (digital or analog) computing machines that serve to replace, enhance or speed up human brain computation without regard to the organization of the computing elements and of their networking. Still, we emphasize that the simulation afforded by neural networks is very gross.

Why then should we view artificial neural networks (denoted below as neural networks or ANNs) as more than an exercise in simulation? We must ask this question especially since, computationally (at least), a conventional digital computer can do everything that an artificial neural network can do.

The answer lies in two aspects of major importance. The neural network, by simulating a biological neural network, is in fact a novel computer architecture and a novel algorithmization architecture relative to conventional computers. It allows using very simple computational operations (addition, multiplication and fundamental logic elements) to solve complex, mathematically ill-defined problems, nonlinear problems or stochastic problems. A conventional algorithm will employ complex sets of equations, and will apply to only a given problem and exactly to it. The ANN will (a) be computationally and algorithmically very simple and (b) have a self-organizing feature that allows it to hold for a wide range of problems.

For example, if a house fly avoids an obstacle or if a mouse avoids a cat, it certainly solves no differential equations on trajectories, nor does it employ complex pattern recognition algorithms. Its brain is very simple, yet it employs a few basic neuronal cells that fundamentally obey the structure of such cells in advanced animals and in man. The artificial neural network’s solution will also aim at such (most likely not the same) simplicity. Albert Einstein stated that a solution or a model must be as simple as possible to fit the problem at hand. Biological systems, in order to be as efficient and as versatile as they certainly are despite their inherent slowness (their basic computational step takes about a millisecond versus less than a nanosecond in today’s electronic computers), can only do so by converging to the simplest algorithmic architecture that is possible. Whereas high level mathematics and logic can yield a broad general frame for solutions and can be reduced to specific but complicated algorithmization, the neural network’s design aims at utmost simplicity and utmost self-organization. A very simple base algorithmic structure lies behind a neural network, but it is one which is highly adaptable to a broad range of problems. We note that at the present state of neural networks their range of adaptability is limited. However, their design is guided to achieve this simplicity and self-organization by its gross simulation of the biological network that is (must be) guided by the same principles.

Another aspect of ANNs that differs from conventional computers, and is at least potentially advantageous over them, is their high parallelism (element-wise parallelism). A conventional digital computer is a sequential machine. If one transistor (out of many millions) fails, then the whole machine comes to a halt. In the adult human central nervous system, thousands of neurons die each year, yet brain function is totally unaffected, except when cells at a very few key locations die in very large numbers (e.g., major strokes). This insensitivity to damage to a few cells is due to the high parallelism of biological neural networks, in contrast to the sequential design of conventional digital computers (or of analog computers, in case of damage to a single operational amplifier or disconnection of a resistor or wire). The same redundancy feature applies to ANNs. However, since most ANNs are presently still simulated on conventional digital computers, this insensitivity to component failure does not hold for them. Still, there is an increasing availability of ANN hardware in the form of integrated circuits consisting of hundreds and even thousands of ANN neurons on a single chip [cf. Jabri et al., 1996, Hammerstrom, 1990, Haykin, 1994]. In that case, the latter feature of ANNs does hold.

Furthermore, the development of Deep-Learning neural networks since the early 1990s resulted in a quantum jump of interest in neural networks and made them a major tool in a broad range of applications of artificial intelligence (AI) and Machine Learning (ML). Such networks (especially Convolutional neural networks) are presently the prime method for any application of information technology to image or speech recognition and retrieval problems. A multitude of other applications are rapidly being developed, ranging from medicine to finance and beyond. Deep learning neural networks are based on the principles and the basic structures of the earlier neural networks described below and compare very favorably (see Chap. 16) with other deep-learning methods in accuracy and in computational speed.

The excitement in ANNs should not be limited to its attempted resemblance to the decision processes in the human brain. Even its degree of self-organizing capability can be built into conventional digital computers using complicated artificial intelligence algorithms. The main contribution of ANNs is that, in its gross imitation of the biological neural network, it allows for very low level programming to allow solving complex problems, especially those that are non-analytical and/or nonlinear and/or nonstationary and/or stochastic, and to do so in a self-organizing manner that applies to a wide range of problems with no re-programming or other interference in the program itself. The insensitivity to partial hardware failure is another great attraction, but only when dedicated ANN hardware is used.

It is becoming widely accepted that the advent of ANN provides new and systematic architectures towards simplifying the programming and algorithm design for a given end and for a wide range of ends. It should bring attention to the simplest algorithm without, of course, dethroning advanced mathematics and logic, whose role will always be supreme in mathematical understanding and which will always provide a systematic basis for eventual reduction to specifics.

What is always amazing to many students and to myself is that after six weeks of class, first year engineering and computer science graduate students of widely varying backgrounds with no prior background in neural networks or in signal processing or pattern recognition, were able to solve, individually and unassisted, problems of speech recognition, of pattern recognition and character recognition, which could adapt in seconds or in minutes to changes (within a range) in pronunciation or in pattern. They would, by the end of the one-semester course, all be able to demonstrate these programs running and adapting to such changes, using PC simulations of their respective ANNs. My experience is that the study time and the background to achieve the same results by conventional methods by far exceeds that achieved with ANNs.

This demonstrates the degree of simplicity and generality afforded by ANNs, and therefore their potential.

Obviously, if one is to solve a set of well-defined deterministic differential equations, one would not use an ANN, just as one will not ask the mouse or the cat to solve it. But problems of recognition, diagnosis, filtering, prediction and control would be problems suited for ANNs.

All the above indicate that artificial neural networks are very suitable to solve problems that are complex, ill-defined, highly nonlinear, of many and different variables, and/or stochastic. Such problems are abundant in medicine, in finance, in security and beyond, namely problems of major interest and importance. Several of the case studies appended to the various chapters of this text are intended to give the reader a glimpse into such applications and into their realization.

Obviously, no discipline can be expected to do everything. Moreover, ANNs are certainly still in their infancy. They started in the 1950s, and widespread interest in them dates from the early 1980s. Still, we can state that, by now, ANNs serve an important role in many aspects of decision theory, information retrieval, prediction, detection, machine diagnosis, control, data-mining and related areas and in their applications to numerous fields of human endeavor.


Chapter 2

Fundamentals of Biological Neural Networks

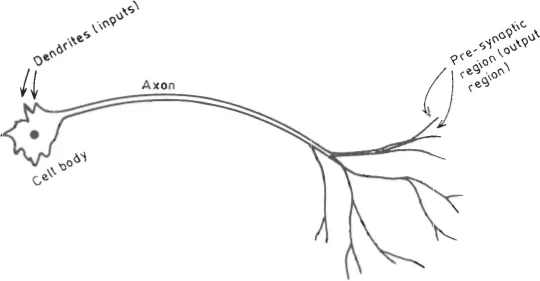

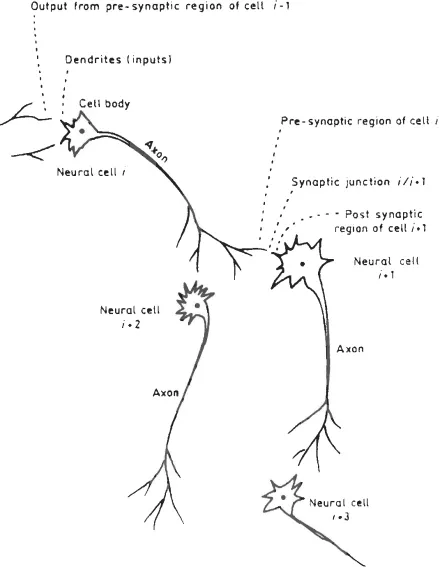

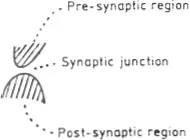

The biological neural network consists of nerve cells (neurons) as in Fig. 2.1, which are interconnected as in Fig. 2.2. The cell body of the neuron, which includes the neuron’s nucleus, is where most of the neural “computation” takes place. Neural activity passes from one neuron to another in terms of electrical triggers which travel from one cell to the other down the neuron’s axon, by means of an electrochemical process of voltage-gated ion exchange along the axon and of diffusion of neurotransmitter molecules through the membrane over the synaptic gap (Fig. 2.3). The axon can be viewed as a connection wire. However, the mechanism of signal flow is not electrical conduction but charge exchange that is transported by diffusion of ions. This transportation process moves along the neuron’s cell, down the axon and then through synaptic junctions at the end of the axon, across a very narrow synaptic space, to the dendrites and/or soma of the next neuron at an average rate of 3 m/sec, as in Fig. 2.3.

Fig. 2.1. A biological neural cell (neuron).

Fig. 2.2. Interconnection of biological neural nets.

Fig. 2.3. Synaptic junction — detail (of Fig. 2.2).

Figures 2.1 and 2.2 indicate that since a given neuron may have several (hundreds of) synapses, a neuron can connect (pass its message/signal) to many (hundreds of) other neurons. Similarly, since there are many dendrites per each neuron, a single neuron can receive messages (neural signals) from many other neurons. In this manner, the biological neural network interconnects [Ganong, 1973].

It is important to note that not all interconnections are equally weighted. Some have a higher priority (a higher weight) than others. Also, some are excitatory and some are inhibitory (serving to block transmission of a message). These differences are effected by differences in chemistry and by the existence of chemical transmitter and modulating substances inside and near the neurons, the axons and in the synaptic junction. This nature of interconnection between neurons and weighting of messages is also fundamental to artificial neural networks (ANNs).

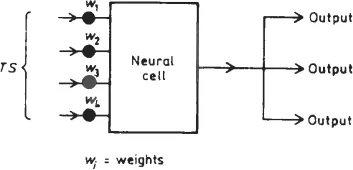

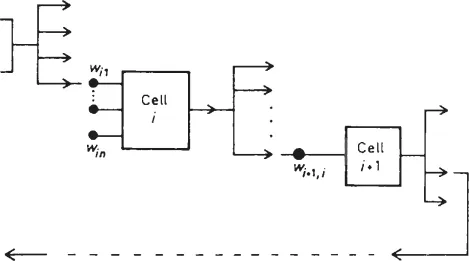

A simple analog of the neural element of Fig. 2.1 is as in Fig. 2.4. In that analog, which is the common building block (neuron) of every artificial neural network, we observe the differences in weighting of messages at the various interconnections (synapses) as mentioned above. Analogs of cell body, dendrite, axon and synaptic junction of the biological neuron of Fig. 2.1 are indicated in the appropriate parts of Fig. 2.4. The biological network of Fig. 2.2 thus becomes the network of Fig. 2.5.

Fig. 2.4. Schematic analog of a biological neural cell.

Fig. 2.5. Schematic analog of a biological neural network.
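The weighted building block described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: the function name `neuron_output`, the hard-threshold firing rule, the use of a negative weight to stand for an inhibitory connection, and all numeric values are hypothetical choices made here and are not taken from the text or from Fig. 2.4.

```python
def neuron_output(inputs, weights, threshold=0.0):
    """Return 1 if the weighted sum of the inputs reaches the threshold, else 0.

    Assumptions (not from the text): a hard threshold decides firing, and a
    negative weight stands for an inhibitory connection.
    """
    # Each input is scaled by the weight of its interconnection (synapse),
    # then all weighted inputs are summed in the "cell body".
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum >= threshold else 0


# Hypothetical weights: two excitatory inputs and one inhibitory input.
weights = [0.8, 0.5, -1.0]

# Only the excitatory inputs are active: 0.8 + 0.5 reaches the threshold.
fires = neuron_output([1, 1, 0], weights, threshold=1.0)

# The inhibitory input is also active and pulls the sum below the threshold.
blocked = neuron_output([1, 1, 1], weights, threshold=1.0)
```

Under these assumed values, the neuron fires when only the two excitatory inputs are active and stays silent once the inhibitory input is added, which mirrors the unequal weighting and the excitatory/inhibitory distinction noted for biological interconnections.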

The details of the diffusion process and of charge∗ (signal) propagation along the axon are well documented elsewhere [Katz, 1966]. These are beyond the scope of this text and do not affect the design or the understanding of artificial neural networks, where electrical conduction takes place rather than diffusion of positive and negative ions.

This difference also accounts for the slowness of biological neural networks, where signals travel at velocities of 1.5 to 5.0 meters per seco...