Chapter 1

Task-Based Programming

What's in this Chapter?

- Working with shared-memory multicore

- Understanding the differences between shared-memory multicore and distributed-memory systems

- Working with parallel programming and multicore programming in shared-memory architectures

- Understanding hardware threads and software threads

- Understanding Amdahl's Law

- Considering Gustafson's Law

- Working with lightweight concurrency models

- Creating successful task-based designs

- Understanding the differences between interleaved concurrency, concurrency, and parallelism

- Parallelizing tasks and minimizing critical sections

- Understanding rules for parallel programming for multicore architectures

- Preparing for NUMA architectures

This chapter introduces the new task-based programming model, which allows you to add parallelism to applications. Parallelism is essential to exploit modern shared-memory multicore architectures. The chapter describes the new lightweight concurrency models and important concepts related to concurrency and parallelism. It provides the background information you need for the next 10 chapters.

Working with Shared-Memory Multicore

In 2005, Herb Sutter published an article in Dr. Dobb's Journal titled “The Free Lunch Is Over: A Fundamental Turn Toward Concurrency in Software” (www.gotw.ca/publications/concurrency-ddj.htm). He argued that developers need to design software with concurrency in mind in order to fully exploit the continuing exponential gains in microprocessor throughput. Microprocessor manufacturers are adding processing cores instead of increasing clock frequency. Software developers can no longer rely on the free-lunch performance gains that increases in clock frequency once provided.

Most machines today have at least a dual-core microprocessor. However, quad-core and octa-core microprocessors, with four and eight cores, respectively, are quite popular on servers, advanced workstations, and even high-end mobile computers. Microprocessors with even more cores are right around the corner. Modern microprocessors offer new multicore architectures. Thus, it is very important to prepare software designs and code to exploit these architectures. The different kinds of applications built with Visual C# 2010 and .NET Framework 4 run on one or many central processing units (CPUs), the main microprocessors. Each of these microprocessors can have a different number of cores, each capable of executing instructions.

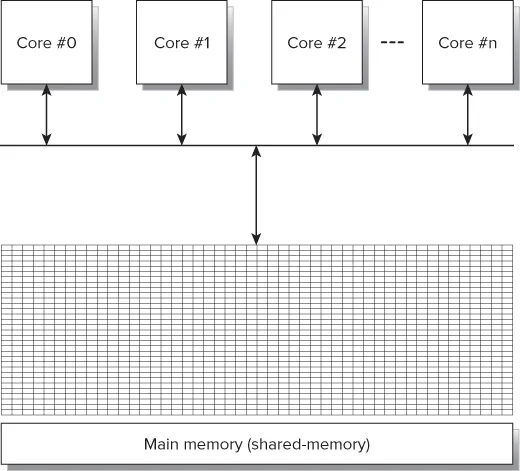

You can think of a multicore microprocessor as many interconnected microprocessors in a single package. All the cores have access to the main memory, as illustrated in Figure 1-1. Thus, this architecture is known as shared-memory multicore. Sharing memory in this way can easily lead to a performance bottleneck.
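As a small illustration, the following sketch asks .NET how many processors the operating system exposes to the current process. `Environment.ProcessorCount` reports logical processors, so the value can be higher than the number of physical cores. The class and method names (`CoreInfo`, `LogicalCoreCount`) are hypothetical and used only for this example.

```csharp
using System;

public static class CoreInfo
{
    // Returns the number of logical processors visible to this process.
    // This counts logical processors, not physical cores.
    public static int LogicalCoreCount()
    {
        return Environment.ProcessorCount;
    }

    public static void Main()
    {
        Console.WriteLine("Logical cores: {0}", LogicalCoreCount());
    }
}
```

All of these logical cores share the same main memory, which is why code running on any of them can read and write the same objects.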

Multicore microprocessors have many different complex micro-architectures, designed to offer more parallel-execution capabilities, improve overall throughput, and reduce potential bottlenecks. At the same time, multicore microprocessors try to reduce power consumption and generate less heat. Therefore, many modern microprocessors can increase or reduce the frequency of each core according to its workload, and they can even put cores to sleep when they are not in use. Windows 7 and Windows Server 2008 R2 support a new feature called Core Parking. When many cores aren't in use and this feature is active, these operating systems put the idle cores to sleep. When these cores are needed again, the operating systems wake them.

Modern microprocessors work with dynamic frequencies for each of their cores. Because the cores don't work with a fixed frequency, it is difficult to predict the performance of a sequence of instructions. For example, Intel Turbo Boost Technology increases the frequency of the active cores. Raising a core's frequency above its nominal value in this way is often loosely described as overclocking.

If a single core is under a heavy workload, this technology allows it to run at a higher frequency while the other cores are idle. If many cores are under heavy workloads, they also run at higher frequencies, but not as high as the frequency achieved by the single core. The microprocessor cannot keep all the cores overclocked for long periods, because doing so consumes more power and raises its temperature faster. The average clock frequency of all the cores under heavy workloads is therefore lower than the one achieved by the single core. As a result, in certain situations some code can run at higher frequencies than other code, which can make measuring real performance gains a challenge.
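Because a single timing can be distorted by frequency changes, one common mitigation is to time the same code several times and look at the spread of the samples. The following is a minimal sketch of that idea, not a rigorous benchmark. The workload (`SumOfSquares`), the helper (`Measure`), and the number of runs are assumptions chosen only for illustration.

```csharp
using System;
using System.Diagnostics;

public static class TimingSketch
{
    // Hypothetical CPU-bound workload used only for illustration.
    public static long SumOfSquares(int count)
    {
        long total = 0;
        for (int i = 0; i < count; i++)
        {
            total += (long)i * i;
        }
        return total;
    }

    // Runs the action several times and returns the elapsed
    // milliseconds of each run, sorted in ascending order.
    public static double[] Measure(Action action, int runs)
    {
        var samples = new double[runs];
        for (int r = 0; r < runs; r++)
        {
            Stopwatch watch = Stopwatch.StartNew();
            action();
            watch.Stop();
            samples[r] = watch.Elapsed.TotalMilliseconds;
        }
        Array.Sort(samples);
        return samples;
    }

    public static void Main()
    {
        double[] samples = Measure(() => SumOfSquares(1000000), 9);
        Console.WriteLine("min: {0:F2} ms, median: {1:F2} ms, max: {2:F2} ms",
            samples[0], samples[samples.Length / 2], samples[samples.Length - 1]);
    }
}
```

A large gap between the minimum and the maximum suggests that frequency scaling or other activity on the machine is affecting the measurement.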

Differences Between Shared-Memory Multicore and Distributed-Memory Systems

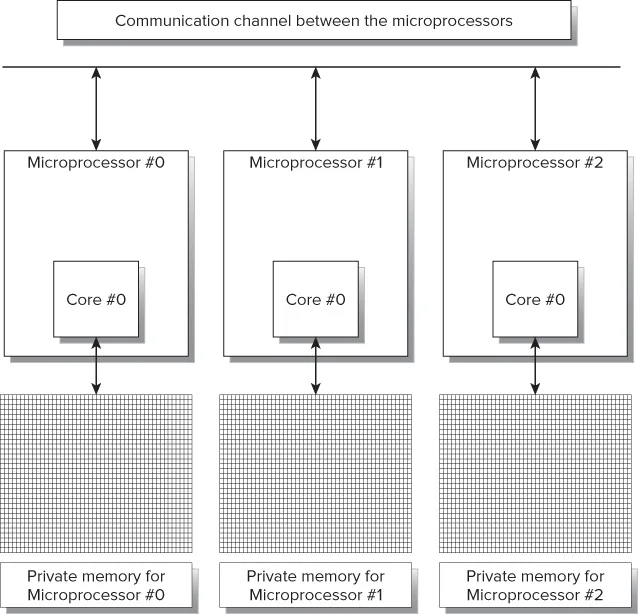

Distributed-memory computer systems are composed of many microprocessors, each with its own private memory, as illustrated in Figure 1-2. Each microprocessor can be in a different computer, with different types of communication channels between them. Examples of communication channels are wired and wireless networks. If a job running on one of the microprocessors requires remote data, it has to communicate with the corresponding remote microprocessor through the communication channel. One of the most popular communication standards used to program parallel applications that run on distributed-memory computer systems is the Message Passing Interface (MPI). It is possible to use MPI to take advantage of shared-memory multicore with C# and .NET Framework. However, MPI's main focus is to help develop applications that run on clusters. Thus, it adds a large overhead that isn't necessary in shared-memory multicore, where all the cores can access the memory without the need to send messages.

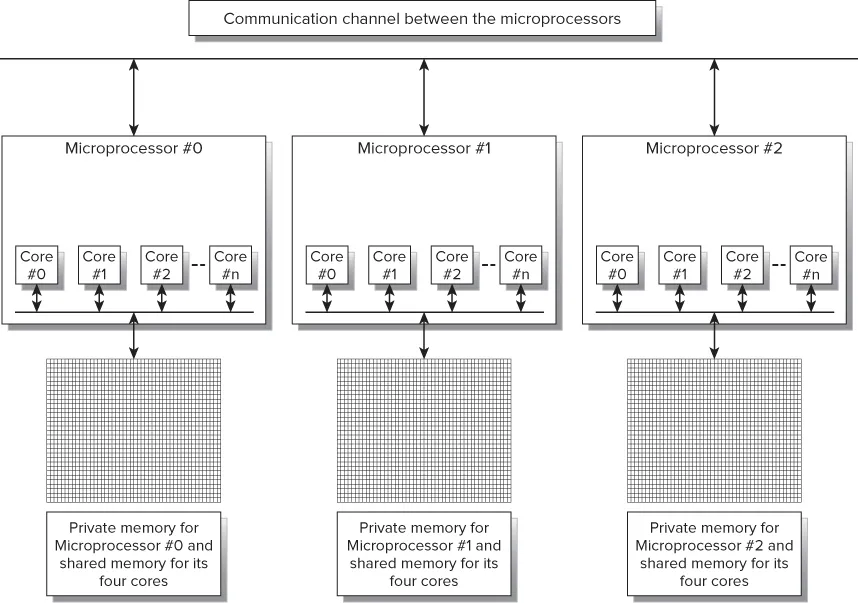

Figure 1-3 shows a distributed-memory computer system with three machines. Each machine has a quad-core microprocessor, and a shared-memory architecture for these cores. This way, the private memory for each microprocessor acts as a shared memory for its four cores.

A distributed-memory system forces you to think about the distribution of the data, because each message sent to retrieve remote data can introduce significant latency. Because you can add new machines (nodes) to increase the number of microprocessors in the system, distributed-memory systems can offer great scalability.

Parallel Programming and Multicore Programming

Traditional sequential code, where instructions run one after the other, doesn't take advantage of multiple cores because the serial instructions run on only one of the available cores. Sequential code written with Visual C# 2010 won't take advantage of multiple cores unless it uses the new features offered by .NET Framework 4 to split the work across many cores. Existing sequential code is not parallelized automatically.
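To make the contrast concrete, the following sketch shows the same loop written sequentially and with `Parallel.For`, one of the features introduced in .NET Framework 4. The per-element work (`Transform`) and the class and method names are hypothetical; the example assumes each iteration is independent of the others.

```csharp
using System;
using System.Threading.Tasks;

public static class ParallelLoopSketch
{
    // Hypothetical per-element work, used only for illustration.
    private static double Transform(int value)
    {
        return Math.Sqrt(value) * 2.0;
    }

    // Sequential version: every iteration runs on the calling thread.
    public static double[] RunSequential(int count)
    {
        var results = new double[count];
        for (int i = 0; i < count; i++)
        {
            results[i] = Transform(i);
        }
        return results;
    }

    // Parallel version: iterations may run on different cores.
    // Each iteration writes only to its own slot, so no locking is needed.
    public static double[] RunParallel(int count)
    {
        var results = new double[count];
        Parallel.For(0, count, i =>
        {
            results[i] = Transform(i);
        });
        return results;
    }

    public static void Main()
    {
        double[] sequential = RunSequential(1000);
        double[] parallel = RunParallel(1000);
        Console.WriteLine("Last values: {0} and {1}", sequential[999], parallel[999]);
    }
}
```

The developer has to request the parallel version explicitly; the sequential loop stays on a single core no matter how many cores are available.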

Parallel programming is a form of programming in which the code takes advantage of the parallel execution possibilities offered by the underlying hardware. Parallel programming runs many instructions at the same time. As previously explained, there are many different kinds of parallel architectures, and their detailed analysis would require a complete book dedicated to the topic.

Multicore programming is a form of programming in which the code takes advantage of multiple execution cores to run many instructions in parallel. Multicore and multiprocessor computers offer more than one processing core in a single machine. Hence, the goal is to do more in less time by distributing the work among the available cores.

Modern microprocessors can execute the same instruction on multiple data, a capability that Michael J. Flynn classified as Single Instruction, Multiple Data (SIMD) in the taxonomy he proposed in 1966. You can take advantage of these vector-processing capabilities to reduce the time needed to execute certain algorithms.
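The SIMD idea can be sketched in C# as follows. Note the assumption: this example uses `System.Numerics.Vector<T>`, which is not part of .NET Framework 4 and requires a later .NET version, so it is only one possible way to illustrate the concept and not the approach this chapter describes. The class and method names are hypothetical.

```csharp
using System;
using System.Numerics;

public static class SimdSketch
{
    // Adds two arrays of equal length element by element.
    // Assumption: System.Numerics.Vector<T> is available (later .NET versions).
    public static float[] Add(float[] left, float[] right)
    {
        var result = new float[left.Length];
        int width = Vector<float>.Count; // elements processed per vector operation
        int i = 0;

        // Vectorized part: one addition handles 'width' elements at a time.
        for (; i <= left.Length - width; i += width)
        {
            var a = new Vector<float>(left, i);
            var b = new Vector<float>(right, i);
            (a + b).CopyTo(result, i);
        }

        // Scalar remainder for the elements that do not fill a whole vector.
        for (; i < left.Length; i++)
        {
            result[i] = left[i] + right[i];
        }
        return result;
    }

    public static void Main()
    {
        float[] sum = Add(new float[] { 1f, 2f, 3f }, new float[] { 10f, 20f, 30f });
        Console.WriteLine(string.Join(", ", sum));
    }
}
```

Whether the vector operations are actually hardware accelerated depends on the runtime and the microprocessor; `Vector.IsHardwareAccelerated` reports this at run time.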

This book covers two areas of parallel programming in great detail: shared-memory multicore programming and the usage of vector-processing capabilities. The overall goal is to reduce the execution time of the algorith...