
1 Overview of Mixed and Augmented Reality in Medicine

Terry M. Peters

CONTENTS

1.1 Definitions

1.2 Why Use AR?

1.3 Beginnings

1.4 Psychophysical Issues

1.5 Conclusion

References

1.1 DEFINITIONS

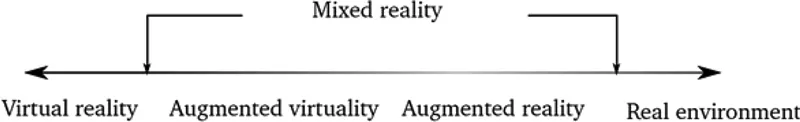

From the outset, let us define what we mean by “augmented and mixed reality” (as well as “virtual reality,” for that matter). The most concise definition, and one that has endured for decades, is contained in the taxonomy introduced by Milgram and Kishino (1994), which established a “reality-virtuality continuum” between the real and virtual worlds (Figure 1.1). Milgram described “augmented reality” (AR) as virtual images fused with the real world, and “augmented virtuality” (AV) as a largely virtual (computer-generated) world augmented by components that represent the “real” world. The span of the continuum between the purely real and purely virtual extremes, encompassing both AR and AV, is termed “mixed reality” (MR). In practice, however, we encounter examples at many points on this spectrum, and common usage has gravitated (perhaps somewhat loosely) towards using the term “augmented reality” for any technique that combines artificially generated information with information from the physical world, be it visual, tactile, or auditory, while “virtual reality” refers to an entirely virtual environment with no “real” components. In keeping with common usage, this book employs the term “augmented reality” sometimes in Milgram’s strict sense and sometimes in the broader sense of “mixed reality.”

FIGURE 1.1 The reality-virtuality continuum. (Adapted from Milgram, P. and Kishino, F., IEICE Trans Inform Sys., 77, 1321–1329, 1994.)

1.2 WHY USE AR?

The use of AR in medicine has clearly been driven by the ever-increasing power of computational resources, which have made it possible not only to fuse images in real time but also to perform computational simulations of increasingly complex models. Its use has manifested in two distinct domains: simulation and intervention. AR is employed to add information to a visualized scene, most commonly to integrate images from a medical imaging modality with the patient (much like the science fiction concept of “x-ray vision”) to see organs and surgical targets inside the human body. Registering a simple x-ray projection with the patient, while helpful, is not optimal; today’s cross-sectional, three-dimensional (3D), and dynamic imaging modalities offer far more information. Within this context, we also consider the fusion of two or three imaging modalities, such as ultrasound (US) and video, US and CT, or even US, CT, and video, as examples of AR. In such cases, the “real” scene may not be viewed directly but is represented by a surrogate image (video, for example). AR may also be used to provide expert annotation on images, in much the same manner that consumer AR applications can label buildings or items of interest in a digital image of a scene.

1.3 BEGINNINGS

The examples given below are far from exhaustive but represent the author’s view of the significant events that influenced his own research in the area. One of the earliest demonstrations of the value of AR in a surgical procedure was that of Bajura et al. (1992), who described a method for fusing a US image with a patient using a head-mounted display (HMD). The same group subsequently demonstrated AR visualization during laparoscopic surgery, as reported in their MICCAI paper (Fuchs et al. 1998), showing that accurate calibration and tracking could achieve robust registration of the virtual scene (the surgical target) with the real-world environment. Their system combined a custom-built video pass-through HMD; a structured-light projector coupled to a laparoscope, which enabled the capture of both depth and color data; and a “keyhole” display method that unambiguously placed the virtual image in the appropriate context of the real world. Their HMD predated similar devices that have since become commodity items in the gaming world. Around the same time, Pisano et al. (1998), also affiliated with Fuchs’s laboratory, demonstrated AR applied to US-guided breast cyst aspiration, wherein the operator wore an HMD that displayed a US image in situ, using the same keyhole approach described above, to visualize the breast lesion (Figure 1.2).

FIGURE 1.2 Pisano and Fuchs’s pioneering demonstration of an AR application integrating a US image with the real world for a breast biopsy. (Courtesy of UNC Chapel Hill Department of Computer Science, Chapel Hill, NC.)
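The calibration and tracking on which such HMD-based overlays depend can be sketched as a chain of rigid transforms that carries a US pixel into the viewing camera’s frame. The Python/NumPy sketch below is illustrative only and is not the implementation of any cited system: it assumes a known image-to-probe calibration, an optical tracker reporting the poses of the probe and of the HMD camera, and isotropic pixel spacing. The frame names (`T_probe_image`, `T_tracker_probe`, `T_tracker_camera`) and helper functions are hypothetical.

```python
import numpy as np


def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector translation."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(rotation, dtype=float)
    T[:3, 3] = np.asarray(translation, dtype=float)
    return T


def us_pixel_to_camera(u, v, pixel_spacing_mm, T_probe_image, T_tracker_probe, T_tracker_camera):
    """Map US pixel (u, v) into a hypothetical HMD camera frame.

    Convention: T_a_b maps coordinates expressed in frame b into frame a.
    """
    # Pixel indices -> millimetres; the US image plane is taken as z = 0 in the image frame.
    p_image = np.array([u * pixel_spacing_mm, v * pixel_spacing_mm, 0.0, 1.0])
    # image -> probe (calibration), probe -> tracker (tracking),
    # tracker -> camera (inverse of the tracked camera pose).
    T_camera_image = np.linalg.inv(T_tracker_camera) @ T_tracker_probe @ T_probe_image
    return (T_camera_image @ p_image)[:3]
```

Because the overlay position is the product of every link in the chain, an error in the calibration, the probe tracking, or the camera tracking propagates directly into the displayed image, which is why accurate calibration and tracking are emphasized.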

Shortly after this, Rosenthal et al. (2002) employed a similar US/AR setup and reported the results of a randomized, controlled trial comparing the accuracy of standard US-guided needle biopsies with that obtained using a 3D AR guidance system. Fifty core biopsies of breast phantoms were randomly assigned to one of the two methods, the raw ultrasound data from each biopsy were recorded, and the distance of each biopsy from the ideal position was measured. The results showed that the HMD-based AR display yielded a statistically significantly smaller mean deviation from the desired target than the standard display method. This was one of the first studies to suggest that AR systems can offer improved accuracy over traditional biopsy guidance methods.

An adaptation of this approach was developed by Stetten et al. (2001, 2002). In their system, the US image was viewed directly in situ, without the need for an HMD or other visual aids. Instead, the image, displayed on a small screen attached to the US probe, was reflected by a half-silvered mirror so that it appeared to lie in the same plane as the anatomy being scanned. This “Sonic Flashlight” approach has since been the topic of multiple publications (Stetten et al. 2005; Shelton et al. 2007).

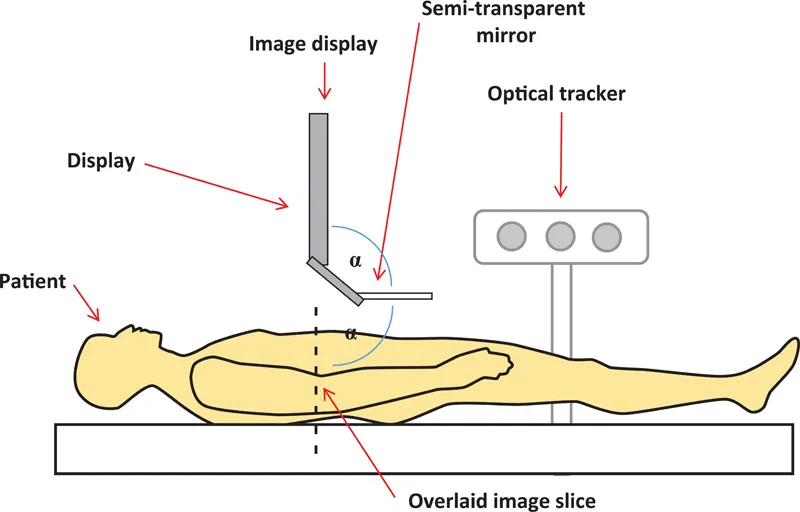

The concept of using a half-silvered mirror was also exploited by Fichtinger et al. (2005) and Baum et al. (2016, 2017) (Figure 1.3) to overlay, in the correct plane, a CT image onto the direct view of a patient in a CT scanner in order to guide a needle to a target in the spine. In both the Stetten and Fichtinger systems, a 2D image is displayed on a monitor whose reflection in a half-silvered mirror is arranged to coincide with the anatomical plane being imaged. Thus, the virtual (reflected) images are locked in space relative to the coordinate system of the US probe or the CT scanner and can be observed by multiple viewers through the half-silvered mirror, without the need for special eyewear. In Stetten’s US device, the imaged plane is moved in 3D space by moving the US transducer. In the CT-based approach employed by Fichtinger, the imaged plane is shifted by moving the mirror-monitor frame while simultaneously updating the displayed image slice. Several researchers have since evaluated this approach in multiple scenarios (e.g., Fritz et al. 2012; Marker et al. 2017).

FIGURE 1.3 Schematic of the reflection technique used in both the “Sonic Flashlight” of Stetten and the CT-based AR system of Fichtinger. In both cases, an image generated by a tomographic imaging modality is displayed on the monitor and reflected to the appropriate plane within the patient via the half-silvered mirror. Liao’s “Integral Videography” (IV) approach employs a similar configuration, except that the 2D image is replaced by an IV display that consists of an array of hemispherical lenses matched to a high-resolution screen. This combination is able to produce a true 3D virtual image that is reflected onto the appropriate region of the patient. (Courtesy of Dr. G. Fichtinger, Queen’s University, Kingston, Canada.)
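The geometry that places the reflected image in the scan plane is that of an ideal plane mirror: a point p on the monitor appears at p′ = p − 2((p − m)·n)n, where m is any point on the mirror and n is its unit normal. Because this virtual-image position does not depend on where the observer stands, every viewer sees the image at the same location, consistent with the multi-viewer property described above. The NumPy sketch below assumes an idealized, infinitely thin mirror and ignores refraction in the glass; the coordinate layout and values are hypothetical.

```python
import numpy as np


def reflect_point(p, mirror_point, mirror_normal):
    """Return where point p appears when viewed in an ideal plane mirror."""
    p = np.asarray(p, dtype=float)
    n = np.asarray(mirror_normal, dtype=float)
    n = n / np.linalg.norm(n)  # unit normal of the mirror plane
    d = np.dot(p - np.asarray(mirror_point, dtype=float), n)  # signed distance to the mirror
    return p - 2.0 * d * n  # the image lies the same distance on the other side


# Hypothetical layout: the monitor lies in the plane y = 0 and the half-silvered
# mirror in the plane y = z, so the reflected image lands in the plane z = 0,
# which here plays the role of the imaged (scan) plane.
mirror_point = np.zeros(3)
mirror_normal = np.array([0.0, 1.0, -1.0])
monitor_pixels = np.array([[1.0, 0.0, 3.0], [-2.0, 0.0, 5.0]])
virtual_pixels = np.array([reflect_point(p, mirror_point, mirror_normal) for p in monitor_pixels])
print(virtual_pixels)  # each reflected point has z = 0
```

Placing the mirror so that it bisects the angle between the monitor plane and the scan plane is what makes the two planes coincide after reflection.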

An elegant system that was tested in a neurosurgical operating room (OR) was presented by Edwards et al. (1999). It allowed surgeons to view objects extracted from preoperative radiological images by accurately registering these objects and overlaying them as 3D stereoscopic images in the optical path of a surgical microscope. The objective was to show the surgeon structures lying beneath the brain surface, in their correct 3D positions, within the view normally seen through the microscope (Figure 1.4). Patient registration to the preoperative images was achieved using bone-implanted markers, and a dental splint equipped with optical tracking markers was used for patient tracking. The microscope was also tracked optically. An added feature (and complication) of the system was that the focus and zoom of the microscope optics had to be modeled. The accuracy of the system was on the order of 1 mm. However, the system raised several psychophysical issues; for example, a virtual image that is geometrically in the correct place is not necessarily perceived by the human visual system to be in that position (see Section 1.4 Psychophysical Issues).

FIGURE 1.4 Edwards’s implementation of AR in a neurosurgery microscope as embodied in the “microscope-assisted guided interventions” (MAGI) system. Left: Surgeon using MAGI-equipped OR microscope; Right: Fused image of neuroma as seen through the microscope. (Courtesy of Dr. P. J. “Eddie” Edwards.)
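The text does not state which algorithm Edwards et al. used to compute the marker-based registration. A common choice for paired fiducials is the closed-form, SVD-based least-squares rigid fit, sketched below in Python/NumPy as an assumption rather than as their method. The root-mean-square residual at the markers (the fiducial registration error, FRE) is one simple accuracy measure; note that it is not the same as the error at a surgical target. The function names are hypothetical.

```python
import numpy as np


def register_points(fixed, moving):
    """Least-squares rigid transform (R, t) such that R @ moving[i] + t ~= fixed[i].

    fixed, moving: (N, 3) arrays of corresponding marker positions, N >= 3, not collinear.
    """
    fixed = np.asarray(fixed, dtype=float)
    moving = np.asarray(moving, dtype=float)
    c_fixed, c_moving = fixed.mean(axis=0), moving.mean(axis=0)
    # 3x3 cross-covariance of the centred point sets.
    H = (moving - c_moving).T @ (fixed - c_fixed)
    U, _, Vt = np.linalg.svd(H)
    # Force a proper rotation (det = +1) rather than a reflection.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = c_fixed - R @ c_moving
    return R, t


def fiducial_registration_error(fixed, moving, R, t):
    """RMS distance between the fixed markers and the transformed moving markers."""
    residuals = np.asarray(fixed, dtype=float) - (np.asarray(moving, dtype=float) @ R.T + t)
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))
```

With noise-free correspondences the fit recovers the true transform exactly; with real marker localization noise the FRE is nonzero, and the error at a target away from the markers can be larger or smaller than the FRE.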

Motivated by Edwards’s earlier work, Cheung et al. (2010) evaluated the role of AR in the resection of kidney tumors under US and laparoscopic guidance. To reduce the cognitive workload caused by the lack of cohesion between endoscopic and US images displayed on separate screens, the researchers fused the two so that the US image appeared in its correct position and orientation within the laparoscopic view. The goal of this work was to allow the surgeon to locate the tumor more accurately, establish safety margins, and, because speed is essential for patient safety during the procedure, resect the tumor more rapidly. Surgeon performance was assessed using both the standard-of-care approach and their intuitive visualization platform, which required the surgeon to observe only a single fused image. In a phantom study that mimicked the tumor resection of a partial nephrectomy, they achieved a registration error of around 2.5 mm. While the fusion visualization system yielded faster planning of the resection, benefits in terms of the overall speed of the procedure were not evident in this limited study. However, a real benefit was the more accurate specification of tumor resection margins.

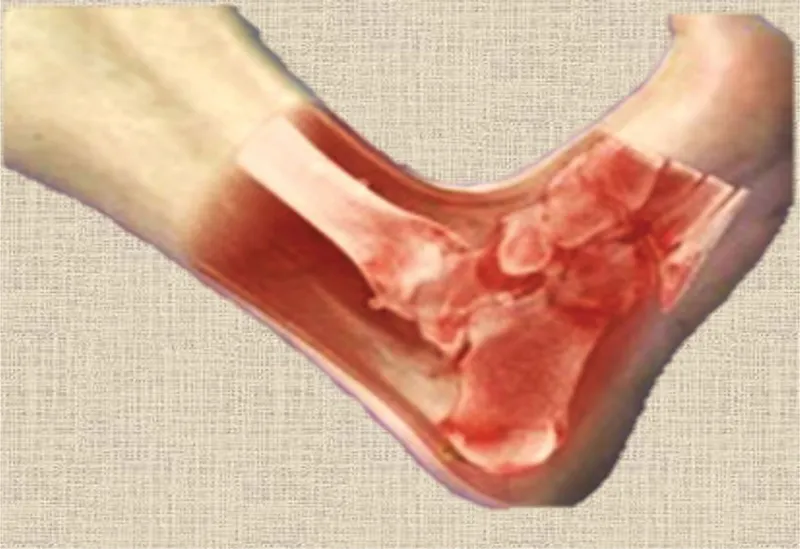

One research group that has arguably had more impact than any other on AR in medicine, particularly with respect to image-guided interventions, is that of Nassir Navab at the Technical University of Munich. Beginning in 2006, this group published multiple seminal papers on perceptual issues in AR (Sielhorst et al. 2006); AR-based platforms for orthopedic surgery (Traub et al. 2006); the fusion of ultrasound and gamma/beta images for cancer detection (Wendler et al. 2006, 2007); a “Virtual Mirror” (Bichlmeier et al. 2009) that provides a more intuitive user interface than direct image fusion; and an AR x-ray C-arm (Navab et al. 2010; Fallavollita et al. 2016) that permits real-time fusion of x-ray and optical images. These applications represent just a sample of the group’s many contributions. A compelling example of their work is shown in Figure 1.5, which demonstrates the effective fusion of a CT image of an ankle with a direct view of the limb.

FIGURE 1.5 One of the many examples of compelling AR that have come from the Navab laboratory at the Technical University of Munich: a CT representation of an ankle fused with a real image in a manner that preserves realism. (Courtesy of Dr. Nassir Navab, Technical University of Munich, Munich, Germany.)

In the neurosurgery domain, Wang et al. (2011) developed an AR approach that combines a real environment with virtual models to plan epilepsy surgery. During such procedures, it is important for the surgeon to correlate preoperative cortical morphology (from preoperative images) with the actual surgical field. This team developed an alternative approach to enhanced visualization by fusing a direct (photographic) view of the surgical field with the 3D patient model during image-guided epilepsy surgery. To achieve this goal, they correlated the preoperative plan with the intraoperative surgical scene, first by a manual landmark-based registration and then by an intensity-based perspective 3D-2D registration to estimate the camera pose. Using the calculated camera pose, the 2D photographic image was then texture-mapped onto the standard 3D preoperative model created by an image-guidance platform. This approach was validated clinically as part of a neuronavigation system, demonstrating its efficacy as an alternative to sophisticated AR environments for assisting in epilepsy surgery. Because it requires no specialized display equipment and only minimal changes to existing systems and workflow, the approach is well suited to the OR environment.
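Once the camera pose is known, texture-mapping a photograph onto a 3D model amounts to projecting each model vertex into the image with a pinhole camera model and using the resulting pixel position as that vertex’s texture coordinate. The NumPy sketch below is not Wang et al.’s implementation: it assumes known intrinsics `K` and pose `(R, t)` (both hypothetical values in the test), ignores lens distortion and occlusion, and assumes every vertex lies in front of the camera.

```python
import numpy as np


def project_vertices(vertices, K, R, t):
    """Project (N, 3) model vertices to (N, 2) pixel coordinates with a pinhole camera."""
    X = np.asarray(vertices, dtype=float)
    Xc = X @ np.asarray(R, dtype=float).T + np.asarray(t, dtype=float)  # model -> camera frame
    uvw = Xc @ np.asarray(K, dtype=float).T  # apply the intrinsic matrix
    return uvw[:, :2] / uvw[:, 2:3]  # perspective divide by depth


def texture_coords(vertices, K, R, t, width, height):
    """Per-vertex texture coordinates in [0, 1] for an image of size width x height."""
    uv = project_vertices(vertices, K, R, t)
    u_tex = uv[:, 0] / width
    # Image rows grow downwards, whereas (OpenGL-style) texture v grows upwards.
    v_tex = 1.0 - uv[:, 1] / height
    return np.stack([u_tex, v_tex], axis=1)
```

A practical system would also discard vertices that are hidden from the camera, so that the photograph is painted only onto the visible cortical surface.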

An additional example was presented by Abhari et al. (2015), who applied AR to neurosurgical planning. Planning surgical interventions is a complex task that demands a high degree of perceptual, cognitive, and sensorimotor skill to reduce intra- and postoperative complications. It also requires a great deal of spatial reasoning to relate the reference frames of the preoperatively acquired medical images and the patient. For neurosurgical interventions, traditional planning approaches tend to focus on ways to visualize preoperative images but rarely support transformation between different spatial reference frames. Consequently, surgeons usually rely on their previous experience and intuition to perform these mental transformations. For surgical trainees, this may lead to longer operation times or an increased chance of error as a result of the additional cognitive demands. To address this problem, Abhari et al. introduced ...