- 270 pages

- English

- ePUB (mobile friendly)

- Available on iOS & Android

eBook - ePub

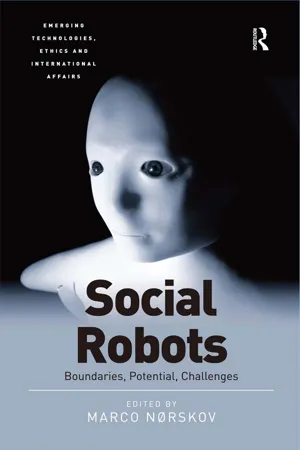

About this book

Social robotics is a cutting-edge research area that brings together researchers and stakeholders from various disciplines and organizations. The transformational potential that these machines, for example caregiving, entertainment, or partner robots, hold for our societies and for us as individuals seems limited only by our technical capabilities and our imagination. This collection contributes to the field of social robotics by exploring its boundaries from a philosophically informed standpoint. It constructively outlines central potentials and challenges and thereby also provides a stable foundation for further empirical, qualitative, or methodological research.

PART I

Boundaries

Chapter 1

On the Significance of Understanding in Human–Robot Interaction

This chapter argues that any discussion of human–robot interaction must first analyze whether and how humans and robots can understand each other. Drawing on different types of interaction and the roles ascribed to the robot, this contribution presents different parameters and levels of understanding with regard to human perceptual experience. It will be shown that understanding in a social interaction is guided by the observations made and the interpretations of a given situation, and is also influenced by the observer’s personal and cultural experiences. To this end, Alfred Schütz’s socio-phenomenological approach to human–human interaction will shed light on the possibilities and problems of understanding in human–robot interaction.

Introduction

We assume that, owing to technological advances in imitating or reproducing human behavior, human interactions with robots in the future will differ from the way we use simple technological artifacts today. While the term interaction originally referred to multi-agent systems, its use has shifted from a technical description of what happens within a system toward a social description of the relation between humans and robots. Talking about human–robot interaction (HRI) is common practice in robotics. A very special form of HRI is social robotics, which focuses on social interaction. The idea of a social robot that assumes the role of a social partner or companion is associated with the belief that a robot will be generally accepted when it appears human-like (Kanda et al. 2007, Fong, Nourbakhsh, and Dautenhahn 2003) and “displays rich social behavior” (Breazeal 2004, p. 182). The long-term aim is to build “human-made autonomous entities that interact with humans in a humanlike way” (Zhao 2006, p. 405). Looking and behaving like a human is meant to create the impression that “[i]nteracting with the robot is like interacting with another socially responsive creature that cooperates with us as a partner” (Breazeal 2004, p. 182). In analogy to Searle’s distinction in the field of AI (see Searle 1980), Jutta Weber (2014) distinguishes between a strong and a weak approach to HRI. The weak approach in HRI assumes that emotions, sociality, and aspects of being human that would ultimately require a theory of mind cannot be realized in robotics, but can only be imitated and feigned. The strong HRI approach, by contrast, rests on the assumption that humans can create robots that are social by nature:

The strong approach in HRI aims to construct self-learning machines that can evolve, that can be educated and will develop real emotions and social behavior. Similar to humans, social robots are supposed to learn via the interaction with their environment, to make their own experiences and decisions, to develop their own categories, social behaviors and even purposes. … [T]he followers of the strong approach—such as Cynthia Breazeal and Rodney Brooks …—strive for true social robots, which do not fake but embody sociality. (Weber 2014, p. 192)

Apart from the possibility of robots emerging as a completely different species with their “own categories”, the phrase “embody sociality” (ibid.) indicates that robots will become social beings and, moreover, develop a mind equal to the human mind through emerging cognitive behavior and interaction. An important implication is that the robot will be able to understand us. Without understanding, no interaction is possible, since social interaction between humans is a multifaceted phenomenon with various layers of verbal and non-verbal behavior that need to be understood and interpreted in the context of the situation. This contribution will discuss the significance of observing bodily movements for understanding, at least at the level of surface behavior.

The notion of understanding has a broad history and methodology in philosophy, especially in hermeneutics, the philosophy of understanding. Alfred Schütz, whose concepts may be useful for analyzing the question of understanding between humans and artifacts, was an intellectual disciple of Husserl’s phenomenology. He wanted to put Weber’s interpretative sociology on a phenomenological foundation, but did not consider his work to be rooted in hermeneutics.1 Nevertheless, he avails himself of fundamental hermeneutic concepts (cf. Staudigl 2014, p. 3), understanding being one of them. For Schütz, the process of understanding unfolds through interaction with the alter ego and is not reduced to mere subjective acts of understanding. Two aspects make Schütz’s approach suitable for analyzing whether there can be any understanding between humans and robots at all. First, his conception of the construction of our social reality is pragmatic in nature and, hence, constituted by social action and interaction. Second, the interaction partner does not necessarily have to be a conscious being in the strict sense. Schütz conceives of the social world as constituted by interaction processes in which the subject develops his thought, perception, and actions in correlation with the counterpart and in reconciliation with existing sociocultural patterns. He focuses on the formation of meaningful action and asks how processes of understanding action and conduct emerge in daily life and how mutual understanding and relations develop in general (Grathoff 1989, p. 181).

First, I will give an overview of different approaches to and visions of human–robot interaction in order to characterize the role understanding plays in HRI. I argue that understanding should go beyond the mere observation of behavior; however, observation itself will be shown to proceed on different levels of understanding. During an interaction, the observation of behavior is always accompanied by anticipations of future actions and an interpretation of the situation at hand. This will be demonstrated using the socio-phenomenological concepts of Alfred Schütz.

Interaction with Robots

In his studies of human–robot interaction while dancing a waltz, Kosuge distinguishes between high-level and low-level interactions:

The goal of our research is to reproduce the pHHI of waltz with pHRI, in which a mobile robot plays the follower’s role, being capable of estimating human leader’s next step (higher level) and adapting itself to the coupled body dynamics (lower level). (Wang and Kosuge 2012, p. 3134)

Couple dances are inherently social, with the waltz serving as a “typical example of demonstrating human’s capabilities in physical human–human interaction (pHHI)” (ibid.). Here, low-level physical human–robot interaction (pHRI) is viewed from a Newtonian perspective and treated as a contact force, that is, a force the partners apply to each other. High-level interaction, which in Wang and Kosuge (2012) is second-tier, still requires the robot to have advanced motor skills or to “know” how to a) move by itself and b) estimate or anticipate the next move (ibid., see also Ikemoto, Minato, and Ishiguro 2008, p. 68). Kosuge and Hirata nevertheless concede:

[H]owever, since most of these robots have been developed for entertainment or mental healing, we could not utilize them for realizing complicated tasks based on the physical interaction between the humans and the robots. To realize complicated tasks, however, physical interaction between the robots and the humans would be required. (Kosuge and Hirata 2004, p. 10)

In the case of coordination among humans, each human would move based on the intention of other people, information from environments, the knowledge of executed tasks, etc. If the robots could move based on the information actively similar to the humans, we could execute various tasks effectively based on the physical interaction with the robots. (ibid.)

Kosuge and Hirata (2004) emphasize that physical interaction based on bodily movements is not only the execution of physical forces, but highly influenced by cognitive capacities, such as intentions, information gathered from the direct environment, and also the knowledge of how to apply or classify the information.

An even more basic approach is chosen by Ikemoto, Minato, and Ishiguro (2008), who take a step back and develop “a control system that allows physical interaction with a human, even before any motor learning has started” (ibid., p. 68). The success of the interaction, which consists of a human helping the robot CB2 to stand up, is measured in three categories of smoothness (smooth, non-smooth, failed; ibid., p. 69). Despite this basic, preparatory approach to interaction, pHRI is an “extension to HRI”, and, in the long run, “this research is to allow a humanoid robot to develop both motor skills and cognitive abilities in close physical interaction with a human teacher” (ibid., p. 72).

The reverse objective, which takes this close and even more dynamic interaction with a human teacher a step further, is to deploy the robot itself as a teacher.

This leads to another area of HRI research, which focuses on assigning specific roles to the robot. Scholtz (2003) created a taxonomy of roles in human–robot interaction in a work environment: supervisor, operator, mechanic, peer, and bystander. All roles are defined by the amount of control exercised over the robot. Unlike many others in HRI research, Scholtz explicitly defines human–robot interaction:

I use the term “human–robot interaction” to refer to the overall research area of teams of humans and robots, including intervention on the part of the human or the robot. I use “interventions” to classify instances when the expected actions of the robot are not appropriate given the current situation and the user either revamps a plan; gives guidance about executing the plan; or gives more specific commands to the robot to modify behavior. (Scholtz 2003, sec. 2)

The quote demonstrates that, in this case, human–robot interaction is asymmetric and not designed to be social, but task- and goal-oriented in order to achieve joint results. Goodrich and Schultz (2007, pp. 233–4) extend the taxonomy with the roles of information consumer (“the human does not control the robot, but the human uses information coming from the robot”) and mentor (“the robot is in a teaching or leadership role for the human”). The roles are still asymmetric, owing to their specific nature. Nevertheless, reversing the roles gives the robot a higher function.2 The roles of peer and mentor are especially appealing, because robots can then act as assistants in a wide range of applications. Examples are Robovie in a classroom (Kanda et al. 2007, Chin, Wu, and Hong 2011), Robotinho, a mobile full-body humanoid museum guide (Faber et al. 2009, Nieuwenhuisen and Behnke 2013), and KASPAR, the social mediator for children with autism (Dautenhahn 2007, Iacono et al. 2011). By assigning the role of social mediator, mentor, or peer to a robot, the emphasis is placed on the social aspects of the interaction. It becomes obvious here that the question of what constitutes the social does not seem to be considered relevant: social interaction only seems to refer to the fact that the human’s situation is social insofar as an encounter with another entity, in this case a robot, takes place. The robot notices the human and can answer questions relating to its role. Social conduct, such as greetings, and social behavior in general seem to be an add-on. Giuliani et al. (2013) even asked whether social behavior is necessary for a robot at all. To answer this question, they conducted an experiment comparing task-based behavior with the socially intelligent behavior of a robotic bartender.3 What makes this interesting is that bartending is a service but can also play a social role.

Especially when it comes to role ascriptions, developing role-appropriate personas has recently become more relevant. Out of necessity, because collecting data on individual profiles has proven difficult, personas are used to represent a potential group of subjects. In the long run, the idea is that the robot already displays certain social behavior and can then adapt to the individual needs of its owners. Sekmen and Challa, for example, state:

A social robot needs to be able to learn the preferences and behaviors of the people with whom it interacts so that it can adapt its behaviors for more efficient and friendly interaction. (2013, p. 49)

Yet Dautenhahn emphasizes:

However, it is still not generally accepted that a robot’s social skills are more than a necessary ‘add-on’ to human–robot interfaces in order to make the robot more ‘attractive’ to people interacting with it, but form an important part of a robot’s cognitive skills and the degree to which it exhibits intelligence. (2007, p. 682)

This increase in attractiveness is to be achieved by making the robot socially more intelligent without relying on the human tendency toward anthropomorphization (Dautenhahn 1998, p. 574). For this purpose, the “social intelligence hypothesis”, which stems from the idea that intelligence originally had a social function and was only later used to solve abstract (e.g., mathematical) problems, should be applied to robotics (Dautenhahn 1995, 1998, 2007). In fact, Dautenhahn states that

… socially intelligent agents (SIAs) are understood to be agents that do not only from an observer point of view behave socially but that are able to recognize and identify other agents and establish and maintain relationships to other agents. (Dautenhahn 1998, p. 573)

Embodying social behavior goes beyond verbal interaction or the display of emotions; it includes developing a personal narrative of one’s own for individualized interaction (“autobiographic agent”, ibid., p. 585) and enhancing social understanding. Social understanding is not only grounded in a biographic context; it also requires “empathic resonance” (ibid.). These central aspects of social understanding parallel the paradigms for safe social interaction between humans and robots introduced by Breazeal, namely readability, believability, and understandability (Breazeal 2002, pp. 8–11).

The notion of “readability” is meant to guarantee that the robot provides social cues that allow the human to predict its behavior and actions. This means that its modes of expression, such as facial expressions and eye gaze, must be revealing and easily understood, so that

… the robot’s outwardly observable behavior must serve as an accurate window to its underlying computational processes, and these in turn must be well matched to the person’s social interpretations and expectations. (ibid., p. 10)

Thus, the robot’s outward behavior must mirror its ongoing computational processes. The underlying assumption is that humans are readable and that readability is an indicator of social behavior. Yet the processes underlying human behavior, namely acts of human thinking, are not readable to the human eye, and, moreover, readability might not hold for all social interactions. Most interactions rely on very subtle social cues that occur within seconds, e.g., micro-expressions. These might not even be visible or consciously noticeable, but humans register them subconsciously, and they might shape our subsequent interaction with the other human partner. Readability is important for the human to react to the robot’s behavior. The behavior must also create the “illusion of life” (ibid., p. 8), which Breazeal calls believability. The “illusion of life” is made believable not only because the robot appears to be alive, but also because it displays personality traits (ibid., p. 8, see also Dautenhahn 1995, 1998). Believability depends heavily on the user: for a robot to be believable, an observer must be able and willing to apply sophisticated social-cognitive abilities to predict, understand, and explain the character’s observable behavior and inferred mental states in familiar social terms (Breazeal 2002, p. 8). Although social interactions always depend on the willingness of the participating parties, the robot must display certain characteristically human-like behavior to increase this willingness. To interact with people in a human-like manner, sociable robots must perceive and understand the richness and complexity of natural human social behavior. Humans communicate with each oth...

Table of contents

- Cover

- Half Title

- Title Page

- Copyright Page

- Table of Contents

- Notes on Contributors

- List of Abbreviations

- Acknowledgments

- Editor’s Preface

- Part I Boundaries

- Part II Potential

- Part III Challenges

Social Robots, by Marco Nørskov (Philosophy & Politics).