
1 Dual process theory

Dual process theory: origins

The idea that the mind is functionally differentiated is not new. But after spending quite some time in hiding under the name of “faculty psychology” as one of philosophy’s many – many – shameful family secrets, it experienced a threefold revival in the final quarter of the 20th century, with psychology, economics, and philosophy for once converging on something. Nowadays, the name “two systems” or “dual process” theory has become common parlance. Its heyday culminated in the so-called Nobel Prize for Economics in 2002 and was closely followed, as if to grimly confirm its central predictions, by the inception of postalethic politics. No doubt when people first came up with the distinction between System I and System II, they did not expect that political parties would feel compelled to pick sides.

In psychology, many of the central insights of dual process theory were anticipated by studies showing how easily human judgment and agency are swayed by extraneous features of the situation, how prone people are to confabulation (Nisbett and Wilson 1977 and 1978, Hirstein 2005) and how much of their thinking goes on beneath the tip of the cognitive iceberg (Wilson 2002). Many of our intuitive judgments don’t hold up under closer scrutiny. And the errors we make aren’t just random, either: people’s thinking follows predictable patterns of error.1

Much of the work leading to dual process psychology has thus been about devising clever ways to trick people into revealing how feeble-minded they are. In behavioral economics, this art was perfected by Amos Tversky and Daniel Kahneman (1982; see also Kahneman 2003), who were able to show in just how many ways human beings deviate from standard models of rational choice. Though the two-process framing was not originally the focal point of their work, Kahneman (2000) subsequently confirmed that “Tversky and I always thought of the heuristics and biases approach as a two-process theory” (682). Later, Kahneman would go on to write the instant classic Thinking, Fast and Slow, the title of which is plagiarized by this book.

For some reason, people stubbornly refuse to adhere to the norms of reasoning and decision-making postulated by economists. This is not to indict these norms as invalid; but as far as their descriptive adequacy is concerned, Kahneman and Tversky were able to show that they belong squarely in the Platonic realm. Take statistics and probability, for instance. People routinely confuse what is most likely with what they can most easily recall examples of (this is called the availability heuristic, Tversky and Kahneman 1973). Depending on context, they think that there are cases in which A and B together are more likely than A alone (this is called the conjunction fallacy). And virtually everyone prefers driving to flying, which at least keeps the undertakers employed (Sunstein 2005a).
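The rule that the conjunction fallacy violates can be stated in one line. For any two events A and B (the symbols are placeholders, not tied to any particular experiment), the probability that both occur cannot exceed the probability of A alone:

```latex
P(A \wedge B) \;=\; P(A)\,P(B \mid A) \;\le\; P(A),
\qquad \text{since } 0 \le P(B \mid A) \le 1 .
```

However plausible the added detail B makes the story, it can only leave the probability unchanged or lower it.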

Many of Kahneman and Tversky’s insights were modeled in terms of their prospect theory (Kahneman 2003, 703ff.), according to which people’s utility function is an S-shaped curve, with a subtle kink at the origin. This entails that, unlike what is assumed in standard rational choice theory, both gains and losses have diminishing utility, and that losses hurt more than equivalent gains please. The first claim is particularly striking. Think about it: if utility diminishes as it increases, how could disutility diminish as well? It’s hard to make sense of, until one accepts that what matters to people isn’t amounts of utility, but changes in it. At (roughly) the same time, Richard Thaler conducted his first studies on the endowment effect (1980; see also Thaler 2015, 12ff.). He, too, found that people intuitively disvalue losses more than they value gains.
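The shape of such a curve is easier to see with numbers. The following is a minimal sketch, not the model as presented in the works cited above: it assumes a simple power-function form, and the parameter values `ALPHA` and `LAMBDA` are assumptions borrowed from Tversky and Kahneman's later (1992) estimates. The function name `value` is likewise only illustrative.

```python
# Illustrative S-shaped value function with a kink at the origin.
# ASSUMPTIONS: the power-function form and both parameter values are not
# given in this chapter; they follow Tversky and Kahneman's 1992 estimates.
ALPHA = 0.88   # curvature: below 1 means diminishing sensitivity
LAMBDA = 2.25  # loss aversion: above 1 means losses weigh more than gains


def value(change: float) -> float:
    """Subjective value of a gain or loss, measured from the reference point."""
    if change >= 0:
        return change ** ALPHA
    return -LAMBDA * (-change) ** ALPHA


if __name__ == "__main__":
    # Compare gains and losses of the same size, and doubled amounts.
    for change in (100, 200, -100, -200):
        print(f"change {change:+5d} -> subjective value {value(change):9.2f}")
```

Under these assumed parameters, doubling a gain less than doubles its value, doubling a loss less than doubles its sting, and a loss of a given size weighs more than a gain of the same size. All three comparisons are relative to the reference point at zero, which is why changes rather than absolute amounts do the work.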

These mistakes are much like optical illusions. Even when their flaws are exposed, they remain compellingly misleading. But the interesting thing about them is that their flaws can be exposed: when the true answer to a question or the correct solution to a problem is pointed out to them, even laypeople often recognize that their intuition led them astray. This suggests that people are capable of two types of information processing, one swiftly yielding shoddy judgments on the basis of quick-and-dirty rules of thumb, the other occasionally yielding correct judgments on the basis of careful analytic thinking. Keith Stanovich came up with the deliberately non-descriptive System I/System II terminology to capture this distinction whilst avoiding the historical baggage of concepts such as “reason” or “intuition.”

Finally, philosophy made its own contribution, perhaps best exemplified by Jerry Fodor’s (1983) groundbreaking work on the “modularity of mind.” Though not strictly speaking Team Two Systems, Fodor argued that the mind comprises a surprisingly large number of domain-specific modules that frequently carry out one, and only one, specific task, such as facial recognition or guessing the trajectory of falling objects. Fodor identified the following nine characteristics that give a type of processing a claim to modularity:

- domain-specificity

- mandatory operation

- limited accessibility

- quickness

- informational encapsulation

- simple outputs

- specific neural architecture

- characteristic patterns of breakdown

- characteristic pattern of ontogenetic development (38ff.)

System I processes are frequently dedicated to very specific functions, operate on the basis of shallow, inflexible rules and are neither consciously accessible (just how are you able to recognize your mother out of the 100 billion people that have ever lived?) nor cognitively penetrable. The good news is that they require virtually no effort, and can easily run in parallel.

The list of things we are bad at is ineffably long, and includes general domains such as probability, logic, exponential reasoning, large numbers and gauging risks as well as more specific bugs such as hindsight bias, confirmation bias, anchoring effects, framing effects, and so on. In all of these cases, System I routinely comes up with intuitively compelling but demonstrably incorrect pseudo-solutions to a variety of problems. Most of the aforementioned authors are thus strongly in favor of relying on System II as much as possible.

System I and II processes can be distinguished along several dimensions: popular criteria for discriminating between intuitive and non-intuitive thinking have to do with whether an episode of information processing is automatic or controlled, quick or slow, perceptual or analytic, and so forth. It is useful to sort these features into four main “clusters” (Evans 2008, 257) (see Table 1.1).

Table 1.1 Features of Systems I & II: Four Clusters (adapted from Evans 2008, 257)

| System I | System II |
| --- | --- |
| Cluster I (Consciousness) | |
| Unconscious | Conscious |
| Implicit | Explicit |
| Automatic | Controlled |
| Low effort | High effort |
| Rapid | Slow |
| High capacity | Low capacity |
| Default process | Inhibitory |
| Holistic, perceptual | Analytic, reflective |
| Cluster II (Evolution) | |
| Old | Recent |
| Evolutionary rationality | Individual rationality |
| Shared with animals | Uniquely human |
| Nonverbal | Language-dependent |
| Modular | Fluid |
| Cluster III (Functionality) | |
| Associative | Rule-based |
| Domain-specific | Domain-general |
| Contextualized | Abstract |
| Pragmatic | Logical |
| Parallel | Sequential |
| Cluster IV (Individual differences) | |
| Universal | Heritable |
| Independent of general intelligence | Linked to general intelligence |
| Independent of working memory | Limited by working memory capacity |

System I and II processes differ with regard to whether they require conscious control and draw on working memory (Cluster I), how evolutionarily old they are (Cluster II), whether they are implemented through symbolic, frequently language-based thought (Cluster III) and whether one can find strong across-the-board individual differences between them (Cluster IV).

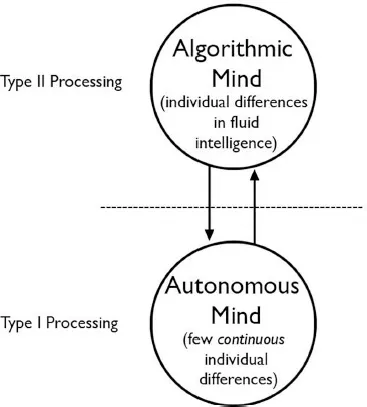

This leads to a straightforward account of the architecture of the mind according to which there are two basic types of information processing (see Figure 1.1).

How these two types of processing relate to each other and whether this account is sufficiently rich is the topic of this book. I will argue, perhaps unsurprisingly, that it is not.

Figure 1.1 Two Systems (adapted from Stanovich 2011, 122)

System I has an essentially economic rationale: it is about saving expensive and scarce cognitive resources. Through training and exercise, the mind is generally very good at allowing tasks that at first require effortful System II thinking to migrate into System I. When this happens, conscious thinking becomes intuitive via habituation. The modularity of the mind is essentially due to the same process, but on a phylogenetic level: mental modules that carry out important domain-specific tasks are evolutionarily accumulated cognitive capital. System II, then, has enabled us to create a specific niche for a Mängelwesen like us to thrive by supplementing the fossilized wisdom of previous generations with a flexible problem-solving mechanism preparing us for the unexpected.

Dual process theorists relish our cognitive shortcomings, but it would be misleading, or at the very least an overstatement, to suggest that our mind simply does not work. What the evidence shows is that our minds are vulnerable, and indeed exploitable; to a large extent, this is due to the fact that we hurled them into an environment they were never supposed to deal with. System I’s efficiency rationale entails that we cannot always think things through with due diligence and care. Most of the time, we cut corners. The way we do this is by deploying heuristics, rough-and-ready cognitive shortcuts that were tailor-made for an environment of evolutionary adaptedness we no longer inhabit: small groups of around 150 people whose members are strongly genetically related, with tight social cohesion and cooperation and, importantly, without the need for understanding the law of large numbers or the diamond/water paradox.

Our cognitive vulnerability stems from the fact that heuristics work on the basis of attribute substitution (Sinnott-Armstrong, Young and Cushman 2010). A given cognitive task implicitly specifies a target attribute, say, the number of deaths from domestic terrorist attacks per year vs. the number of gun-related deaths or how much money the state spends on development aid vs. the military. But this target attribute is difficult to access directly, so we resort to a heuristic attribute such as how easy it is to recall instances of the former (domestic terrorist attacks) compared to instances of the latter (e.g. people you know who have been murdered). Such cognitive proxies often work fine, but in many cases – such as this one – they can go horribly awry, and prompt intuitive judgments that are hopelessly off. In the case of moral judgment, people sometimes seem to substitute “emotionally revolts me” for “is morally wrong” (Schnall et al. 2008; see also Slovic et al. 2002) – with disastrous effects.2

But not everyone is this pessimistic about intuition. Some authors insist that our mind’s proneness to error is essentially an artifact created by overzealous trappers hell-bent on finding flaws in our thinking by exposing unsuspecting participants to ecologically invalid toy problems (Gigerenzer 2008), that is, psychologists. In real-world contexts, on the other hand, relying on intuition is not just inevitable, but frequently constitutes the most adaptive trade-off between efficiency and accuracy (Wilson 2002).

To a certain extent, friends and foes of System I are talking past each other. Some argue that System I performs well under ecologically valid conditions; some show that it performs badly when confronted with novel or unusual tasks. These two claims are only rhetorically at odds with each other, and reflect differences in emphasis more than in substance.

The evolutionary origins of our mind explain another of its important features, which is its essentially inhibitive character. There is a kernel of truth in sen...