
Part 1

AVIATION SAFETY


1 Proactive safety culture: Do we need human factors?

Daniel E. Mauriño

International Civil Aviation Organization (ICAO)

Introduction

The “70% factor” statistic underlies most justifications for the integration of Human Factors knowledge into aviation operations. However, the cause-effect relationship this statistic suggests reflects views on human error prevailing some forty years ago, and it ignores a significant body of recent research on the subject. It is suggested that its conventional rationale is no longer valid. The integration of Human Factors knowledge is essential to aviation not because most safety breakdowns are caused by lapses in human performance, but because error is a normal component of human behaviour. Error is the inevitable downside of human intelligence; it is the price humans pay for being able to “think on their feet”. Error is a “load shedding” mechanism, provided by human cognition, to allow humans to flexibly operate under demanding operational conditions for prolonged periods of time without draining their limited mental batteries (Amalberti, 1996).

Since it is impossible to avoid human error in operational contexts, the aim should be to make the best of its true nature. There is nothing inherently wrong with error itself as a manifestation of human behaviour. The problem with error in aviation lies with its negative consequences in operational contexts. This is a fundamental point: an error which is trapped before it produces damage is operationally inconsequential. For practical purposes, such an error does not exist. The implications in terms of developing a safety culture seem clear: safety processes should aim at error management rather than error avoidance.

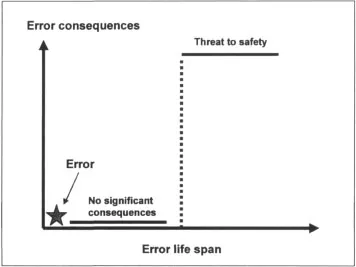

To err is human: Acceptable errors

The notion of acceptable errors underpins proactive error management. Simply put, flaws in human performance and the ubiquity of error are taken for granted, and rather than attempting to improve the human condition, the objective of prevention strategies becomes improving the context within which humans perform (Reason, 1997). Prevention aims - through design, certification, training, management, investigation, procedures and operational monitoring - at fostering operational contexts which introduce a buffer zone or time delay between the commission of errors and the point at which their consequences become a threat to safety (Airbus Industrie, 1997). This buffer zone/time delay allows recovery from the consequences of errors, and the better the quality of the buffer or the longer the time delay, the stronger the intrinsic resistance and tolerance of the operational context to the negative consequences of human error (Figure 1.1).

Figure 1.1

Consequences of human error

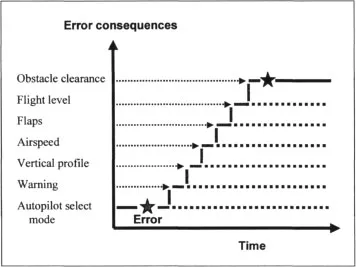

Operational contexts and, most importantly, systems design, should allow operational personnel second chances to recover from the consequences of errors. Figure 1.2 provides an operational example: the crew make a wrong selection in the autopilot mode control panel and the warning to alert the crew about it is not conspicuous. This leads to a deviation in vertical profile, then to a speed excursion, then to an exceedance of the flaps placard speed, then to a busted altitude, and finally to the loss of obstacle clearance altitude. In this case, there is no engineered built-in device to contain the negative consequences of an erroneous autopilot mode selection. A combination of inconspicuous feedback and absence of built-in protection will make it likely that the crew will first learn about their error through the behaviour of the aircraft, when it may be too late.

Figure 1.2

Second chance for error recovery

Since humans are the most flexible component of the aviation system, it is tempting to try to adapt human behaviour - through training - to cope with less-than-optimum human-technology interface design (Helmreich & Merritt, in press). However, the demand in such a case would be for “redline” human performance: levels of alertness, vigilance and cognition which humans can only sustain for brief periods. System design demanding redline human performance is incompatible with the notion of acceptable errors, and when the two are in conflict, systemic flaws will inevitably defeat human performance.

As an aside, should the scenario result in an accident, history suggests that one likely recommendation of the investigation process would be to remind pilots that they must remain vigilant at all times, since they can (through redline performance) do it. One less likely recommendation would be that system design should be modified. In between these two extremes, as an interim measure, it might be recommended that existing procedures be revised to somehow introduce the buffer zone/time delay previously discussed. The first option (reminding pilots to be vigilant) is a “motherhood” recommendation which reactively and obliquely addresses human performance. The second option (system and/or procedures re-design) proposes clear safety action to proactively address well-known and documented limitations in human performance.

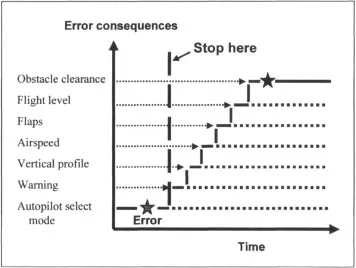

Figure 1.3 revisits the previous example, where the system now has a built-in engineered device which blocks, regardless of human intervention, the negative consequences of an erroneous autopilot mode selection.

Figure 1.3

A system-engineered solution

In this case, the mistaken selection in the autopilot mode control panel is an error which does not produce damaging consequences; therefore, for practical purposes, it does not exist. System demands in this operational context are compatible with “greenline” human performance: levels of alertness, vigilance and cognition well within the performance that properly selected, trained and motivated individuals are able to deliver. System design demanding greenline human performance is compatible with the notion of acceptable errors, and cooperative interfaces will inevitably enhance human performance.
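The contrast between the unprotected and the protected system can be made concrete with a small sketch. It is only an illustration under stated assumptions: the event labels paraphrase the autopilot example in the text, and the function `propagate` and its parameter `barrier_after` are hypothetical names introduced here, not part of any real avionics or safety-analysis tool.

```python
from typing import List, Optional

# Consequence chain paraphrased from the autopilot example in the text.
CHAIN: List[str] = [
    "wrong autopilot mode selected",
    "vertical profile deviation",
    "speed excursion",
    "flaps placard speed exceeded",
    "altitude bust",
    "obstacle clearance lost",
]


def propagate(chain: List[str], barrier_after: Optional[int] = None) -> List[str]:
    """Return the events that actually occur.

    barrier_after is the index of the last event allowed to occur before a
    hypothetical engineered barrier stops the chain. None means the system
    has no built-in protection and the whole chain unfolds.
    """
    if barrier_after is None:
        return list(chain)
    return list(chain[: barrier_after + 1])


# No barrier: the error runs to its final consequence.
unprotected = propagate(CHAIN)

# Barrier immediately after the erroneous selection: the error still occurs,
# but none of its consequences do.
protected = propagate(CHAIN, barrier_after=0)

print(unprotected[-1])
print(protected)
```

In both runs the initial error is present in the result. What changes is only how far its consequences travel, which is the sense in which a trapped error is operationally inconsequential.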

The safety process

Historically, accident investigations have backtracked events under scrutiny until they found actions or inactions by people which generated outcomes different to those intended. At that point, human error was pronounced, the investigation closed and explanations were sought to understand why people were fatigued, confused, overloaded, situationally-unaware and so forth under the particular set of circumstances, and therefore made the error in question.

It is a matter of debate whether such information would contribute prevention value in practical terms. Human performance explanations are valid if formulated with due consideration to the particular context within which performance takes place (Woods, Johannesen, Cook, & Sarter, 1994). It is hardly possible to replicate - and even less likely to extrapolate - operational contexts; the most that can be hoped for is similar scenarios. Workload, for example, is a concept about which generalisations can be extended; contextual circumstances with detrimental effects on workload are not. Sets of scenarios leading to workload might be anticipated, but not all. Safety investigations aimed at the individual which neglect the context evaluate a demonstrated performance against redline human performance: what people did compared with what they could and should have done, given ideal conditions.

A fundamental perspective in the safety process is to consider human error as the starting point rather than the stop-rule (Woods et al., 1994). When the investigation backtracking process arrives at the point at which error is considered to be of relevance to the event under examination, the objective should then become to identify the underlying contextual factors which fostered it. Error can be likened to fever: an indication of illness rather than its cause. Error is a marker announcing problems in the architecture of the aviation system. The obvious place to start looking for these problems is at the interfaces between human and system. This search should include the various human-organisation interfaces relevant to the event under scrutiny, to determine whether the interfaces provided for the buffer zone/time delay essential to allow for recovery from lapses in human performance. This approach would lead to comparing actual performance against greenline human performance, and it would produce information relevant to realistic prevention proposals regarding system and human performance.

A question of substance

The integrated approach to the consideration of organisational processes, management factors and individual human performance during the safety investigation process is the centre of a controversy over which the membership of the safety investigation profession seems to be taking sides, some being sceptical and doubtful, others assertive and convinced. In the final analysis, the controversy is irrelevant, a digression which must not obscure the real underlying question, one of principle and substance: what kind of safety investigation process does the industry need to sustain aviation safety in the year 2000 and beyond? (Maurino, 1998). Contemporary aviation is facing the dilemma of eliminating the residual risk inherent to near-perfect safety. This is no small feat. While there may not be a consensus of opinion as to what should be done, there must be no doubt as to what must not be done: the safety investigation process must not repeat strategies from the past. Regardless of anything else, innovative avenues of action and fresh ideas are essential.

The tools

There are three possible tools to provide for “safety 2000”: accident investigation, incident investigation and normal process monitoring.

There are limitations inherent to the accident investigation process vis-à-vis the needs of “safety 2000”. Accident investigations frequently arrive when it is too late to exercise proactive prevention, since accidents are unique sets of circumstances which belong in the past. They frequently aim at determining where, when and how deviation from rules has taken place, and then causally link such deviation with the safety breakdown under consideration. They frequently assume perfect system design, and that accidents are caused by individual error, sub-standard performance or, in extreme cases, misfits. They are frequently reactive because they work essentially backwards, exercise prevention by design and focus on the outcome at the expense of the processes. They have frequently been successful in identifying symptoms, but not so much causes, of safety breakdowns. This approach can be likened to a funereal protocol, where the objectives are to put losses behind, to reassert trust and faith in the system, to resume normal activities and to fulfil political objectives (Woods, Johannesen, Cook, & Sarter, 1994).

Incident reporting systems are a step forward in the direction of “safety 2000”. It is accepted that incidents are precursors of accidents, and that an N-number of incidents of one “kind” take place before an accident of the same “kind” eventually occurs. There is plenty of common sense evidence to this effect, suggesting that incidents signal weaknesses within the system before the system breaks down (Johnston, 1996). Nevertheless, there are also limitations to the contribution of incident reporting systems to “safety 2000”.

First, incidents are reported in the language of aviation, and therefore capture only the external manifestations of errors: confused a frequency, “busted” an altitude, misunderstood a clearance and so forth. This requires considerable operational elaboration of the “raw data” before it becomes meaningful for prevention purposes. Second and most important, however, is what has been called “normalization of deviance” (Vaughan, 1996). It is an established fact that, over time, operational personnel develop informal group practices and shortcuts to circumvent deficiencies in equipment design, clumsy procedures or policies which complicate operational personnel tasks and are incompatible with operational realities. These informal practices are the product of the collective savoir-faire and expertise of a group and they eventually become normal practices. This does not, however, deny the fact that they are indeed deviations from norms established and sanctioned by the organisation. In most instances normalised deviance not only works but is effective (at least temporarily). Nevertheless, like any shortcut around baseline procedures, it necessarily carries potential for unanticipated “downsides” which might unexpectedly trigger human error. However, since these practices are “normal,” it stands to reason that neither they nor their downsides will be reported to, or captured by, incident reporting systems.

Normalised deviance is further compounded by the fact that even the most willing reporters may not be able to fully appreciate the significance of the factors to report. If operational personnel are continuously exposed to substandard managerial practices, poor working conditions or flawed equipment, they may not recognise such factors as reportable problems (Reason, 1997). Therefore, incident reporting - although certainly much better than accident investigation - is not enough to understand how the aviation system fails and the human contribution to these failures.

The ultimate tool to provide for “safety 200...