Chapter 1

Language Without Grammar

1. INTRODUCTION

The preeminent explanatory challenge for linguistics involves answering one simple question—how does language work? The answer remains elusive, but certain points of consensus have emerged. Foremost among these is the idea that the core properties of language can be explained by reference to principles of grammar. I believe that this may be wrong.

The purpose of this book is to offer a sketch of what linguistic theory might look like if there were no grammar. Two considerations make the enterprise worthwhile—it promises a better understanding of why language has the particular properties that it does, and it offers new insights into how those properties emerge in the course of the language acquisition process.

It is clear of course that a strong current runs in the opposite direction. Indeed, I acknowledge in advance that grammar-based work on language has yielded results that I will not be able to match here. Nonetheless, the possibilities that I wish to explore appear promising enough to warrant investigation. I will begin by trying to make the proposal that I have in mind more precise.

2. SOME PRELIMINARIES

The most intriguing and exciting aspect of grammar-based research on language lies in its commitment to the existence of Universal Grammar (UG), an inborn faculty-specific grammatical system consisting of the categories and principles common in one form or another to all human languages. The best known versions of this idea have been formulated within the Principles and Parameters framework: first Government and Binding theory and more recently the Minimalist Program (e.g., Chomsky 1981, 1995). However, versions of UG are found in a variety of other frameworks as well, including most obviously Lexical Functional Grammar and Head-driven Phrase Structure Grammar. In all cases, the central thesis is the same: Universal Grammar makes human language what it is. I reject this idea.

Instead, I argue that the structure and use of language is shaped by more basic, nonlinguistic forces, an idea that has come to be known in recent years as the emergentist thesis (e.g., Elman 1999, MacWhinney 1999, Menn 2000).1 The idea here is that the core properties of sentences follow from the manner in which they are built. More specifically, I will be proposing that syntactic theory can and should be subsumed by the theory of sentence processing. As I see it, a simple processor, not Universal Grammar, lies at the heart of the human language faculty.

Architects and carpenters

A metaphor may help convey what I have in mind. Traditional syntactic theory focuses its attention on the architecture of sentence structure, which is claimed to comply with a complex grammatical blueprint. In Government and Binding theory, for instance, well-formed sentences have a deep structure that satisfies the X-bar Schema and the Theta Criterion; they have a surface structure that complies with the Case Filter and the Binding Principles; they have a logical form that satisfies the Bijection Principle; and so on (e.g., Chomsky 1981, Haegeman 1994). The question of how sentences with these properties are actually built in the course of speech and comprehension is left to a theory of ‘carpentry’ that includes a different set of mechanisms and principles (parsing strategies, for instance).

My view is different. Put simply, when it comes to sentences, there are no architects; there are only carpenters. They design as they build, limited only by the materials available to them and by the need to complete their work as quickly and efficiently as possible. Indeed, as I will show, efficiency is the driving force behind the design and operation of the computational system for human language. Once identified, its effects can be discerned in the form of syntactic representations, in constraints on coreference, control, agreement, extraction, and contraction, and in the operation of parsing strategies.

My first goal, pursued in the opening chapters of this book, will be to develop a theory of syntactic carpentry that offers satisfying answers to the questions traditionally posed in work on grammatical theory. The particular system that I develop builds and interprets sentences from ‘left to right’ (i.e., beginning to end), more or less one word at a time. In this respect, it obviously resembles a processor, but I will postpone discussion of its exact status until chapter nine. My focus in earlier chapters will be on the more basic problem of demonstrating that the proposed sentence-building system can meet the sorts of empirical challenges presented by the syntax of natural language.

A great deal of contemporary work in linguistic theory relies primarily on English to illustrate and test ideas and hypotheses. With a few exceptions, I will follow this practice here too, largely for practical reasons (I have a strict page limit). Even with a focus on English though, we can proceed with some confidence, as it is highly unlikely that just one language in the world could have its core properties determined by a processor rather than a grammar. If English (or any other language) works that way, then so must every language—even if it is not initially obvious how the details are to be filled in.

I will use the remainder of this first chapter to discuss in a very preliminary way the design of sentence structure, including the contribution of lexical properties. These ideas are fleshed out in additional detail in chapter two. Chapter three deals with pronominal coreference (binding), chapters four and five with the form and interpretation of infinitival clauses (control and raising), and chapter six with agreement. I turn to wh questions in chapter seven and to contraction in chapter eight. Chapters nine and ten examine processing and language acquisition from the perspective developed in the first portion of the book. Some general concluding remarks appear in chapter eleven. As noted in the preface, for those interested in a general exposition of the emergentist idea for syntax, the key chapters are one, three (sections 1 to 3), four, six (sections 1 to 3), eight (sections 1 & 2), and nine through eleven.

Throughout these chapters, my goal will be to measure the prospects of the emergentist approach against the phenomena themselves, and not (directly) against the UG-based approach. A systematic comparison of the two approaches is an entirely different sort of task, made difficult by the existence of many competing theories of Universal Grammar and calling for far more space than is available here. The priority for now lies in outlining and testing an emergentist theory capable of shedding light on the traditional problems of syntactic theory.

3. TWO SYSTEMS

In investigating sentence formation, it is common in linguistics, psychology, and even neurology to posit the existence of two quite different cognitive systems, one dealing primarily with words and the other with combinatorial operations (e.g., Pinker 1994:85, Chomsky 1995:173, Marcus 2001:4, Ullman 2001).2 Consistent with this tradition, I distinguish here between a conceptual-symbolic system and a computational system.

The conceptual-symbolic system is concerned with symbols (words and morphemes) and the notions that they express. Its most obvious manifestation is a lexicon, or mental dictionary. As such, it is associated with what is sometimes called declarative memory, which supports knowledge of facts and events in general (e.g., Ullman 2001:718).

The computational system provides a set of operations for combining lexical items, permitting speakers of a language to construct and understand an unlimited number of sentences, including some that are extraordinarily complex. It corresponds roughly to what we normally think of as syntax, and is arguably an instance of the sort of procedural cognition associated with various established motor and cognitive skills (Ullman ibid.). Don’t be misled by the term computational, which simply means that sentence formation involves the use of operations (such as combination) on symbols (such as words). I am not proposing a computer model of language, although I do believe that such models may be helpful. Nor am I suggesting that English is a ‘computer language’ in the sense deplored by Edelman (1992:243)—‘a set of strings of uninterpreted symbols.’

The conceptual-symbolic and computational systems work together closely. Language could not exist without computational operations, but it is the conceptual-symbolic system that ultimately makes them useful and worthwhile. A brief discussion of how these two systems interact is in order before proceeding.

3.1 The lexicon

A language’s lexicon is a repository of information about its symbols—including, on most proposals, information about their category and their combinatorial possibilities. I have no argument with this view,3 and I do not take it to contradict the central thesis of this book. As I will explain in more detail below, what I object to is the idea that the computational system incorporates a grammar—an entirely different matter.

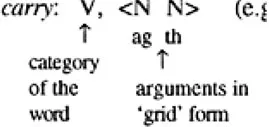

Turning now to a concrete example, let us assume that the verb carry has the type of meaning that implies the existence of an entity that does the carrying and of an entity that is carried, both of which are expressed as nominals. Traditional category labels and thematic roles offer a convenient way to represent these facts. (V=verbal; N=nominal; ag=agent; th=theme.)

(1) (e.g., Harry carried the package.)

Carry thus contrasts with hop, which has the type of meaning that implies a single participant.

I will refer to the elements implied by a word’s meaning as its arguments and to the argument-taking category as a functor, following the terminological practice common in categorial grammar (e.g., Wood 1993). Hence carry is a functor that demands two arguments, while hop is a functor that requires a single argument. In accordance with the tradition in categorial grammar (e.g., Steedman 1996, 2000), I assume that functors are ‘directional’ in that they look either to the left or to the right for their arguments. In English, for example, a verb looks to the left for its first argument and to the right for subsequent arguments, a preposition looks rightward for its nominal argument, and so forth. We can capture these facts by extending a functor’s lexical properties as follows, with arrows indicating the direction in which it looks for each argument. (P=preposition; loc=locative.)

(3) a. (e.g., Harry carried the package.)

Directionality properties such as these cannot account for all aspects of word order, as we will see in chapter seven. However, they suffice for now and permit us to illustrate in a preliminary way the functioning of the computational system.
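For readers who find a data structure easier to hold in mind than a notational convention, the lexical properties just described might be sketched roughly as follows. This is only an illustration, not a claim about how the lexicon is actually represented. The class names (Direction, Argument, Functor), the LEXICON table, and the describe helper are all hypothetical, as are the choice of on as the sample preposition, the assignment of the locative role to its argument, and the assumption that the single argument of hop is an agent.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Direction(Enum):
    """Direction in which a functor looks for an argument."""
    LEFT = "left"
    RIGHT = "right"


@dataclass(frozen=True)
class Argument:
    category: str         # "N" = nominal
    role: str             # "ag" = agent, "th" = theme, "loc" = locative
    direction: Direction  # where the functor looks for this argument


@dataclass(frozen=True)
class Functor:
    form: str
    category: str                    # "V" = verbal, "P" = preposition
    arguments: Tuple[Argument, ...]  # ordered: first argument first

    def arity(self) -> int:
        """Number of arguments implied by the word's meaning."""
        return len(self.arguments)


# Hypothetical mini-lexicon. An English verb looks left for its first
# argument and right for later ones; a preposition looks right.
LEXICON = {
    "carry": Functor("carry", "V", (
        Argument("N", "ag", Direction.LEFT),
        Argument("N", "th", Direction.RIGHT),
    )),
    # Assumption: the single participant of hop is treated as an agent.
    "hop": Functor("hop", "V", (
        Argument("N", "ag", Direction.LEFT),
    )),
    # Assumption: "on" stands in for prepositions generally.
    "on": Functor("on", "P", (
        Argument("N", "loc", Direction.RIGHT),
    )),
}


def describe(word: str) -> str:
    """Render an entry as a flat string, one item per argument."""
    entry = LEXICON[word]
    parts = [
        f"{a.category}:{a.role}:{a.direction.value}" for a in entry.arguments
    ]
    return f"{entry.form}: {entry.category}, <{' '.join(parts)}>"


if __name__ == "__main__":
    for word in LEXICON:
        print(describe(word))
```

The point of the sketch is simply that category, argument requirements, and directionality are all properties of individual lexical entries; nothing in it amounts to a grammatical principle.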

3.2 The computational system

By definition, the computational system provides the combinatorial mechanisms that permit sentence formation. But what precisely is the nature of those mechanisms? The standard view is that they include principles of grammar that regulate phenomena such as structure building, coreference, control, agreement, extraction, and so forth. I disagree with this.

As I see it, the computational system contains no grammatical principles and does not even try to build linguistic structure per se. Rather, its primary task is simply to resolve the lexical requirements, or dependencies, associated with individual words. Thus, among other thin...