This section explores a set of critical issues in thinking about the role of technology, learning, and education in tertiary institutions. Each chapter explores a critical issue. Chapter 1 sets the stage by outlining basic trends in computing, telecommunications, software, and their implications for education. Topics such as changes in computing power, new generations of computing, optical networking, wireless telecommunications, Internet2, as well as new developments in software are explored. Then, some of the critical implications of these trends for the birth and death of universities, learning, and the role of human, organizational, and technological infrastructures for new learning environments are outlined.

Chapter 2 builds on these trends in technology to focus on strategic questions for universities. The analysis begins with an exploration of technology-enabled education in the past, present, and future. Next, the authors focus on a variety of forces changing the nature of tertiary education. These include new private-sector competitors, the decline of local monopolies, Internet2, the changing economics of education, and the drive for lifelong learning. Together, these forces require universities to make new kinds of strategic decisions if they are to survive and enhance their positions.

Chapter 3 argues that learning, not technology, should be the driver of any educational innovation. Our basic focus here is on learning and what we know about how people learn. The next element is the learning task—what should the individual be able to do as a result of their experiences and what knowledge and skills must they acquire? A basic thesis is that new technological learning environments must be congruent with how individuals learn and the nature of the learning task.

Chapter 4 examines strategic decisions about designing the technological infrastructure. The chapter is written for people responsible for designing and implementing infrastructure or allocating resources for it, as well as for its users. A hierarchical model of infrastructure, beginning with the basic physical infrastructure and including components such as facilities and operations, middleware, core applications, and specialized applications, is presented from both technological and social-political perspectives. The analysis aims to frame choices about design, planning, funding, and outsourcing.

Chapter 5 explores the role of the digital library as an integral part of the educational environment. A series of dilemmas is explored, including difficulties in defining user needs in digital libraries, lack of clarity about the role of digital libraries in the teaching-learning process, competing priorities for collection development and preservation, the economics of information, copyright and fair use problems, monetary costs, and the cultural implications of digital libraries. Identifying and delineating these dilemmas gives decision makers a useful guide for the design, funding, and implementation of digital libraries.

Finally, chapter 6 argues that the fundamental issue underlying all these topics is the capability to create effective organizational change among the human, organizational, and technological components. Both the educational and noneducational sectors have a long history of ineffective implementation of technology-related change. Resistance to change from students, professors, administrators, and others speaks to ineffective organizational change. The analysis focuses on three fundamental change processes (planning, implementation, and institutionalization) and on the factors that improve the probability of success in each of these processes and in the change process as a whole. Extensive guides for effective change, along with examples, are also provided.

Chapter 1

Technology Trends and Implications for Learning in Tertiary Institutions

Raj Reddy

Paul S. Goodman

Carnegie Mellon University

Using technology to enable learning through the creation and communication of information is a time-honored tradition. More than 5,000 years ago, the invention of writing spurred the first information revolution, making it possible for one generation to accumulate information and communicate with the generations that followed it. When printing was invented about 500 years ago, the second information revolution began, marked by mass distribution of the printed word. Just 50 years ago, the invention of computers ushered in the third information revolution, making it possible to transform raw data into structured information, to transform that information into knowledge, and to transform knowledge into action using intelligent software agents and robots.

Although the use of computers to enhance learning dates back to the 1960s, these early efforts have not yet had a widespread, systemic impact on education. In this chapter, we examine two questions: What are some current trends in computing and related technologies, and how might these trends influence the education system? We begin with an exploration of technology trends in computing, telecommunications, and software, three classes of technology whose impacts on learning and education are highly interrelated. We then examine some of the implications of these trends for learning environments and educational institutions.

COMPUTING TRENDS

The third information revolution has already transformed the way we live, learn, work, and play, and these changes will likely continue. There are several important trends in computing. First, capacity continues to grow dramatically, with no sign of stopping, while prices keep dropping. Second, computers continue to shrink in size; today, some prototypes are about the size of a couple of coins. Third, computing capabilities are becoming ever more integrated into everyday life, leading to pervasive or even invisible computing, in which access to technology is nearly constant as one moves from place to place.

More Power at Less Cost

The most amazing aspect of the technological revolution is its exponential growth. Over the past 30 years, the computing performance available at a given price has doubled every 18 months, leading to a hundred-fold improvement every 10 years. This means that 18 months from now, we will have produced (and consumed) as much computing power as was created during the past 50 years combined. These exponential increases have occurred in conjunction with a dramatic drop in price: While a supercomputer cost about $10 million in 1990, a machine with the same capabilities can be bought for less than $100,000 today.
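The arithmetic behind these claims can be checked with a short sketch. The function names are illustrative, not taken from the source, and the second function rests on a simplifying assumption: that the computing power produced in each 18-month period is exactly double that of the period before.

```python
def improvement_factor(years: float, doubling_months: float) -> float:
    """Return the growth factor after `years` if capacity doubles every `doubling_months`."""
    return 2 ** (years * 12 / doubling_months)


def next_period_vs_all_past(periods: int) -> float:
    """Ratio of output in the next period to the total output of `periods` past periods.

    Assumes output doubles every period, starting from 1 unit in period 0,
    so past output is 1 + 2 + ... + 2**(periods - 1) = 2**periods - 1.
    """
    past_total = sum(2 ** k for k in range(periods))
    next_period = 2 ** periods
    return next_period / past_total


# Doubling every 18 months for 10 years gives roughly a hundred-fold gain.
print(round(improvement_factor(10, 18)))  # 102
# 50 years is about 33 periods of 18 months; the next period alone
# slightly exceeds everything produced in all earlier periods combined.
print(next_period_vs_all_past(33) > 1)  # True
```

Under this idealized doubling model, the geometric series explains the "next 18 months equals the past 50 years" claim: each new term is one larger than the sum of all the terms before it.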

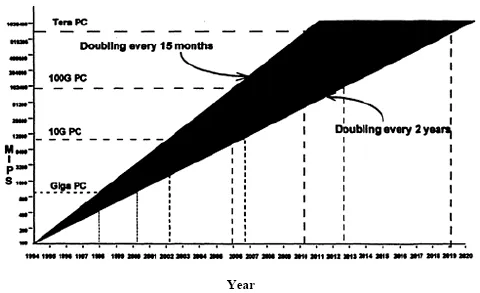

Figure 1.1 illustrates the exponential growth of computing capacity. The Y-axis measures millions of instructions per second (mips) as a function of time, starting from 1994, when this figure was first made. The band shows the expected range of performance in any given year. The lower bound is the performance to be expected if the performance doubled every 24 months, while the upper bound assumes that performance doubles every 15 months. In the year 2000, then, we can expect personal computers to process 800 to 1,600 mips. In the year 2010, we should see systems with the capacity to process more than 50,000 mips, and by 2020, we should see processors that can handle one trillion operations per second—all for the price of a PC today.
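To see how the band in Fig. 1.1 widens over time, the sketch below projects both bounds forward from the year-2000 values quoted above: 800 mips at the slow (24-month) doubling rate and 1,600 mips at the fast (15-month) rate. Treating the year-2000 figures as starting points is our assumption; the figure itself is drawn from a 1994 baseline. The helper name `project_mips` is illustrative.

```python
def project_mips(base_mips: float, base_year: int, year: int, doubling_months: float) -> float:
    """Project performance from `base_year` to `year`, doubling every `doubling_months`."""
    months = (year - base_year) * 12
    return base_mips * 2 ** (months / doubling_months)


# Year-2000 bounds quoted in the text, used here as assumed starting points.
LOWER = (800, 24)   # (mips in 2000, doubling period in months)
UPPER = (1600, 15)

for year in (2000, 2010, 2020):
    low = project_mips(LOWER[0], 2000, year, LOWER[1])
    high = project_mips(UPPER[0], 2000, year, UPPER[1])
    print(f"{year}: {low:,.0f} to {high:,.0f} mips")
```

Under these assumptions, the 2010 band runs from 25,600 to 409,600 mips, so the figure of more than 50,000 mips lies well inside it. One trillion operations per second corresponds to 1,000,000 mips (treating operations and instructions as equivalent), which sits just above the 2020 lower bound of 819,200 mips.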

The expansion in secondary computer memory (disks and their equivalent) will be even more dramatic. While processor and memory technologies have been doubling every 18 months or so since the 1950s, disk densities have been doubling about every 12 months, leading to a thousand-fold improvement every 10 years. Four gigabytes of disk memory (which can be bought today for about $50) will store up to 10,000 books, each averaging 500 pages, which is more than anyone can read in a lifetime. By the year 2010, we should be able to buy four terabytes for about the same price, enough for each of us to store a personal library of several million books and a lifetime collection of music and movies, all on a home computer.
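The storage figures can be cross-checked the same way. The sketch below uses decimal units (1 GB = 10^9 bytes) and the 12-month doubling period quoted above; the per-book and per-page sizes it derives are implied by the text's numbers rather than stated in it.

```python
GB = 10 ** 9
TB = 10 ** 12

BOOKS_IN_4_GB = 10_000
PAGES_PER_BOOK = 500

# Storage per book and per page implied by "4 GB holds 10,000 books of 500 pages".
bytes_per_book = 4 * GB / BOOKS_IN_4_GB
bytes_per_page = bytes_per_book / PAGES_PER_BOOK
print(bytes_per_book, bytes_per_page)  # 400000.0 800.0

# Ten years of doubling every 12 months is 2**10 = 1,024, so 4 GB becomes about 4 TB.
capacity_2010 = 4 * GB * 2 ** 10
print(capacity_2010 / TB)  # 4.096

# How many books of that size fit in 4 TB?
print(round(4 * TB / bytes_per_book))  # 10000000
```

At roughly 800 bytes per page, the estimate implicitly assumes stored text rather than page images; on that basis, four terabytes holds on the order of ten million books, consistent with "several million."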

If you wished, you could capture every word you speak, from birth to your last breath, in a few terabytes. Everything you do and experience could be stored as full-color, three-dimensional, high-definition video in under a petabyte. And if current trends continue, the storage needed to accomplish all of this will cost $100 or less by the year 2025.

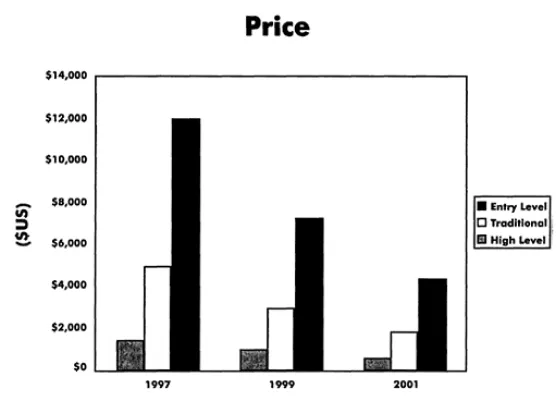

Figures 1.2 through 1.4 show a different visualization of exponential growth coupled with rapidly dropping prices. In a span of four years, the power of entry-level PCs will have gone from 200 mips to 1,600 mips (Fig. 1.2), the memory capacity will have grown from 200 megabytes to more than a gigabyte (Fig. 1.3), and the cost (Fig. 1.4) will have tumbled from $1,500 to just $500.
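The rates implied by Figs. 1.2 through 1.4 can be backed out from the start and end values over the four-year (48-month) span. The sketch below uses the standard relation that a quantity growing by a factor r over t months doubles every t·ln 2 / ln r months; the helper name `doubling_months` is illustrative.

```python
import math


def doubling_months(start: float, end: float, months: float) -> float:
    """Months per doubling (or per halving, if end < start) implied by growth from start to end."""
    return months * math.log(2) / abs(math.log(end / start))


SPAN = 48  # four years, in months

print(round(doubling_months(200, 1600, SPAN), 1))  # processor mips: 16.0
print(round(doubling_months(200, 1000, SPAN), 1))  # memory in MB: 20.7
print(round(doubling_months(1500, 500, SPAN), 1))  # price, halving period: 30.3
```

Because the text says memory grows to "more than a gigabyte," the 20.7-month figure is an upper bound on the memory doubling period. The processor figure of 16 months falls within the 15- to 24-month band of Fig. 1.1.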

FIG. 1.1. Exponential growth trends in computer performance.

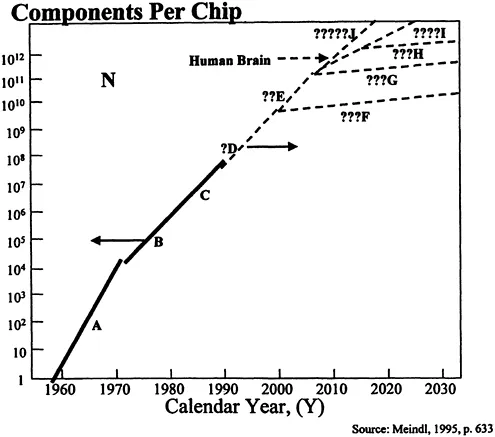

Figure 1.5 shows projections of the number of components per chip as a function of time. If growth follows the same exponential trajectory, we should have a billion components per chip by the year 2000. This density is expected to grow to between 10 and 100 billion components per chip by the year 2010. The two key factors driving this growth are the average minimum feature size and the size of the chip. The feature size is expected to shrink from about 250 nanometers at present to under 100 nanometers by 2010, and the chip is expected to grow from about 20 mm square to close to 100 mm square by 2020.
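One common first-order way to see how these two factors combine (a simplifying assumption on our part, not a model taken from the figure's source) is that the number of components scales with chip area divided by the square of the feature size. The sketch below applies that rule to the feature sizes quoted above; the function name and the doubled-area case are hypothetical.

```python
def component_growth(old_feature_nm: float, new_feature_nm: float, area_ratio: float = 1.0) -> float:
    """First-order growth in components per chip.

    Assumes components scale as chip_area / feature_size**2, ignoring
    any change in how densely circuits are laid out.
    """
    return area_ratio * (old_feature_nm / new_feature_nm) ** 2


# Shrinking features from 250 nm to 100 nm alone gives a 6.25x density gain.
print(component_growth(250, 100))  # 6.25
# If chip area also doubled (a hypothetical case), the gain would be 12.5x.
print(component_growth(250, 100, area_ratio=2.0))  # 12.5
```

Under this simple rule, the shrink to just under 100 nanometers accounts for only part of the projected 10- to 100-fold increase by 2010; the remainder would have to come from larger chips and other improvements.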

Forms of Computing

As time goes on, the same computing power becomes available in ever smaller packages. Although such a machine is not yet available commercially, researchers have created a personal computer the size of two inch-square coins, with every feature of today’s desktop computer packed into that tiny space. Such a computer could conceivably be carried in a pocket or worn on the body. This trend toward smaller, more portable computers is expected to continue for at least another decade, because computing power is expected to keep doubling every 18 to 36 months.

The question, then, is whether computers that are a thousand times more powerful and a thousand times smaller than current PCs will change how we use computers. At one level, the implications will be minimal; at another, they will be significant. If raw computing power is what matters, the functionality is the same whether the machine occupies a cubic foot or a cubic millimeter. On the other hand, having a mobile learning environment in your pocket gives you the flexibility to learn while you are on the move. Wearable computers are an example of how small size, combined with other features, can facilitate learning. Combining wireless technology (discussed in a later section) with small size provides an alternative platform for work: we no longer have to be in a specific office or location. The result is greater mobility, flexibility, and convenience.

FIG. 1.5. Components per chip. Data from “Low power microelectronics: Retrospect and prospect,” by J.C. Meindl, 1995, Proceedings of the IEEE, 83. Copyright © 1995 IEEE by the Institute of Electrical and Electronics Engineers. Adapted with permission.

I am an architecture student working at a building site. Using the wearable computer, I can access information that would inform my design work. Or I am an engineering student working at a factory and need to review information on new scheduling algorithms. This technology enables me to access information where and when I need it.

A basic idea in tracing these trends is to match changes in technology to the form of the learning task. The combination of computing power, small size, and wireless capability matches the requirements of the learning tasks facing the architecture and engineering students, who need information at a specific time and in a specific place. In other scenarios, place or time may not be important. The key idea is to think first about the task and then relate computing functionality to it. This is a basic theme of chapter 3, “Cooperation Between Educational Technology and Learning Theory to Advance Higher Education.”

New Generations of Computing

As computers continue to shrink, researchers are working on the next generation of computing. Different people have different ideas about what form this next step will take, including ubiquitous computing, pervasive computing, and invisible computing.

Ubiquitous computing has primarily concentrated on collaborations in which geographically dispersed participants use technology, such as computerized writing surfaces, to share their ideas with one another in real time. In pervasive computing, users have access to computing power wherever they are, implying global access to personal information regardless of location. This is already seen to some extent: a person traveling from Pittsburgh to San Francisco, for example, needs only a computer with Internet access to reach their e-mail account or to download lecture notes and slides stored on a server thousands of miles away. It is now possible simply to go to a new location equipped with a web-based projection system, type in an Internet address, and access the information needed for a presentation.

Taking these concepts a step further, the basic premise of invisible computing is that as equipment gets smaller and smaller, access to computation and information will be embedded in a universal infrastructure similar to the electrical system. Although you may not see a physical keyboard or screen when you walk into your room, the computers on your body will talk to computers in the wall and figure out what information you are likely to need, want to access, or should be told about immediately. If you need a screen, the painting on the wall might become one. If you need a keyboard, your palm-sized computer might turn into one. If you need a lot of computational power, the high-speed network will give you access to compute servers, memory servers, disk servers, and other capabilities, for which you would pay.

Many of the concepts summarized here will be realized in the next 10 years or so. That does not mean existing desktop computers will disappear; people will continue to use them for two or three decades alongside the newer technologies. Other important research initiatives, such as optical computing, DNA computing, and quantum computing, are still at a very conceptual stage. Although we should pay attention to developments in these areas, they are very unlikely to affect learning environments in the foreseeable future.

TELECOMMUNICATIONS TRENDS

Both of the major trends in telecommunications—optical networking and wireless communication—will have profound impacts on society. What remains to be seen is how quickly we can connect individual homes, rooms, and offices with the new technology, and at what cost.

Optical Networking

In optical networking, sometimes referred to as optical communication, information is carried over a fiber-optic line at one billion to one trillion bits per second. The trend in this area is toward expanding bandwidth, the amount of data that can be sent over a single line. In wavelength division multiplexing, for example, multiple signals are sent simultaneously on different wavelengths of light, each wavelength acting as its own channel and carrying several gigabits of information per second. In the laboratory, researchers have been able to send more than a trillion bits of information on a single fiber, up to 50 times more than all the telephone calls that happen in this country on a single day.
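Because each wavelength in a wavelength division multiplexing system behaves as an independent channel, a fiber's total capacity is roughly the number of wavelengths times the rate per wavelength. The channel count and per-channel rate below are hypothetical values chosen for illustration, not figures from the text, and the function names are illustrative.

```python
GBPS = 10 ** 9  # one gigabit per second, in bits per second


def fiber_capacity_bps(channels: int, per_channel_bps: float) -> float:
    """Aggregate capacity of a WDM fiber: independent wavelengths carried side by side."""
    return channels * per_channel_bps


def transfer_seconds(size_bytes: float, rate_bps: float) -> float:
    """Time to move `size_bytes` at `rate_bps`, ignoring protocol overhead."""
    return size_bytes * 8 / rate_bps


# Hypothetical system: 100 wavelengths at 10 Gbit/s each gives a trillion bits per second.
capacity = fiber_capacity_bps(100, 10 * GBPS)
print(capacity)  # 1000000000000

# Moving the contents of a 4-gigabyte disk over one 1 Gbit/s channel vs. the whole fiber.
print(transfer_seconds(4 * 10 ** 9, 1 * GBPS))  # 32.0
print(transfer_seconds(4 * 10 ** 9, capacity))  # 0.032
```

The multiplication is why adding wavelengths, rather than only speeding up a single signal, is the main route to trillion-bit capacities.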

Unfortunately, although enormous, nearly unlimited bandwidth can be achieved, you can take advantage of it only if a fiber runs to your room, office, home, or learning environment, and that is expensive. This is known as the last mile problem. Several companies, such as Qwest Communications, have already built nationwide fiber-optic networks. Installing cable in a metropolis is more problematic, because the streets must be dug up, with the risk of cutting into power lines and other mishaps. Still, the new telecommunications technology is beginning to spread. In Pittsburgh, it has been announced that fiber will soon be available near homes and businesses, perhaps within two or three miles. If you are the only one who wants the last leg of the connection, it costs around $100,000; but if 100 people in the same neighborhood joined together, the cost would be only $1,000 each. Even then, it will still cost $10 to $15 per month just to use the fiber, not including the infrastructure, computers, and other needed equipment and connections. Taking those into account, unlimited bandwidth will cost about $100 a month.
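The cost-sharing arithmetic for the last leg is an even split of a one-time installation charge. The sketch below assumes the $100,000 figure stays fixed no matter how many households join, which the text implies but does not state outright; the 10-household case and the function name `last_mile_share` are hypothetical, and the monthly usage charges are not modeled.

```python
def last_mile_share(total_cost: float, households: int) -> float:
    """Each household's share of a one-time last-mile connection cost, split evenly."""
    if households < 1:
        raise ValueError("need at least one household")
    return total_cost / households


LAST_LEG_COST = 100_000  # dollars, figure quoted in the text

for n in (1, 10, 100):
    print(n, last_mile_share(LAST_LEG_COST, n))
```

Under this assumption, each household's share falls in inverse proportion to the number of neighbors who join, which is why neighborhood aggregation is attractive for solving the last mile problem.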

Despite the current problems, this trend toward unlimited bandwidth is going to continue, both because it is a natural direction and because the existing copper infrastructure is aging and will need to be replaced over the next 20 years or so. If it is not replaced, the number of repairs the phone company has to make in a month or a year will increase greatly, until the repairs cost more than replacing the lines. At that point, it will make sense to replace the old infrastructure with a new fiber-optic one. The total cost of replacing the nation’s copper infrastructure is estimated at $160 billion. Because that is a large amount of money, some people are worried about the return on the investment. By the time the old infrastructure needs to be replaced, however, unlimited bandwidth should be available at an acceptable cost. Whether fiber will achieve the same penetration as cable or network television is still anyone’s guess.

Wireless Telecommunications

The other major trend is toward wireless communication. Because of the last mile problem, many are wondering whether it would be less costly to send information wirelessly. Right now, wireless transmission and wireless transceivers cost significantly more than wired transmission at high bandwidths, but that may not be the case 5 to 10 years from now.

One issue with wireless communication is speed. For example, Wireless Andrew, Carnegie Mellon University’s new wireless network, is based on wireless Ethernet, which runs at 10 to 11 megabits per second. Although this is relatively good for individual users, the ...