PART I

Introduction

CASE STUDY

Earthquakes in the Project Portfolio

Computer games are a serious business. A game for a current-generation console (say an Xbox® or PlayStation® 3) can cost $20 million or more to build. Even back in the mid-1990s, when my experience with formal project reviews began, a game could easily cost $2 million to develop. The company I worked for had about 60 such games under development at any one time.

My company, like most in the industry, had a problem. Projects slipped. They often slipped by months or even years. This didn’t do a lot to help our reputation with retailers, reviewers and customers. Perhaps even more critically for people who cared about things like making payroll, it made it impossible to predict cashflow. I was part of a team that was set up to bring predictability to project delivery.

Each member of the team was responsible for providing an independent view of the status of about ten projects in the portfolio. Each week we looked at what our projects were producing and tracked this against the original milestone schedules. We tracked the status of key risks. We read status reports. Above all, we talked with the project managers, discussing the issues they were dealing with and listening to their concerns. Sometimes we offered suggestions or put them in touch with other people who were dealing with similar issues, but often we just functioned as a sounding board to help them think through what was going on.

We also produced a weekly report for senior management – the Director of Development and the Chief Financial Officer (CFO). This consisted of a simple ordered listing of the projects under development, ranked by our assessment of their level of risk. We also wrote one or two sentences on each project, summarizing our reasons for its ranking. This report was openly published to everyone in the company, which gave everyone plenty of chance to tell us where we’d got it wrong…

(Interestingly, project managers generally reckoned their project was riskier than we’d assessed it. The project managers’ line management generally thought projects were less risky than we’d assessed them. Either way, people started to actively track the positioning of their project, and to tell us how our ranking of its status could be improved. By publishing our report openly, we created a very useful channel for this information.)

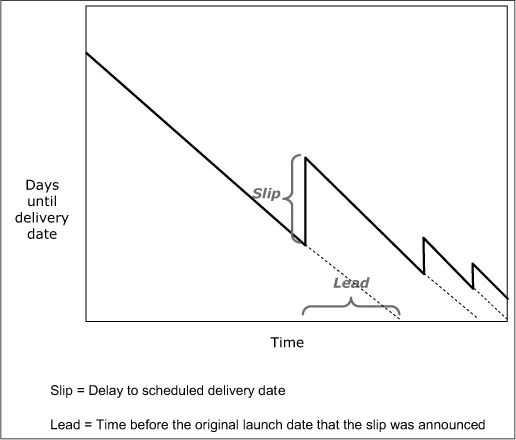

After we’d been working with our projects for a while, we began to recognize a pattern. Projects would go through a couple of fairly formal investment-approval reviews when they were set up. They’d then run quietly for six or 12 months. Then, about three months before the date they were due to be delivered into testing, they’d start to slip. Often they’d have a big slip initially, followed by a series of progressively smaller slips as they got closer to the end date (see Figure S1.1).

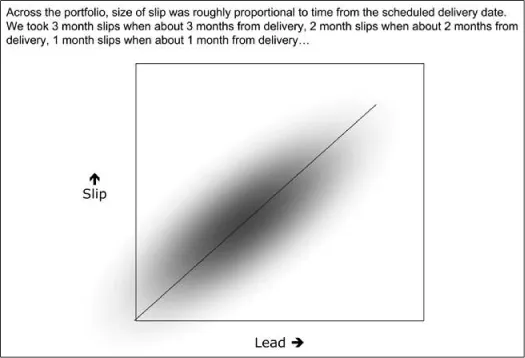

This pattern was remarkably consistent. Because we were working with a portfolio of 60 similar projects, we could draw graphs and start to see statistical trends. We found a strong correlation between the magnitude of each slip and the length of time left until the due date for delivery into testing (see Figure S1.2). For some reason, projects would remain stable for much of their development phase, then suddenly experience a large slip followed by a series of progressively smaller delays.

To me, with my original training in geophysics, this pattern looks a lot like an earthquake. Stress gradually builds up as tectonic plates move. Finally the rocks break, give off a loud bang, and settle into a less strained position. Then a series of aftershocks releases any residual stress. So it was with our projects. For a long time, people could ignore the small setbacks and minor slippages, perhaps hoping to make up the time later. Finally, as the end date loomed, they could no longer avoid reality. So they’d take a big slip to release all the built-up delay. Then the stress would build up again, and they’d take a series of smaller slips to release it.

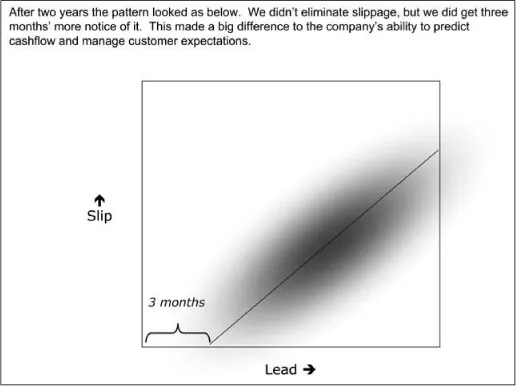

We monitored this pattern as we continued our reviews. After a couple of years, the pattern of slips looked like Figure S1.3. Projects were still slipping. The general pattern of those slips was still pretty much the same. But the slips were happening about three months earlier in the project lifecycle. There were several reasons for this: people were monitoring status more closely; project managers could use the review team to back their judgement as to when a slip was needed, so had confidence to make the call earlier; we’d got better at defining clear milestones. Overall, however, we were simply having a much more informed dialogue about our projects. This helped us to identify and relieve the stresses earlier. Which in turn meant that the CFO could be a little more confident about making payroll.

Figure S1.1 A typical project at the games company

Figure S1.2 Slip was roughly proportional to time from delivery

Figure S1.3 After two years we had three months’ more notice of slip

Of course, life’s never as simple as the case studies make out. In order to operate effectively, the review team needed to overcome a number of challenges. For example:

• Game development teams have a diverse skillset – programmers, graphic artists, musicians, and so on. It can be difficult for a reviewer to understand the status of everything these specialists are working on. By doing small, frequent reviews we could get a good feel for overall trends, but sometimes we needed to call on technical experts from other teams to help us understand the details.

• Reviewers could become isolated. They floated across many teams rather than belonging to any one project. Furthermore, they sometimes had to back their own judgement against the convictions of an entire project team. So we needed to build our own internal mentoring and support structure.

• Everyone wanted to be the first to see our reports. Project managers naturally wanted a chance to fix any issues on their projects before we reported them more widely. The project managers’ line management wanted to know what we were saying before their executive management heard it. And, of course, the executives we reported to wanted to hear of any issues as quickly as possible. We had to evolve clear communication protocols to win everyone’s buy-in.

Over time, I’ve come to realize that not all of life is like games development. Industries have different drivers. Companies have different strategies and approaches. People differ in all sorts of ways. I believe project reviews can add value in virtually all circumstances, but you need to tailor them to the situation. This book contains what I’ve learnt about tailoring and conducting reviews.

CHAPTER 1

Project Success; Project Failure

Projects fail.

Our organizations invest in project management. We train project managers. We adopt project management processes. We look to organizations such as the Project Management Institute (PMI) and the UK-based Association for Project Management (APM) for support and guidance. A wealth of project management methods, bodies of knowledge, accreditations, maturity models, and so on, have been produced and eagerly taken up by practitioners and their employers and clients.

Yet projects still fail.

This is not because project managers are evil or lazy. It’s not because organizations don’t care about projects, nor because project teams don’t want to succeed. Projects are hard. By definition, projects are about non-routine activities. Many of them are large and complex. They may involve many people, often from different backgrounds and increasingly with different languages and cultures. Complex and rapidly changing technology may be critical to success. Budgets are never large enough. There is never enough time. In amongst all this, it is easy for people to get lost, to overlook important trends, to misunderstand each other. So projects fail.

Does this matter? A certain amount of failure is probably inevitable. After all, lack of failure is a sign of lack of ambition. The problem lies in the scale of the failure.

THE COST OF PROJECT FAILURE

The best-known studies of project failure, at least in the information technology (IT) industry where I specialize, are the Chaos Reports. These have been produced by the Standish Group each year since 1994. The first report (Standish Group, 1995) estimated that 175,000 IT application development projects were undertaken in 1994, representing a total investment of US$250 billion. Only 16 per cent of those projects were considered fully successful. Of the remainder, 53 per cent ran over budget, on average spending about twice the originally budgeted cost. Even then they often delivered less than they’d planned. The remaining 31 per cent were cancelled before they’d completed.

That’s an awful lot of failure.

Things are at least improving. In the 2006 survey (Standish Group, 2006), only 19 per cent of projects were cancelled before they’d completed; 35 per cent succeeded. The average cost overrun on the remaining projects was only about 60 per cent. That’s better, but it still represents a vast cost to our organizations, both in wasted development funds and in forgone opportunities.

Outside the IT industry, the percentages may be different. They may even be better. However, every industry can find plenty of horror stories. Look at the construction of Wembley Stadium in London, or the Scottish Parliament, for example, or even the estimation skills of the typical builder. (The percentages may not be that different either. Consider the UK Channel 4 property makeover TV programme Grand Designs (Channel 4): on a dozen projects with an average budget of £225,000, the average cost overrun was 60 per cent.)

These statistics are subject to a number of challenges. Rigorously gathering survey data is expensive, so surveys often suffer from methodological weaknesses and selection biases. Many organizations are reluctant to wash their dirty linen in public, so we don’t hear about some failures. Conversely, newspapers tend to focus on the big failures, so we don’t hear about many successes. Analysts sell their reports by finding newsworthy results, exacerbating this latter bias.

Likewise, the statistics are very sensitive to the definition of success. Is the budget set when someone first sketches out a bright idea on the back of an envelope, or do you baseline from the (probably larger) figure that results from a few weeks of analysis? Say you set up a six-month project with a budget of £1 million and projected benefits of £2 million. After a month of detailed analysis, your team comes back to you and says ‘We’ve found a way to generate £6 million of benefits, but the project is going to take twice as long and cost twice as much.’ By Standish’s definition, this is a failure: it’s 100 per cent over budget. But maybe from your perspective tripling your initial investment is better than doubling it? It all depends on how time-critical the end result is, and what cash you have available to invest.
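The arithmetic behind that example can be sketched in a few lines of Python. This is only an illustration of the figures quoted above: the `Plan` class and the two helper functions are hypothetical names of my own, not part of any Standish method, and the ‘benefit multiple’ is simply projected benefits divided by budgeted cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    """A project plan as described in the text (hypothetical structure)."""
    months: int
    cost_gbp_m: float      # budgeted cost, in £ million
    benefit_gbp_m: float   # projected benefits, in £ million


def cost_overrun_pct(baseline: Plan, revised: Plan) -> float:
    """Percentage by which the revised cost exceeds the baseline budget."""
    return (revised.cost_gbp_m - baseline.cost_gbp_m) / baseline.cost_gbp_m * 100


def benefit_multiple(plan: Plan) -> float:
    """Projected benefits per pound of budgeted cost."""
    return plan.benefit_gbp_m / plan.cost_gbp_m


# Figures taken from the example in the text.
baseline = Plan(months=6, cost_gbp_m=1.0, benefit_gbp_m=2.0)
revised = Plan(months=12, cost_gbp_m=2.0, benefit_gbp_m=6.0)

print(f"Cost overrun: {cost_overrun_pct(baseline, revised):.0f} per cent")
print(f"Baseline benefit multiple: {benefit_multiple(baseline):.1f}")
print(f"Revised benefit multiple: {benefit_multiple(revised):.1f}")
```

Measured against the baseline budget, the revised plan is a 100 per cent overrun. Measured by benefit per pound invested, it moves from doubling the investment to tripling it. Neither calculation accounts for the extra six months, which is why the verdict still depends on how time-critical the result is.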

Nonetheless, no-one really challenges the underlying message. Delayed, underperforming and failed projects represent a tremendous cost for many organizations. This cost comes in many guises. There is the money invested in cancelled projects. There is the cost of failing to bring new products to market. There is the cost of running inefficient operations while waiting for projects to deliver promised improvements to processes and systems. And so on.

These projects also create a tremendous personal cost for project team members, managers, sponsors and other stakeholders. People work long hours as they attempt to recover failing projects. They put other aspects of their lives on hold. Their careers are damaged by association with failure.

THE IMPORTANCE OF PROJECTS

Even if our success rate is improving, as some of the surveys suggest, it’s not obvious that these costs are declining. Many organizations are doing more projects.

It’s hard to gather clear statistics on this growth. Associations such as the PMI and APM can measure growth in their membership and in the number of accreditations they give out. Does this mean we’re doing more projects, or simply that we’ve become better at promoting project management as a profession? Probably a combination of the two. Likewise, the increasing number of articles on project management published in the business literature suggests that organizations see more need to manage projects effectively, as does the proliferation of organizational maturity frameworks such as the Portfolio, Programme and Project Management Maturity Model (P3M3 – Office of Government Commerce (OGC), 2006) and the Organizational Project Management Maturity Model (OPM3 – PMI, 2003). This interest is probably driven by the increasing amounts organizations are investing in projects. Methodologies such as PRINCE2 (OGC, 2005) also note that organizations are dealing with an accelerating rate of change and hence need to do more projects to handle it. Finally, studies such as Wheatley (2005) confirm the trend, but find it difficult to quantify.

Let’s take this on faith for now. Organizations are doing more, and more complex, projects. That certainly accords with my experience, and that of many people I’ve spoken to. A couple of factors lie at the heart of this.

For a start, projects are a way of implementing change. They help us build new processes, new structures, new products, and so on. In a rapidly changing world, the ability to change effectively is an increasingly important strategic capability. The ability to execute projects is part of this capability.

Second, we’ve done many of the easy projects. Where it used to be good enough simply to be able to schedule our fleet of trucks efficiently, we now need to coordinate a supply chain that spreads across many organizations and several continents. Where a simple customer database used to be all we desired, we now need a suite of analytical tools and increasingly flexible business processes to use their results. Where it used to be good enough to deliver individual projects on time and on budget, many organizations are finding that they need to optimize a complex portfolio of projects and programmes in order to compete.

If we are doing more projects, and these projects are increasingly complex, then it’s entirely feasible that the cost of failure is growing even as we get better. We need to get better even faster.

PROJECTS, PROGRAMMES, PORTFOLIOS

Before I go too much further, I need to address some points of terminology. First, does this discussion only apply to projects, or is it relevant to programmes too? What’s the difference between the two?

Here are some working definitions (from APM, 2006):

• A project is ‘a unique, transient endeavour undertaken to achieve a desired outcome’.

• A programme is ‘a group of related projects, which may include business-as-usual activities, that together achieve a beneficial change of a strategic nature for an organization’.

• A portfolio is ‘a grouping of projects, programmes and other activities carried out under the sponsorship of an organization’.

To some people, these definitions are contentious. Diff...