
Chapter 1

Introduction

Developers are increasingly turning to managed languages and run-time systems for the many virtues they offer, from the increased security they bestow on code to the flexibility they provide by abstracting away from the operating system and architecture. The benefits of managed code are widely accepted [Butters, 2007]. Because many services are provided by the virtual machine, programmers have less code to write. Code is safer if it is type-safe and if the run-time system verifies code as it is loaded, checks for resource access violations and the bounds of arrays and other collections, and manages memory automatically. Deployment costs are lower since it is easier to deploy applications to different platforms, even if the mantra ‘write once, run anywhere’ is over-optimistic. Consequently, programmers can spend a greater proportion of development time on the logic of their application.

Almost all modern programming languages make use of dynamic memory allocation. This allows objects to be allocated and deallocated even if their total size was not known at the time that the program was compiled, and even if their lifetime may exceed that of the subroutine activation¹ that allocated them. A dynamically allocated object is stored in a heap, rather than on the stack (in the activation record or stack frame of the procedure that allocated it) or statically (whereby the name of an object is bound to a storage location known at compile or link time). Heap allocation is particularly important because it allows the programmer:

• to choose dynamically the size of new objects (thus avoiding program failure through exceeding hard-coded limits on arrays);

• to define and use recursive data structures such as lists, trees and maps;

• to return newly created objects to the parent procedure (allowing, for example, factory methods);

• to return a function as the result of another function (for example, closures or suspensions in functional languages).
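The four uses listed above can be illustrated with a minimal C++ sketch. All names here (`make_buffer`, `Shape`, `Square`, `make_square`, `make_counter`) are hypothetical and chosen only for illustration; the point is that each returned value outlives the activation that created it, so its storage cannot live in that activation's stack frame.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <memory>
#include <vector>

// Size chosen at run time rather than fixed by a hard-coded array limit.
std::vector<int> make_buffer(std::size_t n) { return std::vector<int>(n, 0); }

struct Shape {
    virtual ~Shape() = default;
    virtual double area() const = 0;
};

struct Square : Shape {
    double side;
    explicit Square(double s) : side(s) {}
    double area() const override { return side * side; }
};

// Factory: the new object is returned to the caller and so must
// outlive this function's activation record.
std::unique_ptr<Shape> make_square(double side) {
    return std::make_unique<Square>(side);
}

// Closure: the counter state is heap allocated because make_counter's
// stack frame no longer exists when the returned function is called.
std::function<int()> make_counter() {
    auto count = std::make_shared<int>(0);
    return [count] { return ++*count; };
}
```

In this sketch the standard library owns the heap storage (`std::vector`, `std::unique_ptr`, `std::shared_ptr`), so no explicit deallocation appears in the code.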

Heap allocated objects are accessed through references. Typically, a reference is a pointer to the object (that is, the address in memory of the object). However, a reference may alternatively refer to an object only indirectly, for instance through a handle which in turn points to the object. Handles offer the advantage of allowing an object to be relocated (updating its handle) without having to change every reference to that object/handle throughout the program.
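A handle can be sketched as an index into a table of object addresses. The `HandleTable` class below is a hypothetical illustration, not a design taken from any particular run-time system: clients keep only the handle, so relocating an object requires updating a single table entry, at the cost of one extra indirection on every access.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

struct Object {
    std::string payload;
};

// Hypothetical handle table: a handle is an index, never a raw address.
class HandleTable {
public:
    using Handle = std::size_t;

    // Allocate an object and return a handle that refers to it indirectly.
    Handle create(std::string payload) {
        slots_.push_back(std::make_unique<Object>(Object{std::move(payload)}));
        return slots_.size() - 1;
    }

    // Every access goes through the table entry for the handle.
    Object& deref(Handle h) { return *slots_.at(h); }

    // Move the object to fresh storage. Only the table entry changes;
    // handles held elsewhere in the program remain valid.
    void relocate(Handle h) {
        auto moved = std::make_unique<Object>(std::move(*slots_.at(h)));
        slots_[h] = std::move(moved);
    }

private:
    std::vector<std::unique_ptr<Object>> slots_;
};
```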

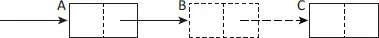

Figure 1.1: Premature deletion of an object may lead to errors. Here B has been freed. The live object A now contains a dangling pointer. The space occupied by C has leaked: C is not reachable but it cannot be freed.

1.1 Explicit deallocation

Any non-trivial program, running in a finite amount of memory, will need from time to time to recover the storage used by objects that are no longer needed by the computation. Memory used by heap objects can be reclaimed using explicit deallocation (for example, with C’s free or C++’s delete operator) or automatically by the run-time system, using reference counting [Collins, 1960] or a tracing garbage collector [McCarthy, 1960]. Manual reclamation risks programming errors; these may arise in two ways.

Memory may be freed prematurely, while there are still references to it. Such a reference is called a dangling pointer (see Figure 1.1). If the program subsequently follows a dangling pointer, the result is unpredictable. The application programmer has no control over what happens to deallocated memory, so the run-time system may choose, among other options, to clear (fill with zeroes) the space used by the deleted object, to allocate a new object in that space or to return that memory to the operating system. The best that the programmer can hope for is that the program crashes immediately. However, it is more likely that it will continue for millions of cycles before crashing (making debugging difficult) or simply run to completion but produce incorrect results (which might not even be easy to detect). One way to detect dangling references is to use fat pointers. These can be used to hold the version number of their target as well as the pointer itself. Operations such as dereferencing must then check that the version number stored in the pointer matches that stored in the object. However, this approach is mostly restricted to use with debugging tools because of its overhead, and it is not completely reliable.²
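The version check performed by fat pointers can be sketched as follows. This is a simplified model under stated assumptions: `CheckedHeap`, `Slot` and `FatPtr` are hypothetical names, objects live in a fixed array of reusable slots, and the version number is kept in a slot header that survives deallocation. Dereferencing compares the version held in the pointer with the version held in the slot.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// Hypothetical slot: a small header plus the object's data.
struct Slot {
    std::uint32_t version = 0;  // bumped each time the slot is released
    bool in_use = false;
    int value = 0;
};

// A fat pointer: the location plus the version seen at allocation time.
struct FatPtr {
    std::size_t index;
    std::uint32_t version;
};

class CheckedHeap {
public:
    explicit CheckedHeap(std::size_t n) : slots_(n) {}

    // Returns an empty optional when no slot is free.
    std::optional<FatPtr> allocate(int value) {
        for (std::size_t i = 0; i < slots_.size(); ++i) {
            if (!slots_[i].in_use) {
                slots_[i].in_use = true;
                slots_[i].value = value;
                return FatPtr{i, slots_[i].version};
            }
        }
        return std::nullopt;
    }

    // Releasing bumps the version, which invalidates outstanding fat pointers.
    void release(FatPtr p) {
        if (valid(p)) {
            slots_[p.index].in_use = false;
            ++slots_[p.index].version;
        }
    }

    bool valid(FatPtr p) const {
        return p.index < slots_.size() && slots_[p.index].in_use &&
               slots_[p.index].version == p.version;
    }

    // Checked dereference: nullptr signals a dangling fat pointer.
    int* deref(FatPtr p) { return valid(p) ? &slots_[p.index].value : nullptr; }

private:
    std::vector<Slot> slots_;
};
```

Every dereference pays for the comparison, which reflects the overhead mentioned above. In this sketch the check would also be defeated if the 32-bit version counter wrapped around to a value still held by a stale pointer.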

The second kind of error is that the programmer may fail to free an object no longer required by the program, leading to a memory leak. In small programs, leaks may be benign but in large programs they are likely to lead either to substantial performance degradation (as the memory manager struggles to satisfy new allocation requests) or to failure (if the program runs out of memory). Often a single incorrect deallocation may lead to both dangling pointers and memory leaks (as in Figure 1.1).

Programming errors of this kind are particularly prevalent in the presence of sharing, when two or more subroutines may hold references to an object. This is even more problematic for concurrent programming when two or more threads may reference an object. With the increasing ubiquity of multicore processors, considerable effort has gone into the construction of libraries of data structures that are thread-safe. Algorithms that access these structures need to guard against a number of problems, including deadlock, livelock and ABA errors.³ Automatic memory management eases the construction of concurrent algorithms significantly (for example, by eliminating certain ABA problems). Without this, programming solutions are much more complicated [Herlihy and Shavit, 2008].

The issue is more fundamental than simply being a matter of programmers needing to take more care. Difficulties of correct memory management are often inherent to the programming problem in question.⁴ More generally, safe deallocation of an object is complex because, as Wilson [1994] points out, “liveness is a global property”, whereas the decision to call free on a variable is a local one.

So how do programmers cope in languages not supported by automatic dynamic memory management? Considerable effort has been invested in resolving this dilemma. The key advice has been that programmers should be consistent in the way that they manage the ownership of objects [Belotsky, 2003; Cline and Lomow, 1995]. Belotsky [2003] and others offer several possible strategies for C++. First, programmers should avoid heap allocation altogether, wherever possible. For example, objects can be allocated on the stack instead. When the objects’ creating method returns, the popping of the stack will free these objects automatically. Secondly, programmers should pass and return objects by value, by copying the full contents of a parameter/result rather than by passing references. Clearly both of these approaches remove all allocation/deallocation errors but they do so at the cost of both increased memory pressure and the loss of sharing. In some circumstances it may be appropriate to use custom allocators, for example, ones that manage a pool of objects. At the end of a program phase, the entire pool can be freed as a whole.
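The pool strategy can be sketched in a few lines. `PhasePool` and `Token` are hypothetical names, and this is only one possible shape for such an allocator: objects created during a phase are owned by the pool and are never freed individually, so the only deallocation decision is the single call that ends the phase.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical pool for one program phase.
template <typename T>
class PhasePool {
public:
    // Construct an object owned by the pool and return a non-owning pointer.
    template <typename... Args>
    T* create(Args&&... args) {
        objects_.push_back(std::make_unique<T>(std::forward<Args>(args)...));
        return objects_.back().get();
    }

    std::size_t live() const { return objects_.size(); }

    // End of phase: every object in the pool is destroyed together.
    void release_all() { objects_.clear(); }

private:
    std::vector<std::unique_ptr<T>> objects_;
};

// Example payload that reports construction and destruction through an
// external counter, so that the effect of release_all can be observed.
struct Token {
    int id;
    int* alive;
    Token(int i, int* counter) : id(i), alive(counter) { ++*alive; }
    ~Token() { --*alive; }
    Token(const Token&) = delete;
    Token& operator=(const Token&) = delete;
};
```

Pointers returned by `create` become dangling once `release_all` runs, so this approach relies on the programmer knowing that no reference into the pool survives the phase.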

C++ has seen several attempts to use special pointer classes and templates to improve memory management. These overload normal pointer operations in order to provide safe storage reclamation. However, such smart pointers have several limitations. The auto_ptr class template cannot be used with the Standard Template Library and will be deprecated in the expected next edition of the C++ standard [Boehm and Spertus, 2009].⁵ It will be replaced by an improved unique_ptr that provides strict ownership semantics that allow the target object to be deleted when the unique pointer is. The standard will also include a reference counted shared_ptr,⁶ but these also have limitations. Reference counted pointers are unable to manage self-referential (cyclic) data structures. Most smart pointers are provided as libraries, which restricts their applicability if efficiency is a concern. Possibly, they are most appropriately used to manage very large blocks, references to which are rarely assigned or passed, in which case they might be significantly cheaper than tracing collection. On the other hand, without the cooperation of the compiler and run-time system, reference counted pointers are not an efficient, general purpose solution to the management of small objects, especially if pointer manipulation is to be thread-safe.
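The limitation with cyclic structures can be observed directly with `std::shared_ptr` and `std::weak_ptr`. In the sketch below, `Node`, `make_cycle` and `make_chain` are hypothetical names; each function drops its local references and returns a non-owning observer, so the caller can see whether the structure was reclaimed.

```cpp
#include <cassert>
#include <memory>

struct Node {
    std::shared_ptr<Node> next;  // owning, reference counted pointer
};

// Builds a two-node cycle and returns an observer of the first node.
// Each node keeps the other's count above zero after the locals are gone.
std::weak_ptr<Node> make_cycle() {
    auto a = std::make_shared<Node>();
    auto b = std::make_shared<Node>();
    a->next = b;
    b->next = a;
    return std::weak_ptr<Node>(a);  // weak_ptr does not affect the count
}

// Builds an acyclic chain; dropping the local references frees both nodes.
std::weak_ptr<Node> make_chain() {
    auto a = std::make_shared<Node>();
    auto b = std::make_shared<Node>();
    a->next = b;
    return std::weak_ptr<Node>(a);
}
```

The cycle is unreachable from the program's own variables yet is never freed unless the programmer breaks it by hand, for example by resetting one of the `next` fields.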

The plethora of strategies for safe manual memory management throws up yet another problem. If it is essential for the programmer to manage object ownership consistently, which approach should she adopt? This is particularly problematic when using library code. Which approach does the library take? Do all the libraries used by the program use the same approach?

1.2 Automatic dynamic memory management

Automatic dynamic memory management resolves many of these issues. Garbage collection (GC) prevents dangling pointers being created: an object is reclaimed only when there is no pointer to it from a reachable object. Conversely, in principle all garbage is guaranteed to be freed — any object that is unreachable will eventually be reclaimed by the collector — with two caveats. The first is that tracing collection uses a definition of ‘garbage’ that is decidable and may not include all objects that will never be accessed again. The second is that in practice, as we shall see in later chapters, garbage collector implementations may choose for efficiency reasons not to reclaim some objects. Only the collector releases objects so the double-freeing problem cannot arise. All reclamation decisions are deferred to the collector, which has global knowledge of the structure of objects in the heap and the threads that can access them. The problems of explicit deallocation were largely due to the difficulty of making a global decision in a local context. Automatic dynamic memory management simply finesses this problem.
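The reachability criterion can be made concrete with a small sketch. It assumes a deliberately simplified model that is not taken from the text: objects are numbered, `edges[i]` lists the objects that object `i` points to, and `roots` lists the objects referenced directly by the program. The function name `mark_reachable` is hypothetical. Anything left unmarked is garbage under the definition above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical heap model: object i points to the objects in edges[i].
using Heap = std::vector<std::vector<std::size_t>>;

// Mark every object reachable from the roots by following pointers.
std::vector<bool> mark_reachable(const Heap& edges,
                                 const std::vector<std::size_t>& roots) {
    std::vector<bool> marked(edges.size(), false);
    std::vector<std::size_t> worklist(roots.begin(), roots.end());
    while (!worklist.empty()) {
        std::size_t obj = worklist.back();
        worklist.pop_back();
        if (marked[obj]) continue;  // already visited
        marked[obj] = true;
        for (std::size_t target : edges[obj]) {
            if (!marked[target]) worklist.push_back(target);
        }
    }
    return marked;
}
```

Because the decision is made from the roots outward over the whole object graph, an unreachable cycle is identified as garbage here, whereas each of its members would still hold a non-zero reference count.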

Above all, memory management is a software engineering issue. Well-designed programs are built from components (in the loosest sense of ...