- 362 pages

- English

- ePUB (mobile friendly)


About this book

Welcome to the second volume of Game Audio Programming: Principles and Practices – the first series of its kind dedicated to the art of game audio programming! This volume features more than 20 chapters containing advanced techniques from some of the top game audio programmers and sound designers in the industry. This book continues the tradition of collecting more knowledge and wisdom about game audio programming than any other volume in history.

Both audio programming beginners and seasoned veterans will find content in this book that is valuable, with topics ranging from extreme low-level mixing to high-level game integration. Each chapter contains techniques that were used in games that have shipped, and there is a plethora of code samples and diagrams. There are chapters on threading, DSP implementation, advanced middleware techniques in FMOD Studio and Audiokinetic Wwise, ambiences, mixing, music, and more.

This book has something for everyone who is programming audio for a game: programmers new to the art of audio programming, experienced audio programmers, and those souls who just got assigned the audio code. This book is for you!


CHAPTER 1

Life Cycle of Game Audio

CONTENTS

- 1.1 Preamble

- 1.2 Audio Life Cycle Overview

- 1.2.1 Audio Asset Naming Convention

- 1.2.2 Audio Asset Production

- 1.3 Preproduction

- 1.3.1 Concept and Discovery Phase—Let’s Keep Our Finger on the Pulse!

- 1.3.2 Audio Modules

- 1.3.3 The Audio Quality Bar

- 1.3.4 The Audio Prototypes

- 1.3.5 First Playable

- 1.3.6 Preproduction Summary

- 1.4 Production

- 1.4.1 Proof of Concept Phase—Let’s Build the Game!

- 1.4.2 The Alpha Milestone

- 1.4.3 Production Summary

- 1.5 Postproduction

- 1.5.1 Optimization, Beautification, and Mixing—Let’s Stay on Top of Things!

- 1.5.2 The Beta Milestone

- 1.5.3 Postproduction Summary

- 1.6 Conclusion

- 1.7 Postscript

- Reference

1.1 PREAMBLE

1.2 AUDIO LIFE CYCLE OVERVIEW

AudioEntity components, set GameConditions, and define GameParameters.

- Preproduction → Milestone First Playable (Vertical Slice)

- Production → Milestone Alpha (Content Complete)

- Postproduction → Milestone Beta (Content Finalized)

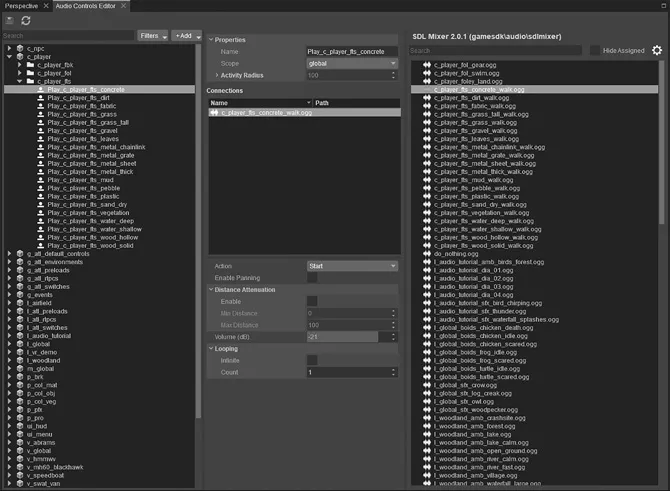

AudioEvent) which holds the specific AudioAssets and all relevant information about its playback behavior, such as volume, positioning, pitch, or other parameters we would like to control in real time. This abstraction layer allows us to change the event data (e.g., how a sound attenuates over distance) without touching the audio data (e.g., the actual audio asset), and vice versa. The result is a data-driven system in which the audio container provides all the components needed for playback in the game engine. Figure 1.1 shows how this works with the audio controls editor in CryEngine.

AudioControlsEditor, which functions like a patch bay where all parameters, actions, and events from the game are listed and communicated. Once we create a connection and wire the respective parameter, action, or event to the one on the audio middleware side, this established link can often remain for the rest of the production while we continue to tweak and change the underlying values and assets.
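The separation between event data and audio data, and the patch-bay style connection between game and middleware, can be illustrated with a minimal sketch. This is not CryEngine's or any middleware's API: the `PatchBay` class, its methods, the field names, and the trigger name `boss_arena_entered` are all hypothetical, chosen only to show that playback parameters can be retuned while the asset and the connection stay untouched.

```python
from dataclasses import dataclass, replace
from typing import Dict, Tuple


@dataclass(frozen=True)
class AudioAsset:
    """The audio data: a reference to an exported file."""
    filename: str


@dataclass(frozen=True)
class AudioEvent:
    """The event data: which assets to play and how to play them."""
    name: str
    assets: Tuple[AudioAsset, ...]
    volume_db: float = 0.0
    pitch_semitones: float = 0.0
    max_distance_m: float = 50.0  # hypothetical attenuation parameter


class PatchBay:
    """Hypothetical stand-in for a controls editor: wires triggers to events."""

    def __init__(self) -> None:
        self.events: Dict[str, AudioEvent] = {}
        self.connections: Dict[str, str] = {}

    def register(self, event: AudioEvent) -> None:
        # Registering under an existing name replaces the event data in place.
        self.events[event.name] = event

    def connect(self, trigger: str, event_name: str) -> None:
        self.connections[trigger] = event_name

    def resolve(self, trigger: str) -> AudioEvent:
        return self.events[self.connections[trigger]]


bay = PatchBay()
stinger = AudioAsset("boss_music_stinger.wav")
bay.register(AudioEvent("Play_Boss_Music_Stinger", (stinger,)))
bay.connect("boss_arena_entered", "Play_Boss_Music_Stinger")

# Later in production: retune attenuation only. Asset and connection are untouched.
original = bay.resolve("boss_arena_entered")
bay.register(replace(original, max_distance_m=80.0))
tuned = bay.resolve("boss_arena_entered")
```

The connection is made once and keeps resolving to the current event data, which mirrors the idea that an established link can survive while the underlying values change.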

1.2.1 Audio Asset Naming Convention

AudioControls, several hundred AudioEvents, and thousands of AudioAssets contained in them requires a fair amount of discipline and a solid naming convention. We try to keep the naming consistent throughout the pipeline, so the name of the AudioTrigger represents the name of the AudioEvent (which in turn reflects the name of the AudioAssets it uses), along with the behavior (e.g., Start/Stop of an AudioEvent). By using one-letter identifiers (e.g., w_ for weapon) and abbreviations that keep the filename length in check (e.g., pro_ for projectile), we are able to keep a solid overview of the audio content of our projects.

1.2.2 Audio Asset Production

- Create audio asset: An audio source recorded with a microphone or taken from an existing library, edited and processed in a DAW, and exported into the appropriate format (e.g., boss_music_stinger.wav, 24 bit, 48 kHz, mono).

- Create audio event: An exported audio asset implemented into the audio middleware in a way a game engine can execute it (e.g., Play_Boss_Music_Stinger).

- Create audio trigger: An in-game entity which triggers the audio event (e.g., “Player enters area trigger of boss arena”).
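The three steps above, combined with the naming convention from Section 1.2.1, can be sketched as a small set of helpers. Everything here is an assumption made for illustration: the function names, the `.wav`-only filename pattern, and the rule that an event name is the action followed by the capitalized words of the asset name (inferred from the single boss_music_stinger.wav / Play_Boss_Music_Stinger example). The word "impact" is a made-up asset description; only the w_ and pro_ abbreviations come from the text.

```python
import re
from pathlib import PurePosixPath

# Prefixes and abbreviations quoted in the text; a real project would list more.
CATEGORY_PREFIXES = {"weapon": "w"}
ABBREVIATIONS = {"projectile": "pro"}

# Assumed filename shape: lowercase words joined by underscores, .wav extension.
ASSET_PATTERN = re.compile(r"[a-z0-9]+(_[a-z0-9]+)*\.wav")


def asset_filename(category: str, *words: str) -> str:
    """Step 1: build an asset filename from a category and descriptive words."""
    parts = [CATEGORY_PREFIXES[category]]
    parts += [ABBREVIATIONS.get(word, word) for word in words]
    return "_".join(parts) + ".wav"


def event_name(asset: str, action: str = "Play") -> str:
    """Step 2: derive the event name from the asset name plus its behavior."""
    if not ASSET_PATTERN.fullmatch(asset):
        raise ValueError(f"asset name breaks the convention: {asset!r}")
    stem = PurePosixPath(asset).stem
    return "_".join([action] + [word.capitalize() for word in stem.split("_")])


# Step 3: game-side triggers mapped to the events they fire.
triggers = {
    "Player enters area trigger of boss arena": event_name("boss_music_stinger.wav"),
}

print(asset_filename("weapon", "projectile", "impact"))
print(triggers["Player enters area trigger of boss arena"])
```

Deriving the event name from the asset name, rather than typing both by hand, is one way to keep the two from drifting apart; rejecting names that break the pattern catches mistakes at the point where an asset enters the pipeline.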

1.3 PREPRODUCTION

1.3.1 Concept and Discovery Phase—Let’s Keep Our Finger on the Pulse!

1.3.2 Audio Modules

- Cast—characters and AI-related information

- Player—player-relevant information

- Levels—missions and the game world

- Environment—possible player interactions with the game world

- Equipment—tools and gadgets of the game characters

- Interface—HUD-related requirements

- Music—music-relevant information

- Mix—mixing-related information

1.3.3 The Audio Quality Bar

Table of contents

- Cover

- Halftitle Page

- Title Page

- Copyright Page

- Dedication Page

- Contents

- Preface

- Acknowledgments

- Editor

- Contributors

- Chapter 1 Life Cycle of Game Audio

- Chapter 2 A Rare Breed: The Audio Programmer

- Part I Low-Level Topics

- Part II Middleware

- Part III Game Integration

- Part IV Music

- Chapter 20 Note-Based Music Systems

- Chapter 21 Synchronizing Action-Based Gameplay to Music

- Index
