Microprocessing and the Nanolevel in the Neurodynamics of Consciousness

8

Beyond Single Unit Recording: Characterizing Neural Information in Networks of Simultaneously Recorded Neurons

John K. Chapin1 and Miguel A. L. Nicolelis2

1 Dept. of Anatomy and Neurobiology,

Medical College of Pennsylvania and Hahnemann University

Broad and Vine Sts, Philadelphia, PA 19102

[email protected]

2 Dept. of Neurobiology, Box 3209

Duke University Medical Center

Durham, NC 27710

[email protected]

INTRODUCTION

Over the past half century, a number of neurophysiological techniques, including electroencephalography and neuron recording, have been used to assess brain functions in awake humans or animals. While these have provided much useful information, they have not yet yielded the critical insights necessary to understand the mechanism of conscious experience. We argue here that this may be due to a general lack of appreciation in the neuroscience community for the importance of dynamic and distributed function in neuronal populations of the brain.

For more than 30 years, the accepted approach to studying functional brain circuits has been to record individual neurons, one at a time, usually in anesthetized animals. We maintain that this serial single neuron recording approach is not only inefficient but is intrinsically unsuited for characterizing mechanisms of information processing in neural circuits, especially in the conscious animal. Any investigator who has carried out single unit recording, especially at higher levels of the CNS, can attest to the fact that spiking activity of single neurons can be highly variable, approximating a near-Poisson noise spectrum [22, 28, 29, 32]. Furthermore, the spiking rates of most brain neurons are too low (from 1 to 40 Hz) to provide the necessary accuracy and dynamic range for fine coding of information on a real-time basis. For these reasons, recorded neuronal activity is typically averaged over large numbers of stimulus repetitions to obtain statistical validity. While some neurons may be shown to discharge significantly above baseline within single repetitions of a stimulus, this firing never contains sufficient information to code all features of the stimulus. Nonetheless, the brain as a whole is capable of extremely fine resolution of a wide range of stimulus information over very short time periods.
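The statistical argument above can be illustrated with a minimal sketch. It assumes idealized, independent Poisson neurons, which is a simplification of the "near-Poisson" behavior cited in the text; the function names, the 50 ms window, and the pool of 100 neurons are hypothetical choices made only for illustration. Under that assumption, the coefficient of variation (CV) of a spike count is 1/sqrt(expected spikes), so a single 40 Hz neuron observed for 50 ms is very noisy, while a pooled count from many such neurons is far more reliable.

```python
import math
import random


def poisson_cv(rate_hz, window_s, n_neurons=1):
    """Analytic CV of a spike count pooled over independent Poisson neurons."""
    expected_spikes = n_neurons * rate_hz * window_s
    return 1.0 / math.sqrt(expected_spikes)


def sample_poisson(lam, rng):
    """Draw one Poisson-distributed count (Knuth's multiplication method)."""
    threshold = math.exp(-lam)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def simulated_cv(rate_hz, window_s, n_neurons, n_trials, seed=0):
    """Estimate the CV of the pooled spike count from simulated trials."""
    rng = random.Random(seed)
    lam = rate_hz * window_s  # expected spikes per neuron per window
    counts = [
        sum(sample_poisson(lam, rng) for _ in range(n_neurons))
        for _ in range(n_trials)
    ]
    mean = sum(counts) / n_trials
    var = sum((c - mean) ** 2 for c in counts) / (n_trials - 1)
    return math.sqrt(var) / mean


if __name__ == "__main__":
    # One neuron versus a hypothetical pool of 100 neurons, same 50 ms window.
    print("single neuron, analytic CV:", round(poisson_cv(40, 0.05), 3))
    print("100 neurons, analytic CV:  ", round(poisson_cv(40, 0.05, 100), 3))
    print("single neuron, simulated:  ", round(simulated_cv(40, 0.05, 1, 2000), 3))
    print("100 neurons, simulated:    ", round(simulated_cv(40, 0.05, 100, 500), 3))
```

In this idealized setting, pooling 100 neurons reduces the relative variability of the count by a factor of 10. Correlated noise among real neurons would reduce that benefit, which the sketch does not model.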

The above facts alone provide convincing evidence that the brain cannot depend on any single neuron to obtain its information and, therefore, must simultaneously utilize large populations of neurons. It is surprising that so little attention has been paid to the problem of information coding in real time by populations of neurons. Instead, much current thinking about brain function tends to focus on the level of single neurons and not populations. As an example, recent explanations of the mechanisms underlying sleep spindles focus almost exclusively on the properties of calcium currents in certain neurons of the thalamus [38]. Though clear evidence exists that these phenomena depend on dynamically complex interactions between neurons in different nuclei [39], the mechanism of spindling at the level of neuronal networks remains little examined.

Similarly, current theories of somatosensory system function (the focus of this paper) are strongly biased by thinking based at the single cell level, rather than on the level of neuronal populations. This field has tended to extrapolate the highly specific sensory properties of peripheral nerve fibers to function at higher levels of the somatosensory system. As a result, the dominant theory of this system still holds that the somatosensory cortex does little more than represent, with high fidelity, a static map of the distribution of peripheral receptors [21]. Unfortunately, little effort has been directed toward understanding how sensory processing could be carried out in a system which merely replicates the organization of sensory receptors.

Our studies of the rat somatosensory system have repeatedly demonstrated a contrasting view: at thalamic and cortical levels, receptive fields are not only remarkably large and spatiotemporally complex [8, 9, 23-26], but are also dynamically modified as a function of changes in behavioral state [12, 13, 34, 35] and in the balance of peripheral input [24]. Such findings are consistent with the known connectivity of this system, which is feedforward divergent and also includes profusely connecting feedback systems emanating from the cortex [1, 2, 7, 11]. The hypothesis to be developed here is that information in the somatosensory system is represented in a dynamic and distributed fashion, and that measuring information processing in such a system requires simultaneous recordings of large samples of single neurons distributed at multiple levels of the system.

The Case for Distributed Coding in the Brain

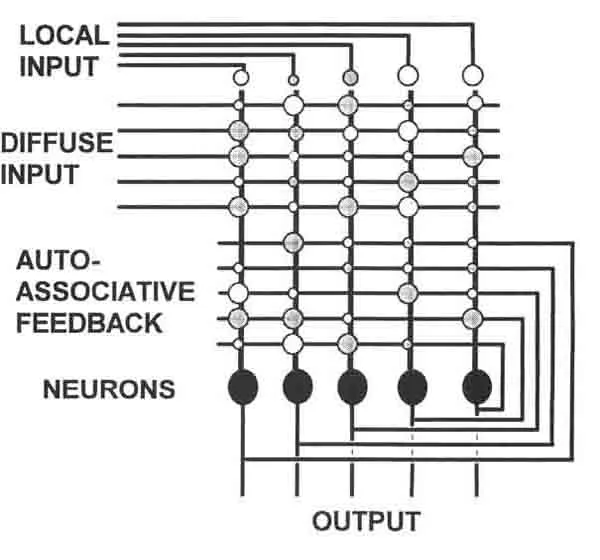

One possible explanation for the prevalence of static, locally coded models in neurophysiology is that they are simple and easily understood. Unfortunately, they have never been shown to be useful for information processing. Distributed processing, on the other hand, is relatively non-intuitive, but has been clearly demonstrated as an effective mode of information processing [15]. The emerging field of parallel distributed processing (PDP) in neural networks has provided a number of simple neural architectures which can perform distinct functions (see figure 1). These networks store information in a different manner than a digital computer, which contains a “local memory”, in which units of memory (i.e., binary bits) are stored in singular and unique storage locations on a memory chip. In contrast, a PDP system does not store memories as singular bits, but instead as patterns. A simple artificial neural network, for example, contains an array of neuron-like elements, each of which receives a large number of “synaptic” inputs. The memory lies in the pattern of strengths of these synaptic contacts. A neural network can store several memory patterns, roughly in proportion to the number of neurons. Each memory involves the participation of all of the neurons. Loss of any one neuron or synapse does not destroy any one memory, but subtly degrades all memories. Thus, each neuron is involved to some extent in the storage and processing of all of the information contained within the whole network.
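A minimal sketch of this kind of distributed storage, under stated assumptions: it uses a Hebbian outer-product rule on an 8-unit network with two hypothetical ±1 patterns, none of which come from the chapter. The function names and the "stability margin" measure are illustrative choices. Every pattern contributes to every weight, and silencing one unit lowers the margin for both stored patterns without abolishing either.

```python
def store_patterns(patterns):
    """Hebbian outer-product rule: each pattern adds to every weight."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights


def stability_margin(weights, pattern, lesioned=()):
    """Smallest (pattern[i] * input drive) over surviving units.

    A positive margin means every surviving unit is still pushed toward
    its stored value; a smaller margin means a more fragile memory.
    """
    n = len(pattern)
    margins = []
    for i in range(n):
        if i in lesioned:
            continue
        drive = sum(
            weights[i][j] * pattern[j] for j in range(n) if j not in lesioned
        )
        margins.append(pattern[i] * drive)
    return min(margins)


# Two hypothetical, mutually orthogonal memories (illustrative values only).
PATTERN_A = [1, 1, 1, 1, -1, -1, -1, -1]
PATTERN_B = [1, -1, 1, -1, 1, -1, 1, -1]
WEIGHTS = store_patterns([PATTERN_A, PATTERN_B])

if __name__ == "__main__":
    for name, pattern in (("A", PATTERN_A), ("B", PATTERN_B)):
        intact = stability_margin(WEIGHTS, pattern)
        damaged = stability_margin(WEIGHTS, pattern, lesioned=(0,))
        print(name, "intact margin:", intact, "after losing unit 0:", damaged)
```

In this toy case, removing unit 0 reduces the margin of both memories by the same amount, which is the graceful degradation the paragraph describes. A network this small is only a caricature of the capacity and robustness of larger networks.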

Both perceptrons and autoassociative nets can be used to classify input patterns. One begins with the assumption that certain distributed memories have been stored in the patterns of weightings in the network. If a certain input pattern is then presented, for example a binary code representing the letter “A”, an appropriate previously learned bit pattern will be produced on the outputs, just as in the perceptron. A number of network architectures include recurrent feedback connections [5, 6, 17-19, 40]. The autoassociative component of the network in figure 1 (also known as a Hopfield net) uses such feedback connections to “correct” flawed inputs. If, for example, the input pattern representing “A” has been partially corrupted, the network will go through a series of iterations in which feedback from the outputs makes synaptic contact on the dendrites. Assuming most of the input pattern is intact, the erroneous bits will thus be pushed toward the correct configuration. Ideally, the iterations continue until all of the outputs have achieved the most appropriate memorized bit pattern. In a thermodynamic metaphor, the network tends towards a state of minimum energy, which is therefore most stable. Given any random input vector, the network will converge on one of a small number of such minimum energy states.
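The error-correcting behavior described above can be sketched with a small Hopfield-style network. This is an illustrative toy, not the network of figure 1: the two stored ±1 patterns, the 8-unit size, the asynchronous update order, and all function names are assumptions made for the example. The energy function is the standard Hopfield form, E = -1/2 Σ w_ij s_i s_j, which cannot increase under asynchronous updates with symmetric weights and no self-connections.

```python
def hebbian_weights(patterns):
    """Symmetric outer-product weights with zero self-connections."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j] / n
    return weights


def energy(weights, state):
    """Hopfield energy: lower values correspond to more stable states."""
    n = len(state)
    return -0.5 * sum(
        weights[i][j] * state[i] * state[j] for i in range(n) for j in range(n)
    )


def settle(weights, state, max_sweeps=10):
    """Update units one at a time until a full sweep changes nothing."""
    state = list(state)
    energies = [energy(weights, state)]
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            drive = sum(weights[i][j] * state[j] for j in range(len(state)))
            new_value = 1 if drive >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        energies.append(energy(weights, state))
        if not changed:
            break
    return state, energies


# Hypothetical stored memories and a copy of the first with one bit flipped.
PATTERN_A = [1, 1, 1, 1, -1, -1, -1, -1]
PATTERN_B = [1, -1, 1, -1, 1, -1, 1, -1]
CORRUPTED_A = [-1, 1, 1, 1, -1, -1, -1, -1]

if __name__ == "__main__":
    w = hebbian_weights([PATTERN_A, PATTERN_B])
    recalled, energies = settle(w, CORRUPTED_A)
    print("recalled pattern:", recalled)
    print("energy per sweep:", energies)
```

Here the single erroneous bit is corrected on the first sweep and the energy falls from its starting value to that of the stored pattern. With more stored patterns or heavier corruption, a network of this kind can also settle into a different stored pattern or a spurious minimum.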

Of course, none of the artificial neural networks yet devised can compare to the complexity of real neuronal networks in the mammalian brain. Nevertheless, they provide clues as to non-intuitive modes by which the brain might process information. One can also use them as heuristic models for thinking about how one might analyze data obtained by monitoring the activity of real neural networks. For example, it is instructive to consider what a traditional neurophysiological analysis of such a network would reveal. A traditional single unit analysis of its outputs would typically involve defining “receptive fields (RFs)” by averaging a single neural element’s response to multiple stimulations of only one input channel at a time. We have found that using this technique to define the “short latency RF” of an autoassociative network tends to resolve RFs consisting exclusively of single sharp centers. These RFs are observed to change, however, as the network iterates toward a solution. Interestingly, we have observed such “dynamically shifting” RFs in neurons in the ventral posteromedial (VPM) thalamic nucleus of awake and anesthetized rats (described below). Unfortunately, this use of traditional techniques to understand such a network would completely miss its most important attribute: its input-output transformation is defined purely by its output patterns (or “population vector”). This information is thus only definable in terms of the relationship (i.e., covariance) between different output channels, which is a function of internal memories as well as inputs. Given the high likelihood that the brain also processes information in a parallel fashion, these observations suggest that resolution of this information will require large scale simultaneous recordings from functional networks of neurons.
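The point that covariance between channels carries information invisible to trial-averaged single unit analysis can be shown with a small sketch. The spike counts below are made-up illustrative numbers, not recorded data, and the function names are hypothetical. Both datasets give identical trial-averaged responses for each unit, yet the covariance between the two units has opposite sign, a difference that can only be seen when the units are recorded simultaneously.

```python
def mean_response(trials):
    """Trial-averaged response of each unit (rows are trials, columns units)."""
    n_trials = len(trials)
    n_units = len(trials[0])
    return [sum(t[u] for t in trials) / n_trials for u in range(n_units)]


def covariance_matrix(trials):
    """Sample covariance between units across simultaneously recorded trials."""
    n_trials = len(trials)
    means = mean_response(trials)
    n_units = len(means)
    cov = [[0.0] * n_units for _ in range(n_units)]
    for a in range(n_units):
        for b in range(n_units):
            cov[a][b] = sum(
                (t[a] - means[a]) * (t[b] - means[b]) for t in trials
            ) / (n_trials - 1)
    return cov


# Hypothetical spike counts for two units over four trials (illustrative only).
CO_ACTIVE = [[1, 1], [3, 3], [1, 1], [3, 3]]    # units rise and fall together
ALTERNATING = [[1, 3], [3, 1], [1, 3], [3, 1]]  # one unit fires when the other is quiet

if __name__ == "__main__":
    for name, data in (("co-active", CO_ACTIVE), ("alternating", ALTERNATING)):
        print(name, "mean:", mean_response(data),
              "cov(0,1):", covariance_matrix(data)[0][1])
```

Recording the two units one at a time and averaging over trials would yield the same description for both datasets. Only the off-diagonal covariance term, which requires both units on every trial, distinguishes them.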

FIGURE 1. Distributed processing in ne...