
1

Semantic systems or system?

Neuropsychological evidence re-examined

M.J. Riddoch, * G.W. Humphreys, M. Coltheart, and E. Funnell

BIRKBECK COLLEGE, UNIVERSITY OF LONDON, LONDON, U.K.

Introduction

Access to a semantic system is necessary for comprehension to occur. Comprehension is more than mere recognition: it is possible to recognise that something has been encountered previously, without necessarily knowing how that item differs functionally from other similar items, how it is related to other items, or how it might be used. We take it that a semantic system specifies the kind of knowledge that allows decisions to be made concerning the functional and associative characteristics of things.

Our paper is concerned with the following issue: is there a single semantic system which is used for all comprehension tasks, regardless of the modality of the stimulus input or the nature of the semantic information required by the task? Or are there, instead, separate semantic systems associated with separate modalities of input or separate types of semantic information?

The second of these views is often expressed by distinguishing “visual semantics” from “verbal semantics”. However, the distinction can be interpreted in a variety of rather different ways. Clarification is therefore imperative here.

As a first point: the terms “visual” and “verbal” are slightly unfortunate, since all those who subscribe to the distinction between visual and verbal semantics would regard reading comprehension as involving access to the verbal semantic system even though the stimuli involved are visual. So it might be wise to adopt a slightly different terminology: one might refer to pictorial semantics (covering objects and pictures) and verbal semantics (covering both spoken and written words). However, to be consistent with previous work, we will continue to use the current terminology.

The two rather different interpretations of the distinction between visual semantics and verbal semantics extant in the literature are as follows.

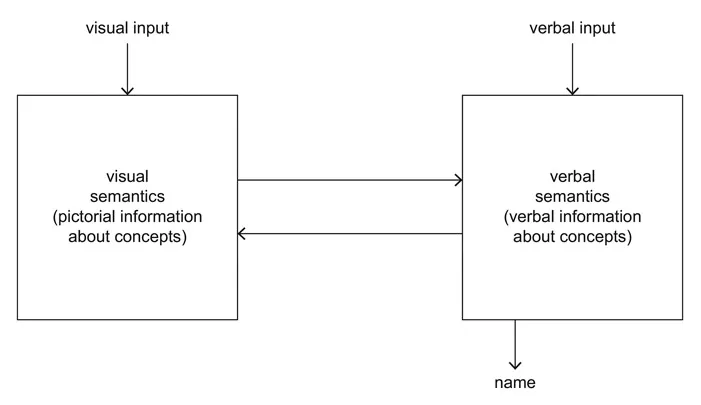

- 1 Modality of input. Comprehension of pictures or objects depends upon access to a visual semantic system. Comprehension of words depends upon access to a verbal semantic system. Analogously, there will be a tactile semantic system (for comprehending touched or felt objects), an auditory semantic system (for comprehending nonverbal sounds), an olfactory semantic system, and so on. We will refer to this as the input account of the distinction between semantic systems. We take this to be the view held by Warrington (see, e.g., Warrington, 1975) and by Shallice (see, e.g., Shallice, 1987). One important implication of their views appears to be that semantic information is duplicated in the two systems. The fact that a cat drinks milk is represented in verbal semantics (which is how we know that milk-drinking is associated with the printed word cat) and also in visual semantics (which is how the property of milk-drinking can be evoked when we see a cat). It is because of this duplication that the same question (e.g. “Is it larger than a telephone directory?”) can be treated as a test of access to visual semantics (when the stimulus is a picture) and as a test of access to verbal semantics (when the stimulus is a word: see Warrington, 1975).¹ A processing framework consistent with this account is given in Figure 1.1.

Figure 1.1 The input account of the distinction between visual and verbal semantics.

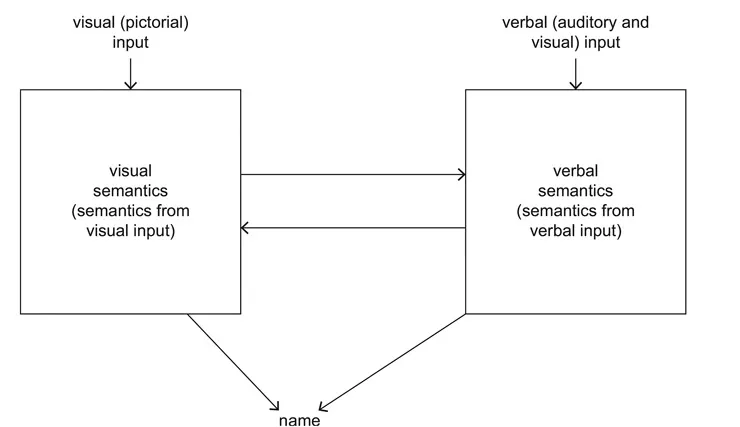

- 2 Modality of information. Access to information about visual/pictorial properties of a concept depends upon access to a pictorial semantic system in which all information about such properties is stored. In order to retrieve this information all stimuli would have to access the pictorial semantic system, irrespective of the modality of input. Access to information about verbal properties of a concept depends upon access to a verbal semantic system, again irrespective of the modality of stimulus input. We will refer to this as the representation account of the distinction between semantic systems. We take this to be the view held by Beauvois (1982; see also Beauvois & Saillant, 1985). Figure 1.2 gives a processing framework illustrating the representation account.

Figure 1.2 The representation account of the distinction between visual and verbal semantics.

To demonstrate the difference between the input and the representation accounts, consider how the two accounts explain how we answer a simple auditory question, such as “What is the colour of a strawberry?” According to the input account, we must access the verbal semantic system to answer this question because the information is presented auditorily. According to the representation account, we must access the visual semantic system to answer this question because information about the colour of a strawberry is part of our visual semantic knowledge.
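The contrast drawn above can be put as a small routing sketch. This is only an illustration of the two accounts as described here, not a model proposed by any of the cited authors; the names `Stimulus`, `Knowledge`, `input_account` and `representation_account` are hypothetical labels introduced for the example.

```python
from enum import Enum


class Stimulus(Enum):
    """Form in which the item or question reaches the subject (hypothetical labels)."""
    SPOKEN_WORD = "spoken word"
    WRITTEN_WORD = "written word"
    PICTURE = "picture or object"


class Knowledge(Enum):
    """Kind of semantic information the task asks for (hypothetical labels)."""
    VISUAL = "visual property"
    VERBAL = "verbal property"


VERBAL_STIMULI = {Stimulus.SPOKEN_WORD, Stimulus.WRITTEN_WORD}


def input_account(stimulus: Stimulus, knowledge: Knowledge) -> str:
    # Input account: the system consulted is fixed by the modality of the
    # stimulus; the kind of knowledge requested plays no role.
    return "verbal semantics" if stimulus in VERBAL_STIMULI else "visual semantics"


def representation_account(stimulus: Stimulus, knowledge: Knowledge) -> str:
    # Representation account: the system consulted is fixed by the kind of
    # knowledge requested; the modality of the stimulus plays no role.
    return "visual semantics" if knowledge is Knowledge.VISUAL else "verbal semantics"


# "What is the colour of a strawberry?" asked aloud:
question = (Stimulus.SPOKEN_WORD, Knowledge.VISUAL)
print(input_account(*question))           # verbal semantics
print(representation_account(*question))  # visual semantics
```

The two functions take the same arguments but each ignores one of them, which is the whole of the disagreement between the accounts for a question of this kind.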

Whether approach (1) or approach (2) is adopted, it is necessary to postulate bidirectional links between the two semantic systems. The input account needs such links to explain our ability to match pictures to words. The representation account needs such links to explain our ability to coordinate pictorial semantic information with verbal semantic information – our ability, for example, to answer such questions as “How large is the domestic animal which kills mice?”

There are thus two different accounts which assume that there exist separate semantic systems. We also need to incorporate the process of access to phonology for the purpose of naming. So an answer needs to be provided to this question: is it only the verbal semantic system (whether defined in sense [1] or in sense [2]) that can access the name of a concept directly, or are there direct links from representations in visual semantics (however defined) to names? This question does not arise, of course, for those who take the view that there is only a single semantic system, accessible from all modalities of input and containing all forms of semantic information.

The relationship between output phonology and the different semantic systems is only explicit in the representation account of modality-specific semantic systems. For instance, in order to account for optic aphasia (a naming impairment specific to visual stimuli), Beauvois (1982; Beauvois & Saillant, 1985) posits that the bidirectional links between the visual and verbal semantic systems are disrupted, so that such patients are impaired at any tasks requiring mediation between the two semantic systems. It follows that, in order to account for a visual naming impairment in these terms, we must assume that there are no direct links between the visual semantic system and output phonology; stored visual attributes of objects can only be named via the verbal semantic system, and since the links from visual to verbal semantics are thought to be impaired, this will affect the naming of visually presented stimuli selectively. On the other hand, since such patients are often able to mime the gestures to the objects that they cannot name, it is posited that access to the visual semantic system is intact.

A rather different view comes from the work of Warrington (1975). Warrington argued that two patients, AB and EM, were selectively impaired in the representation of visual and verbal semantic knowledge, where the semantic knowledge systems were tested selectively by varying the input modality. EM, hypothesised to have an impaired verbal semantic system, was better at picture identification than AB, hypothesised to have an impaired visual semantic system (the patients scored 37/40 vs. 19/40 respectively on a picture recognition task). Accordingly, we may suggest the need for direct connections between the visual semantic system and the phonological output lexicon in order to explain EM’s superior naming and definitions of pictures.²

In a recent discussion of the topic, Shallice (1987) has argued that there are at least three lines of neuropsychological evidence which support the existence of multiple semantic systems:

- 1 modality-specific aphasias;

- 2 modality-specific priming effects in access deficits to semantics; and

- 3 modality-specific aspects of semantic memory disorders.

Two questions may be raised: how strong is this evidence, and which variant of the multiple semantic system view is supported – the input or the representation account?

Modality-specific aphasias

Patients have been described with a naming impairment specific to a particular input modality; for example, impaired visual object naming relative to auditory and tactile naming (Lhermitte & Beauvois, 1973; Riddoch & Humphreys, 1987), impaired auditory relative to visual object naming (Denes & Semenza, 1975), and impaired tactile naming relative to visual object naming (Beauvois, Saillant, Meininger, & Lhermitte, 1978). Shallice (1985) suggests that: “the simplest explanation for these syndromes is that multiple semantic systems do exist but there is an impairment in the transmission of information from one of the modality specific semantic systems to verbal systems (including the verbal semantic system)”. Within the framework of the representation account (Fig. 1.2), Shallice’s argument would accord with the existence of a single lesion impairing connections between one of the nonverbal semantic systems and the verbal semantic system. Within the framework of the input account given in Fig. 1.1, there need to be two separate lesions, one separating the visual semantic system from the verbal semantic system and one separating the visual semantic system from output phonology.

Shallice’s argument rests on the assumption that modality-specific aphasias have been properly demonstrated and that, in such cases, there is normal access to semantic knowledge (of at least some form). One problem, though, is that in some cases the tests of naming presented in different modalities have not used the same stimuli. It is difficult to ensure that task difficulty was equated in such instances. For instance, the contrast between the naming of tactilely presented real objects and pictures of objects is not a valid test of a modality-specific naming deficit since less information will be available in pictures than when real objects are presented visually (cf. the discussion of patient JF by Lhermitte & Beauvois, 1973). Nevertheless, Riddoch and Humphreys (Note 3; in press) have shown poorer naming of the same objects presented visually than when they were presented tactilely in a patient (JB) who could also make accurate gestures to visually presented objects that he could not name. For instance, in a test of naming and gesturing to visually presented objects, JB was presented with 44 objects, each with a discriminably different gesture. JB named 20/44 (45%) of the objects correctly from their visual presentation; he made 33/44 (75%) correct gestures. There is a reliable difference between his naming and gesturing ability (McNemar’s test χ² = 8.45, P < 0.005). We also showed that JB was impaired at accessing semantic (associative and functional) knowledge about objects from vision (see Humphreys, Riddoch, & Quinlan, this volume). From this work we take it that we cannot assume that a patient has intact access to semantic knowledge when correct gestures are made; it seems possible that gestures could be made on the basis of other nonsemantic forms of information (the perceptual attributes of objects or following access to stored structural knowledge). Thus access to semantic knowledge must always be tested directly.

A patient (PWD) with a selectively impaired ability to name meaningful nonverbal sounds in the presence of relatively good comprehension of nonverbal sounds has been reported by Denes and Semenza (1975). PWD demonstrated a gross impairment in both understanding and repeating spoken speech whereas his spontaneous speech was fluent, demonstrating normal prosody and articulation with no paraphasias. His naming of items presented visually, tactilely, or olfactorily was perfect but he was only able to name 4/20 (20%) of meaningful nonverbal sounds. PWD also carried out a sound-picture match task where four pictures were used for each target sound. The four pictures depicted:

- 1 the natural source of the sound;

- 2 an acoustically similar sound;

- 3 a source of sound from the same semantic category as the natural source; and,

- 4 an unrelated sound.

PWD scored 85% (17/20) correct on this test; such relatively good performance is difficult to account for if PWD’s semantic system for auditory nonverbal stimuli were impaired (cf. Shallice, 1985). Rather, it can be argued that PWD had at least relatively intact knowledge of the meaning and referents for sounds, enabling an improvement to occur in the picture-sound match task, when he could use pictures to derive associated sound information. Such an account would be inconsistent with the position that PWD was impaired at accessing semantic knowledge about nonverbal sounds.

Patient RG (Beauvois et al., 1978) is described as a case of tactile aphasia. This patient was impaired at naming tactilely presented objects, right hand 71% (71/100), left hand 64% (64/100), while no impairment was demonstrated in naming objects presented visually (96%, 96/100) or auditorily (98.8%, 79/80). Beauvois et al. suggest that RG is able to identify (and presumably access the correct semantics of) objects presented tactilely because he can handle perfectly objects that he cannot name. As with cases of optic aphasia, though, the inference of correct access to semantic knowledge because of correct gestures may not be valid. Gestures to tactile stimuli, similarly to gestures from vision, may be based either on perceptual information or on stored nonsemantic knowledge. Interestingly, RG showed good performance on a tactile-visual matching task (100%, 100/100; where the patient had to decide whether an unseen object in his hand matched a picture that was shown to him), whereas he performed slightly worse on a tactile-verbal matching task (84%, 84/100; where the patient had to decide whether an unseen object in his hand matched a name spoken by the examiner). Correct interpretation of the relations between data from matching and naming tasks requires that we should correct the matching data to take out those responses which may be correct by chance. Since the chance level in the tactile-verbal matching task was 50%, correcting the tactile-verbal matching performance for chance indicates that the probability of correct tactile-verbal matching was 0.68. This is equal to the probability of correct tactile naming (0.68). It is therefore reasonable to claim that all of RG’s failures of tactile naming were due to failures of access to semantics, implying: (a) that no post-semantic naming difficulties were involved here; and (b) that RG did not have available a route from touch to naming that by-passes semantics.
Shallice (1985) accounts for the difference between the tactile-visual and the tactile-verbal matching tasks by assuming that there is a deficit in the processes mediating communication between the tactile and verbal semantic systems but not between the tactile and visual semantic systems. However, on the basis of RG’s data alone we cannot distinguish between input and representation views of the semantic systems involved.
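
The chance correction applied to RG’s tactile-verbal matching score can be checked with a short calculation. The sketch below assumes the standard correction for guessing, (observed − chance) / (1 − chance), which reproduces the 0.68 reported above; the text does not name the formula used. It also assumes that the tactile naming figure of 0.68 is the score pooled over the right and left hands (135/200), which is not stated explicitly. The function name `correct_for_chance` is introduced only for this example.

```python
def correct_for_chance(p_observed: float, p_chance: float) -> float:
    """Estimate the proportion of trials answered from knowledge, not guessing.

    Assumes the standard correction for guessing:
    (observed - chance) / (1 - chance).
    """
    if not 0.0 <= p_chance < 1.0:
        raise ValueError("p_chance must lie in [0, 1)")
    return (p_observed - p_chance) / (1.0 - p_chance)


# RG's tactile-verbal matching: 84/100 correct, chance level 50%.
tactile_verbal_matching = correct_for_chance(84 / 100, 0.5)

# RG's tactile naming, pooled over both hands (an assumption of this sketch):
# right hand 71/100, left hand 64/100.
tactile_naming = (71 + 64) / (100 + 100)

print(round(tactile_verbal_matching, 2))  # 0.68
print(round(tactile_naming, 3))           # 0.675
```

On these assumptions the corrected matching score and the pooled naming score agree to two decimal places once the latter is rounded, which is the equality the argument relies on.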

Modality-specific priming

Patient AR (Warrington & Shallice, 1979) is described as a semantic access dyslexic. AR was poor at naming words and objects, although his ability to name objects from an auditory description was better than his naming of the same objects from vision (11/15 vs. 1/15). AR was also reported as being able to describe objects by their function. However, his ability to name words (e.g. pyramid) was increased more by an auditory verbal prompt (such as Egypt) than by presenting a picture corresponding to the word.

Warrington and Shallice describe AR as having an access deficit because his naming of words was inconsistent in different test sessions. Shallice (1987) argues that his disorder is consistent with a theory in which modality-specific semantic systems are posited because of the differences in priming by auditory word and picture prompts. Accordingly he suggests that AR has a deficit in the processes transmitting information between the visual and verbal semantic systems, leading to the lack of picture priming. Nevertheless, access to visual semantics is thought to be intact on the basis of AR’s ability to give functional descriptions of objects. This account, t...
