- 624 pages

- English

- ePUB (mobile friendly)

About this book

- The first book by leading experts on this rapidly developing field, with applications to security, smart homes, multimedia, and environmental monitoring
- Comprehensive coverage of fundamentals, algorithms, design methodologies, system implementation issues, architectures, and applications
- Presents in detail the latest developments in multi-camera calibration, active and heterogeneous camera networks, multi-camera object and event detection, tracking, coding, smart camera architecture, and middleware

This book is the definitive reference on multi-camera networks. It gives clear guidance on the conceptual and implementation issues involved in the design and operation of multi-camera networks, and presents the state of the art in hardware, algorithms, and system development. The book is broad in scope, covering smart camera architectures, embedded processing, sensor fusion and middleware, calibration and topology, network-based detection and tracking, and applications in distributed and collaborative methods in camera networks. It will be an ideal reference for university researchers, R&D engineers, computer engineers, and graduate students working in signal and video processing, computer vision, and sensor networks.

Hamid Aghajan is a Professor of Electrical Engineering (consulting) at Stanford University. His research is on multi-camera networks for smart environments, with applications to smart homes, assisted living and well-being, meeting rooms, and avatar-based communication and social interactions. He is Editor-in-Chief of the Journal of Ambient Intelligence and Smart Environments, and was general chair of ACM/IEEE ICDSC 2008.

Andrea Cavallaro is Reader (Associate Professor) at Queen Mary, University of London (QMUL). His research is on target tracking and audiovisual content analysis for advanced surveillance and multi-sensor systems. He serves as Associate Editor of the IEEE Signal Processing Magazine and the IEEE Transactions on Multimedia, and has been general chair of IEEE AVSS 2007, ACM/IEEE ICDSC 2009, and BMVC 2009.

1.1 INTRODUCTION

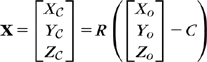

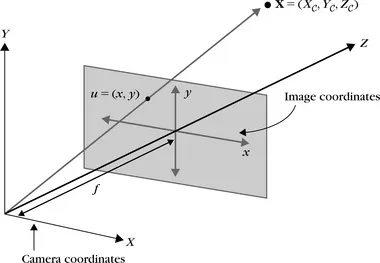

1.2 IMAGE FORMATION

1.2.1 Perspective Projection

Table of contents

- Cover image

- Title page

- Table of Contents

- Copyright

- Foreword

- Preface

- PART 1: MULTI-CAMERA CALIBRATION AND TOPOLOGY

- PART 2: ACTIVE AND HETEROGENEOUS CAMERA NETWORKS

- PART 3: MULTI-VIEW CODING

- PART 4: MULTI-CAMERA HUMAN DETECTION, TRACKING, POSE AND BEHAVIOR ANALYSIS

- PART 5: SMART CAMERA NETWORKS: ARCHITECTURE, MIDDLEWARE, AND APPLICATIONS

- Outlook

- Index
