
Chapter 1

General Principles for Assessing Higher-Order Thinking

Constructing an assessment always involves these basic principles:

- Specify clearly and exactly what it is you want to assess.

- Design tasks or test items that require students to demonstrate this knowledge or skill.

- Decide what you will take as evidence of the degree to which students have shown this knowledge or skill.

This general three-part process applies to all assessment, including assessment of higher-order thinking. Assessing higher-order thinking almost always involves three additional principles:

- Present something for students to think about, usually in the form of introductory text, visuals, scenarios, resource material, or problems of some sort.

- Use novel material—material that is new to the student, not covered in class and thus subject to recall.

- Distinguish between level of difficulty (easy versus hard) and level of thinking (lower-order thinking or recall versus higher-order thinking), and control for each separately.

The first section of this chapter briefly describes the general principles that apply to all assessment, because without them, assessment of anything, including higher-order thinking, fails. The second section expands on the three principles for assessing higher-order thinking. A third section deals with interpreting student responses when assessing higher-order thinking. Whether you are interpreting work for formative feedback and student improvement or scoring work for grading, you should look for qualities in the work that are signs of appropriate thinking.

Basic Assessment Principles

Begin by specifying clearly and exactly the kind of thinking, about what content, you wish to see evidence for. Check each learning goal you intend to assess to make sure that it specifies the relevant content clearly, and that it specifies what type of performance or task the student will be able to do with this content. If these are less than crystal clear, you have some clarifying to do.

This is more important than some teachers realize. It may seem like fussing with wording. After all, what's the difference between "the student understands what slope is" and "the student can solve multistep problems that involve identifying and calculating slope"? It's not just that one is wordier than the other. The second one specifies what, specifically, students are able to do, and that is both the target for learning and the way you will organize your assessment evidence.

If your target is just a topic, and you share it with students in a statement like "This week we're going to study slope," you are operating with the first kind of goal ("the student understands what slope is"). Arguably, one assessment method would be for you to ask students at the end of the week, "Do you understand slope now?" And, of course, they would all say, "Yes, we do."

Even with a less cynical approach, suppose you were going to give an end-of-week assessment to see what students knew about slope. What would you put on it? How would you know whether to write test items or performance tasks? One teacher might put together a test with 20 questions asking students to calculate slope using the slope formula. Another teacher might ask students to come up with their own problem situation in which finding the slope of a line is a major part of the solution, write it up as a small project, and include a class demonstration. These divergent approaches would probably result in different appraisals of students' achievement. Which teacher has evidence that the goal was met? As you have figured out by now, I hope, the point here is that you can't tell, because the target wasn't specified clearly enough.

Even with the better, clearer target ("The student can solve multistep problems that involve identifying and calculating slope"), you still have a target that is clear only to the teacher. Students are the ones who have to aim their thinking and their work toward the target. Before studying slope, most students would not know what a "multistep problem that involves identifying and calculating slope" looks like. To really have a clear target, you need to describe the nature of the achievement clearly for students, so they can aim for it.

In this case you might start with some examples of the kinds of problems that require knowing the rate at which some value increases or decreases with respect to changes in some other value. For example, suppose some physicians wanted to know whether, and at what rate, the expected life span for U.S. residents has changed since 1900. What data would they need? What would the math look like? Show students a few examples and ask them to come up with other scenarios of the same type until everyone is clear about what kinds of thinking they should be able to do once they learn about slope.

Design performance tasks or test items that require students to use the targeted thinking and content knowledge. The next step is making sure the assessment really does call forth from students the desired knowledge and thinking skills. This requires that individual items and tasks tap intended learning, and that together as a set, the items or tasks on the assessment represent the whole domain of desired knowledge and thinking skills in a reasonable way.

Here's a simple example of an assessment that does not tap intended learning. A teacher's unit on poetry stated the goal that students would be able to interpret poems. Her assessment consisted of a section of questions matching poems with their authors, a section requiring the identification of rhyme and meter schemes in selected excerpts from poems, and a section asking students to write an original poem. She saw these sections, rightly, as respectively tapping the revised Bloom's taxonomy levels of Remember, Apply, and Create in the content area (poetry), and thought her assessment was a good one that included higher-order thinking. It is true that higher-order thinking was required. However, if you think about it, none of these items or tasks directly tapped students' ability to interpret poems.

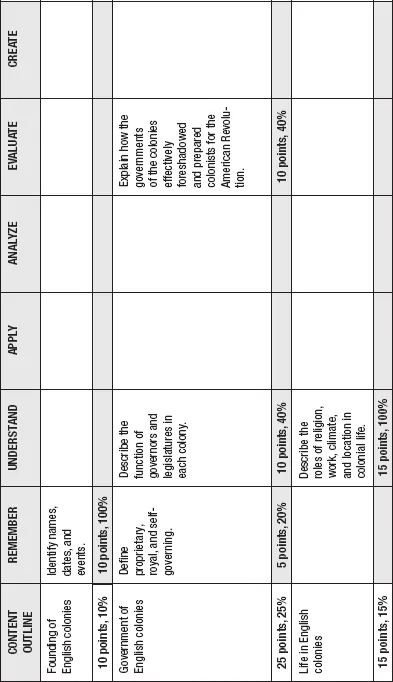

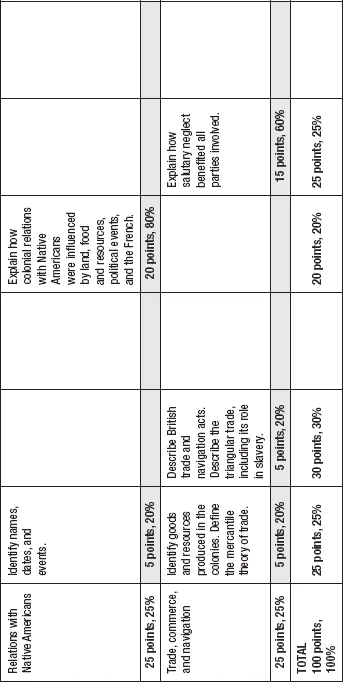

Plan the balance of content and thinking with an assessment blueprint. Some sort of planning tool is needed to ensure that a set of assessment items or tasks represents the breadth and depth of knowledge and skills intended in your learning target or targets. The most common tool for this is an assessment blueprint. An assessment blueprint is simply a plan that indicates the balance of content knowledge and thinking skills covered by a set of assessment items or tasks. A blueprint allows your assessment to achieve the desired emphasis and balance among aspects of content and among levels of thinking. Figure 1.1 shows a blueprint for a high school history assessment on the English colonies.

Figure 1.1 Blueprint for a High School Assessment on the English Colonies, 1607–1750

The first column (Content Outline) lists the major topics the assessment will cover. The outline can be as simple or as detailed as you need to describe the content domain for your learning goals. The column headings across the top list the classifications in the Cognitive domain of the revised Bloom's taxonomy. Any other taxonomy of thinking (see Chapter 2) could be used as well.

The cells in the blueprint can list the specific learning targets and the points allocated for each, as this one does, or simply indicate the number of points allocated, depending on how comprehensive the content outline is. You can also use simpler blueprints, for example, a content-by-cognitive-level matrix without the learning targets listed. The points you select for each cell should reflect your learning target and your instruction. The example in Figure 1.1 shows a 100-point assessment to make the math easy. Each time you do your own blueprint, use the intended total points for that test as the basis for figuring percents; it will not often be exactly 100 points.

Notice that the blueprint allows you to fully describe the composition and emphasis of the assessment as a whole, so you can interpret it accurately. You can also use the blueprint to identify places where you need to add material. It is not necessary for every cell to be filled. The important thing is that the cells that are filled reflect your learning goals. Note also that the points in each cell do not all have to be 1-point test items. For example, the 10 points in the cell for explaining how colonial governments helped prepare citizens for participation in the American Revolution could be one 10-point essay, two 5-point essays, or any combination that totals 10 points.

A blueprint helps ensure that your assessment and the information about student achievement that comes from it have the emphasis you intend. In the assessment diagrammed in Figure 1.1, three topic areas (government, relations with Native Americans, and trade, at 25 percent each) have more weight than colonial life (15 percent) or the founding of the colonies (10 percent). The points and percentages within each row show how much of each topic area is allocated to each level of thinking, and the totals at the bottom show the distribution of kinds of thinking across the whole assessment. In Figure 1.1, 55 percent of the assessment is allocated to recall and comprehension (25 percent plus 30 percent), and 45 percent is allocated to higher-order thinking (20 percent plus 25 percent). If the emphases don't come out the way you intend, it's a lot easier to change the values in the cells at the planning stage than to rewrite parts of the assessment later on.
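The percentage arithmetic in this paragraph can be checked with a few lines of code. The sketch below is a minimal Python illustration, not part of the blueprint method itself. It uses only the row and column totals stated in the text (the individual cells of Figure 1.1 are not reproduced), the topic labels are shorthand, and the two higher-order columns get placeholder names because the text reports only their totals. Because it divides by the actual total rather than assuming 100, the same function works for a test worth any number of points.

```python
def emphasis(points_by_category):
    """Return each category's share of the total points, as a percentage."""
    total = sum(points_by_category.values())
    if total <= 0:
        raise ValueError("total points must be positive")
    return {name: 100 * pts / total for name, pts in points_by_category.items()}


# Row totals (points per topic) stated in the text for the 100-point test.
topic_points = {
    "Government": 25,
    "Relations with Native Americans": 25,
    "Trade": 25,
    "Colonial life": 15,
    "Founding of the colonies": 10,
}

# Column totals (points per level of thinking) stated in the text.
# The two higher-order column names are placeholders, not Figure 1.1 headings.
level_points = {
    "Recall": 25,
    "Comprehension": 30,
    "Higher-order column A": 20,
    "Higher-order column B": 25,
}

# Rows and columns must account for the same intended total.
assert sum(topic_points.values()) == sum(level_points.values())

topic_pct = emphasis(topic_points)
level_pct = emphasis(level_points)
lower_order = level_pct["Recall"] + level_pct["Comprehension"]
higher_order = level_pct["Higher-order column A"] + level_pct["Higher-order column B"]

for topic, pct in topic_pct.items():
    print(f"{topic}: {pct:.0f}%")
print(f"Recall + comprehension: {lower_order:.0f}%")  # 55%
print(f"Higher-order thinking: {higher_order:.0f}%")  # 45%
```

If the row totals and column totals ever disagree, the assertion fails, which is a quick signal that a cell was miscounted while drafting the blueprint.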

In fact, blueprints simplify the task of writing an assessment. The blueprint tells you exactly what kind of tasks and items you need. You might, when seeing a blueprint like this, decide that you would rather remove one of the higher-order thinking objectives and use a project, paper, or other performance assessment for that portion of your learning goals for the unit, and a test to cover the rest of the learning goals. So, for example, you might split off the question in the cell Analyze/Native Americans and assign a paper or project for that. You could recalculate the test emphases to reflect an 80-point test, and combine the project score with the test score for the final grade for the unit.
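A minimal Python sketch of that recalculation follows. It rests on two assumptions the text implies but does not state outright: the Analyze/Native Americans cell is worth the 20 points that take the test from 100 to 80, and the project is scored out of those same 20 points, with the two scores combined by simply adding raw points. The function names `emphasis` and `unit_percent` are hypothetical.

```python
def emphasis(points_by_topic):
    """Return each topic's share of the total points, as a percentage."""
    total = sum(points_by_topic.values())
    return {topic: 100 * pts / total for topic, pts in points_by_topic.items()}


def unit_percent(test_earned, project_earned, test_max=80, project_max=20):
    """Combine test and project by adding raw points (an assumed scheme)."""
    return 100 * (test_earned + project_earned) / (test_max + project_max)


# Row totals for the original 100-point test, as stated in the text.
original = {
    "Government": 25,
    "Relations with Native Americans": 25,
    "Trade": 25,
    "Colonial life": 15,
    "Founding of the colonies": 10,
}

# Assumption: the Analyze/Native Americans cell held the 20 points
# that move to the project, leaving an 80-point test.
test_only = dict(original)
test_only["Relations with Native Americans"] -= 20

print(sum(test_only.values()))  # 80
for topic, pct in emphasis(test_only).items():
    print(f"{topic}: {pct:.2f}%")

# A hypothetical student: 68/80 on the test and 17/20 on the project.
print(unit_percent(68, 17))  # 85.0
```

With the project worth 20 points, the unit as a whole keeps the original 25 percent emphasis on relations with Native Americans (5 test points plus 20 project points), even though the 80-point test alone puts only 6.25 percent there.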

Plan the balance of content and thinking for units. You can also use this blueprint approach for planning sets of assessments (in a unit, for example). Cross all the content for a unit with cognitive levels, then use the cells to plan how all the assessments fit together. Information about student knowledge, skills, and thinking from both tests and performance assessments can then be balanced across the unit.

Plan the balance of content and thinking for rubrics. And while we're on the subject of balance, use blueprint-style thinking to examine any rubrics you use. Decide on the balance of points you want for each criterion, taking into account the cognitive level required for each, and make sure the whole that they create does indeed reflect your intentions for teaching, learning, and assessing. For example, a common rubric format for written projects in many subjects assesses content completeness and accuracy, organization/communication, and writing conventions. If each criterion is weighted equally, only one-third of the project's score reflects content. Evaluating such a rubric for balance might lead you to decide to weight the content criterion double. Or it might lead you to decide there was too much emphasis on facts and not enough on interpretation, and you might change the criteria to content completeness and accuracy, soundness of thesis and reasoning, and writing conventions. You might then weight the first two criteria double, leading to a score that reflects 80 percent content (40 percent each for factual information and for higher-order thinking) and 20 percent writing.
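The weighting arithmetic in this paragraph can be sketched in Python as follows. The criterion names come from the paragraph; the 4-point rating scale and the sample ratings are hypothetical, used only to show how doubled weights carry through to a student's score.

```python
def criterion_shares(weights):
    """Percentage of the total rubric score carried by each criterion."""
    total = sum(weights.values())
    return {name: 100 * w / total for name, w in weights.items()}


def weighted_percent(ratings, weights, max_rating=4):
    """Weighted rubric score as a percentage; max_rating is a hypothetical scale."""
    earned = sum(weights[c] * ratings[c] for c in weights)
    possible = sum(weights.values()) * max_rating
    return 100 * earned / possible


equal = {"Content completeness and accuracy": 1,
         "Organization/communication": 1,
         "Writing conventions": 1}
# Same rubric with the content criterion weighted double.
content_doubled = {**equal, "Content completeness and accuracy": 2}
# Revised criteria with the first two weighted double.
revised = {"Content completeness and accuracy": 2,
           "Soundness of thesis and reasoning": 2,
           "Writing conventions": 1}

for label, weights in [("equal", equal),
                       ("content doubled", content_doubled),
                       ("revised", revised)]:
    shares = criterion_shares(weights)
    print(label, {name: round(pct, 1) for name, pct in shares.items()})

# Hypothetical ratings on a 4-point scale under the revised rubric.
ratings = {"Content completeness and accuracy": 3,
           "Soundness of thesis and reasoning": 4,
           "Writing conventions": 2}
print(weighted_percent(ratings, revised))  # 80.0
```

Under the revised weights, a one-point change in either content criterion moves the score twice as much as a one-point change in writing conventions, which is what the 40/40/20 split means in practice.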

Decide what you will take as evidence that the student has, in fact, exhibited this kind of thinking about the appropriate content. After students have responded to your assessments, then what? You need a plan for interpreting their work as evidence of the specific learning you intended. If your assessment was formative (that is, it was for learning, not for grading), then you need to know how to interpret student responses and give feedback. The criteria you use as the basis for giving students feedback should reflect the clear learning target and the vision of good work that you shared with the students.

If your assessment was summative (for grading), then you need to design a scheme to score student responses in such a way that the scores reflect degrees of achievement in a meaningful way. We will return to the matter of interpreting or scoring student work after we present some specific principles for assessing higher-order thinking. It will be easier to describe how to interpret or score work once we have more completely described how to prepare the tasks that will elicit that work.

Principles for Assessing Higher-Order Thinking

Put yourself in the position of a student attempting to answer a test question or do a performance assessment task. Asking "How would I (the student) have to think to answer this question or do this task?" should help you figure out what thinking skills are required for an assessment task. Asking "What would I (the student) have to think about to answer the question or do the task?" should help you figure out what content knowledge is required for an assessment task. As for any assessment, both should match the knowledge and skills the assessment is intended to tap. This book focuses on the first question, the question about student thinking, but it is worth mentioning that both are important and must be considered together in assessment design.

As the beginning of this chapter foreshadowed, using three principles when you write assessment items or tasks will help ensure you assess higher-order thinking: (1) use introductory material or allow access to resource material, (2) use novel material, and (3) attend separately to cognitive complexity and difficulty. In the next sections, each of these principles is discussed in more detail.

Use introductory material. Using introductory material, or allowing students to use resource materials, gives students something to think about. For example, student performance on a test question about Moby Dick that does not allow students to refer to the book might say more about whether students can recall details from Moby Dick than about how they can think about them.

You can use introductory material with many different types of test items and performance assessment tasks. Context-dependent multiple-choice item sets, sometimes called interpretive exercises, offer introductory material and then one or several multiple-choice items based on the material. Constructed-response (essay) questions with introductory material are similar, except students must write their own answers to the questions. Performance assessments, including various kinds of papers and projects, require students to make or do something more extended than answering a test question. They can assess higher-order thinking, especially if they ask students to support their choices or thesis, explain their reasoning, or show their work. In this book, we will look at examples of each of these three assessment types.

Use novel material. Novel material means material students have not worked with already as part of classroom instruction. Using novel material means students have to actually think, not merely recall material covered in class. For example, a seemingly higher-order-thinking essay question about how Herman Melville used the white whale as a symbol is merely recall if there was a class discussion on the question "What does the white whale symbolize in Moby Dick?" From the students' perspective, that essay question becomes "Summarize what we said in class last Thursday."

This principle about novel material can cause problems for classroom teachers in reg...