
CHAPTER 1

Enter the quantum

Until the 20th century it was assumed that matter was much the same on whatever scale you looked at it. When, back in Ancient Greek times, a group of philosophers imagined what would happen if you cut something up into smaller and smaller pieces until you reached a piece that was uncuttable (atomos), they envisaged that atoms would be just smaller versions of what we observe. A cheese atom, for instance, would be no different, except in scale, to a block of cheese. But quantum theory turned our view on its head. As we explore the world of the very small, such as photons of light, electrons and atoms as we now understand them, we find that its inhabitants behave like nothing we can directly experience with our senses.

A paradigm shift

Realising how very different reality is at the quantum level was what historians of science like to call, rather pompously, a ‘paradigm shift’. Suddenly, the way that scientists looked at the world became different. Before the quantum revolution it was assumed that atoms (if they existed at all – many scientists didn’t really believe in them before the 20th century) were just like tiny little balls of the stuff they made up. Quantum physics showed that they behaved so weirdly that an atom of, say, carbon has to be treated as if it is something totally different to a piece of graphite or diamond – and yet all that is inside that lump of graphite or diamond is a collection of these carbon atoms. The behaviour of quantum particles is strange indeed, but that does not mean that it is unapproachable without a doctorate in physics. I quite happily teach the basics of quantum theory to ten-year-olds. Not the maths, but you don’t need mathematics to appreciate what’s going on. You just need the ability to suspend your disbelief. Because quantum particles refuse to behave the way you’d expect.

As the great 20th-century quantum physicist Richard Feynman (we’ll meet him again in detail before long) said in a public lecture: ‘[Y]ou think I’m going to explain it to you so you can understand it? No, you’re not going to be able to understand it. Why, then, am I going to bother you with all this? Why are you going to sit here all this time, when you won’t be able to understand what I am going to say? It is my task to persuade you not to turn away because you don’t understand it. You see, my physics students don’t understand it either. This is because I don’t understand it. Nobody does.’

It might seem that Feynman had found a good way to turn off his audience before he had started by telling them that they wouldn’t understand his talk. And surely it’s ridiculous for me to suggest I can teach this stuff to ten-year-olds when the great Feynman said he didn’t understand it? But he went on to explain what he meant. It’s not that his audience wouldn’t be able to understand what took place, what quantum physics described. It’s just that no one knows why it happens the way it does. And because what it does defies common sense, this can cause us problems. In fact quantum theory is arguably easier for ten-year-olds to accept than adults, which is one of the reasons I think that it (and relativity) should be taught in junior school. But that’s the subject of a different book.

As Feynman went on to say: ‘I’m going to describe to you how Nature is – and if you don’t like it, that’s going to get in the way of your understanding it … The theory of quantum electrodynamics [the theory governing the interaction of light and matter] describes Nature as absurd from the point of view of common sense. And it agrees fully with experiment. So I hope you can accept Nature as she is – absurd.’ We need to accept and embrace the viewpoint of an unlikely enthusiast for the subject, the novelist D.H. Lawrence, who commented that he liked quantum theory because he didn’t understand it.

The shock of the new

Part of the reason that quantum physics proved such a shocking, seismic shift is that around the start of the 20th century, scientists were, to be honest, rather smug about their level of understanding – an attitude they had probably never had before, and certainly should never have had since (though you can see it creeping in with some modern scientists). The hubris of the scientific establishment is probably best summed up by the words of a leading physicist of the time, William Thomson, Lord Kelvin. In 1900 he commented, no doubt in rounded, self-satisfied tones: ‘There is nothing new to be discovered in physics. All that remains is more and more precise measurement.’ As a remark that he would come to bitterly regret, this is surely up there with the famous clanger of Thomas J. Watson Snr, who as chairman of IBM made the impressively non-prophetic remark in 1943: ‘I think there is a world market for maybe five computers.’

Within months of Kelvin’s pronouncement, his certainty was being undermined by a German physicist called Max Planck. Planck was trying to iron out a small irritant to Kelvin’s supposed ‘nothing new’ – a technical problem that was given the impressive nickname ‘the ultraviolet catastrophe’. We have all seen how things give off light when they are heated up. For instance, take a piece of iron and put it in a furnace and it will first glow red, then yellow, before getting to white heat that will become tinged with blue. The ‘catastrophe’ that the physics of the day predicted was that the power of the light emitted by a hot body should be proportional to the square of the frequency of that light. This meant that even at room temperature, everything should be glowing blue and blasting out even more ultraviolet light. This was both evidently not happening and impossible.

To fix the problem, Planck cheated. He imagined that light could not be given off in whatever-sized amounts you like, as you would expect if it were a wave. Waves could come in any size or wavelength – they were infinitely variable, rather than being broken into discrete components. (And everyone knew that light was a wave, just as you were taught at school in the Victorian science we still impose on our children.)

Instead, Planck thought, what if the light could come out only in fixed-sized chunks? This sorted out the problem. Limit light to chunks and plug it into the maths and you didn’t get the runaway effect. Planck was very clear – he didn’t think light actually did come in chunks (or ‘quanta’ as he called them, the plural of the Latin quantum which roughly means ‘how much’), but it was a useful trick to make the maths work. Why this was the case, he had no idea, as he knew that light was a wave because there were plenty of experiments to prove it.
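For readers who would like to see the runaway effect in numbers, here is a minimal sketch. It assumes the standard textbook forms of the two formulas, neither of which is quoted in this chapter: the classical (Rayleigh–Jeans) prediction that grows with the square of the frequency, and Planck's formula that results from limiting light to chunks. The function names and the choice of 300 K for room temperature are my own, purely for illustration.

```python
import math

# Physical constants in SI units (exact defined values).
H = 6.62607015e-34   # Planck constant, J s
K_B = 1.380649e-23   # Boltzmann constant, J/K
C = 2.99792458e8     # speed of light, m/s


def rayleigh_jeans(freq_hz, temp_k):
    """Classical prediction: brightness rises with the square of frequency."""
    return 2.0 * freq_hz**2 * K_B * temp_k / C**2


def planck(freq_hz, temp_k):
    """Planck's formula: the chunk energy h*f tames the high frequencies."""
    x = H * freq_hz / (K_B * temp_k)  # chunk energy compared with thermal energy
    return (2.0 * H * freq_hz**3 / C**2) / math.expm1(x)


if __name__ == "__main__":
    room_temperature_k = 300.0  # assumed value for "room temperature"
    for freq_hz in (1e11, 1e12, 1e13, 1e14, 1e15):
        classical = rayleigh_jeans(freq_hz, room_temperature_k)
        quantum = planck(freq_hz, room_temperature_k)
        print(f"{freq_hz:.0e} Hz  classical={classical:.3e}  planck={quantum:.3e}")
```

At low frequencies the two columns agree closely. As the frequency climbs towards the ultraviolet, the classical figure keeps growing while Planck's collapses towards zero, which is why a room-temperature object does not glow blue.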

Mr Young’s experiment

Perhaps the best-known example of these experiments, and one we will come back to a number of times, is Young’s slits, the masterpiece of polymath Thomas Young (1773–1829). This well-off medical doctor and amateur scientist was obviously remarkable from an early age. He taught himself to read when he was two, something his parents discovered only when he asked for help with some of the longer words in the Bible. By the time he was thirteen he was a fluent reader in Greek, Latin, Hebrew, Italian and French. This was a natural precursor to one of Young’s impressive claims to fame – he made the first partial translation of Egyptian hieroglyphs. But his language abilities don’t reflect the breadth of his interests, from discovering the concept of elasticity in engineering to producing mortality tables to help insurance companies set their premiums.

His big breakthrough in understanding light came while studying the effect of temperature on the formation of dewdrops – there really was nothing in nature that didn’t interest this man. While watching the effect of candlelight on a fine mist of water droplets he discovered that they produced a series of coloured rings when the light then fell on a white screen. Young suspected that this effect was caused by interactions between waves of light, proving the wave nature that Christiaan Huygens had proclaimed back in Newton’s time. By 1801, Young was ready to prove this with an experiment that has been the definitive demonstration that light is a wave ever since.

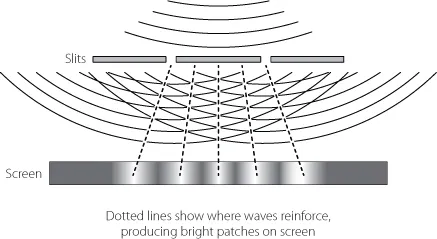

Young produced a sharp beam of light using a slit in a piece of card and shone this light onto two parallel slits, close together in another piece of card, finally letting the result fall on a screen behind. You might expect that each slit would project a bright line on the screen, but what Young observed was a series of alternating dark and light bands. To Young this was clear evidence that light was a wave. The waves from the two slits were interfering with each other. When the side-to-side ripples in both waves were heading in the same direction – say both up – at the point they met the screen, the result was a bright band. If the wave ripples were heading in opposite directions, one up and one down, they would cancel each other out and produce a dark band. A similar effect can be spotted if you drop two stones into still water near to each other and watch how the ripples interact – some waves reinforce, some cancel out. It is natural wave behaviour.

Fig. 1. Young’s slits.
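The pattern of light and dark bands can be sketched with a few lines of arithmetic. What follows is an idealised model rather than a reconstruction of Young's own set-up: it assumes light of a single colour, two very narrow slits and a distant screen, and it ignores the gradual fading of the bands towards the edges. The wavelength, slit separation and screen distance are illustrative values that I have chosen, not figures from the 1801 experiment.

```python
import math


def relative_intensity(y_m, wavelength_m, slit_separation_m, screen_distance_m):
    """Brightness at height y on the screen, from 0 (dark) to 1 (brightest).

    Uses the small-angle approximation: the extra distance travelled by the
    wave from one slit is roughly slit_separation * y / screen_distance.
    """
    path_difference = slit_separation_m * y_m / screen_distance_m
    phase_difference = 2.0 * math.pi * path_difference / wavelength_m
    # Two equal waves: in step gives 1, exactly out of step gives 0.
    return math.cos(phase_difference / 2.0) ** 2


def fringe_spacing(wavelength_m, slit_separation_m, screen_distance_m):
    """Distance between neighbouring bright bands on the screen."""
    return wavelength_m * screen_distance_m / slit_separation_m


if __name__ == "__main__":
    wavelength = 600e-9   # orange light, assumed
    separation = 0.2e-3   # slits 0.2 mm apart, assumed
    distance = 1.0        # screen 1 m away, assumed
    spacing = fringe_spacing(wavelength, separation, distance)
    print(f"bright bands every {spacing * 1000:.1f} mm")
    for step in range(5):
        y = step * spacing / 4.0
        level = relative_intensity(y, wavelength, separation, distance)
        print(f"y = {y * 1000:.2f} mm  brightness = {level:.2f}")
```

Halfway between two bright bands the waves arrive exactly out of step and the brightness falls to zero, which is the dark band Young saw.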

It was this kind of demonstration that persuaded Planck that his quanta were nothing more than a workaround to make the calculations match what was observed, because light simply had to be a wave – but he was to be proved wrong by a man who was less worried about convention than the older Planck, Albert Einstein. Einstein was to show that Planck’s idea was far closer to reality than Planck would ever accept. This discrepancy in viewpoint was glaringly obvious when Planck recommended Einstein for the Prussian Academy of Sciences in 1913. Planck requested the academy to overlook the fact that Einstein sometimes ‘missed the target in his speculations, as for example, in his theory of light quanta …’.

The Einstein touch

That ‘speculation’ was made by Einstein in 1905 when he was a young man of 26 (forget the white-haired icon we all know: this was a dapper young man-about-town). For Einstein, 1905 was a remarkable year in which the budding scientist, who was yet to achieve a doctorate and was technically an amateur, came up with the concept of special relativity,¹ showed how Brownian motion² could be explained, making it clear that atoms really did exist, and devised an explanation for the photoelectric effect (see ‘Electricity from light’ below) that turned Planck’s useful calculating method into a model of reality.

Einstein was never one to worry too much about fitting expectations. As a boy he struggled with the rigid nature of German schooling, getting himself a reputation for being lazy and uncooperative. By the time he was sixteen, when most students had little more on their mind than getting through their exams and getting on with the opposite sex, he decided that he could no longer tolerate being a German citizen. (Not that young Albert was the classic geek in finding it difficult to get on with the girls – quite the reverse.) Hoping to become a Swiss citizen, Einstein applied to the exclusive Federal Institute of Technology, the Eidgenössische Technische Hochschule or ETH, in Zürich. Certain of his own abilities in the sciences, Einstein took the entrance exam – and failed.

His problem was a combination of youth and very tightly focused interests. Einstein had not seen the point of spending much time on subjects outside the sciences, but the ETH examination was designed to pick out all-rounders. However, the principal of the school was impressed by young Albert and recommended that he spend a year in a Swiss secondary school to gain a more appropriate education. The next year, Einstein applied again and got through. The ETH certainly allowed Einstein more flexibility to follow his dreams than the rigid German schools, though his headstrong approach made the head of the physics department, Heinrich Weber, comment to his student: ‘You’re a very clever boy, but you have one big fault. You will never allow yourself to be told anything.’

After graduating, Einstein tried to get a post by writing to famous scientists, asking them to take him on as an assistant. When this unlikely strategy failed, he took a position as a teacher, primarily to be able to gain Swiss citizenship, as he had already renounced his German nationality, so was technically stateless. Soon, though, he would get another job, one that would give him plenty of time to think. Einstein successfully applied for the post of Patent Officer (third class) in the Swiss Patent Office in Bern.

Electricity from light

It was while working there in 1905 that Einstein turned Planck’s useful trick into the real foundation of quantum theory, writing the paper that would win him the Nobel Prize. The subject was the photoelectric effect, the science behind the solar cells we see all over the place these days producing electricity from sunlight. By the early 1900s, scientists and engineers were well aware of this effect, although at the time it was studied only in metals, rather than the semiconductors that have made modern photoelectric cells viable. That the photoelectric effect occurred was no big surprise. It was known that light had an electrical component, so it seemed reasonable that it might be able to give a push to electrons³ in a piece of metal and produce a small current. But there was something odd about the way this happened.

A couple of years earlier, the Hungarian Philipp Lenard had experimented widely with the effect and found that it didn’t matter how bright the light was that was shone on the metal – the electrons freed from the metal by light of a particular colour always had the same energy. If you moved down the spectrum of light, you would eventually reach a colour where no electrons flowed at all, however bright the light was. But this didn’t make any sense if light was a wave. It was as if the sea could only wash something away if the waves came very frequently, while vast, towering waves with a low frequency could not move a single grain of sand.

Einstein realised that Planck’s quanta, his imaginary packets of light, would provide an explanation. If light were made up of a series of particles, rather than a wave, it would produce the effects that were seen. An individual particle of light⁴ could knock out an electron only if it had enough energy to do so, and as far as light was concerned, higher energy corresponded to being further up the spectrum. But the outcome had no connection with the number of photons present – the brightness of the light – as the effect was produced by an interaction between a single photon and an electron.
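Einstein's argument comes down to a single subtraction, which can be sketched as follows. The energy of one photon is Planck's constant multiplied by the frequency of the light, and an electron escapes only if that energy exceeds the amount needed to free it from the metal (usually called the work function, a term not used in this chapter). The function names are my own, and the work function of 2.3 electronvolts is an illustrative assumption rather than a measurement for any particular metal.

```python
# Physical constants in SI units (exact defined values).
H = 6.62607015e-34    # Planck constant, J s
C = 2.99792458e8      # speed of light, m/s
EV = 1.602176634e-19  # one electronvolt in joules


def photon_energy_ev(wavelength_nm):
    """Energy of a single photon of the given colour, in electronvolts."""
    return H * C / (wavelength_nm * 1e-9) / EV


def max_electron_energy_ev(wavelength_nm, work_function_ev):
    """Most energy a freed electron can carry, or None if none escape.

    Brightness is deliberately not a parameter: each electron is knocked
    out by one photon, so more photons mean more electrons, not faster ones.
    """
    surplus = photon_energy_ev(wavelength_nm) - work_function_ev
    return surplus if surplus > 0 else None


if __name__ == "__main__":
    work_function = 2.3  # eV, assumed purely for illustration
    for colour, wavelength in (("violet", 400), ("green", 530), ("red", 700)):
        result = max_electron_energy_ev(wavelength, work_function)
        if result is None:
            print(f"{colour}: no electrons, however bright the light")
        else:
            print(f"{colour}: electrons with up to {result:.2f} eV")
```

With these assumed numbers, violet and green light free electrons while red light frees none, matching the cut-off colour that Lenard observed.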

Einstein had not only turned Planck’s useful mathematical cheat into a description of reality and explained the photoelectric effect, he had set the foundation for the whole of quantum physics, a theory that, ironically, he would spend much of his working life challenging. In less than a decade, Einstein’s concept of the ‘real’ quantum would be picked up by the young Danish physicist Niels Bohr to explain a serious problem with the atom. Because atoms really shouldn’t be stable.

Uncuttable matter

As we have seen, the idea of atoms goes all the way back to the Ancient Greeks. It was picked up by British chemist John Dalton (1766–1844) as an explanation for the nature of elements, but it was only in the early 20th century (encouraged by another of Einstein’s 1905 papers, the one on Brownian motion) that the concept of the atom was taken seriously as a real thing, rather than a metaphorical concept. The original idea of an atom was that it was the ultimate division of matter – that Greek word for uncuttable, atomos – but the British physicist Joseph John Thomson (usually known as J.J.) had discovered in 1897 that atoms could give off a smaller particle he called an electron, which seemed to be the same whatever kind of atom produced it. He deduced that the electron was a component of atoms – that atoms were cuttable after all.

The electron is negatively charged, while atoms have no electrical charge, so there had to be something else in there, something positive to balance it out. Thomson dreamed up what would become known as the ‘plum pudding model’ of the atom. In this, a collection of electrons (the plums in the pudding) are suspended in a sort of jelly of positive charge. Originally Thomson thought that all the mass of the atom came from the electrons – which meant that even the lightest atom, hydrogen, should contain well over a thousand electrons – but later work suggested that there was mass in the positive part of the atom too, and hydrogen, for example, had only the single electron we recognise today.

Bohr’s voyage of discovery

When 25-year-old physicist Niels Bohr won a scholarship to spend a year studying atoms away from his native Denmark he had no doubt where he wanted to go – to work on atoms with the great Thomson. And so in 1911 he came to Cambridge, armed with a copy of Dickens’ The Pickwick Papers and a dictionary in an attempt to improve his limited English. Unfortunately he got off to a bad start by telling Thomson at their first meeting that a calculation in one of the great man’s books was wrong. Rather than collaborating with Thomson as he had imagined, Bohr hardly saw the then star of Cambridge physics, spending most of his time allocated to his least favourite activity, undertaking experiments.

Towards the end of 1911, though, two chance meetings changed Bohr’s future and paved the way for the development of quantum theory. First, on a visit to a family friend in Manchester, and again at a ten-course dinner in Cambridge, Bohr met the imposing New Zealand physicist Ernest Rutherford, then working at Manchester University. Rutherford had recently overthrown the plum pudding model by showing that most of the atom’s mass was concentrated in a tiny, positively charged nucleus at the heart of the atom. Rutherford seemed a much more attractive person to work for than Thomson, and Bohr was soon heading for Manchester.

There Bohr put together his first ideas that would form the basis of the quantum atom. It might seem natural to assume that an atom with a (relat...