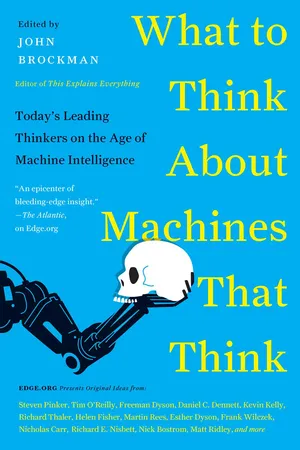

What to Think About Machines That Think

Today's Leading Thinkers on the Age of Machine Intelligence

- 576 pages

- English

- ePUB (mobile friendly)

About This Book

Weighing in from the cutting-edge frontiers of science, today's most forward-thinking minds explore the rise of "machines that think."

Stephen Hawking recently made headlines by noting, "The development of full artificial intelligence could spell the end of the human race." Others, conversely, have trumpeted a new age of "superintelligence" in which smart devices will exponentially extend human capacities. Intelligent technology is no longer just a matter of science-fiction fantasy (2001, Blade Runner, The Terminator, Her, etc.), and many forms of it are already being integrated into our daily lives; it is time to consider its reality seriously. In that spirit, John Brockman, publisher of Edge.org ("the world's smartest website" – The Guardian), asked the world's most influential scientists, philosophers, and artists one of today's most consequential questions: What do you think about machines that think?

Table of contents

- Dedication

- Contents

- Acknowledgments

- Preface: The 2015 Edge Question

- Murray Shanahan: Consciousness in Human-Level AI

- Steven Pinker: Thinking Does Not Imply Subjugating

- Martin Rees: Organic Intelligence Has No Long-Term Future

- Steve Omohundro: A Turning Point in Artificial Intelligence

- Dimitar D. Sasselov: AI Is I

- Frank Tipler: If You Can’t Beat ’em, Join ’em

- Mario Livio: Intelligent Machines on Earth and Beyond

- Antony Garrett Lisi: I, for One, Welcome Our Machine Overlords

- John Markoff: Our Masters, Slaves, or Partners?

- Paul Davies: Designed Intelligence

- Kevin P. Hand: The Superintelligent Loner

- John C. Mather: It’s Going to Be a Wild Ride

- David Christian: Is Anyone in Charge of This Thing?

- Timo Hannay: Witness to the Universe

- Max Tegmark: Let’s Get Prepared!

- Tomaso Poggio: “Turing+” Questions

- Pamela McCorduck: An Epochal Human Event

- Marcelo Gleiser: Welcome to Your Transhuman Self

- Sean Carroll: We Are All Machines That Think

- Nicholas G. Carr: The Control Crisis

- Jon Kleinberg & Sendhil Mullainathan: We Built Them, but We Don’t Understand Them

- Jaan Tallinn: We Need to Do Our Homework

- George Church: What Do You Care What Other Machines Think?

- Arnold Trehub: Machines Cannot Think

- Roy Baumeister: No “I” and No Capacity for Malice

- Keith Devlin: Leveraging Human Intelligence

- Emanuel Derman: A Machine Is a “Matter” Thing

- Freeman Dyson: I Could Be Wrong

- David Gelernter: Why Can’t “Being” or “Happiness” Be Computed?

- Leo M. Chalupa: No Machine Thinks About the Eternal Questions

- Daniel C. Dennett: The Singularity—an Urban Legend?

- W. Tecumseh Fitch: Nano-Intentionality

- Irene Pepperberg: A Beautiful (Visionary) Mind

- Nicholas Humphrey: The Colossus Is a BFG

- Rolf Dobelli: Self-Aware AI? Not in 1,000 Years!

- César Hidalgo: Machines Don’t Think, but Neither Do People

- James J. O’Donnell: Tangled Up in the Question

- Rodney A. Brooks: Mistaking Performance for Competence

- Terrence J. Sejnowski: AI Will Make You Smarter

- Seth Lloyd: Shallow Learning

- Carlo Rovelli: Natural Creatures of a Natural World

- Frank Wilczek: Three Observations on Artificial Intelligence

- John Naughton: When I Say “Bruno Latour,” I Don’t Mean “Banana Till”

- Nick Bostrom: It’s Still Early Days

- Donald D. Hoffman: Evolving AI

- Roger Schank: Machines That Think Are in the Movies

- Juan Enriquez: Head Transplants?

- Esther Dyson: AI/AL

- Tom Griffiths: Brains and Other Thinking Machines

- Mark Pagel: They’ll Do More Good Than Harm

- Robert Provine: Keeping Them on a Leash

- Susan Blackmore: The Next Replicator

- Tim O’Reilly: What If We’re the Microbiome of the Silicon AI?

- Andy Clark: You Are What You Eat

- Moshe Hoffman: AI’s System of Rights and Government

- Brian Knutson: The Robot with a Hidden Agenda

- William Poundstone: Can Submarines Swim?

- Gregory Benford: Fear Not the AI

- Lawrence M. Krauss: What, Me Worry?

- Peter Norvig: Design Machines to Deal with the World’s Complexity

- Jonathan Gottschall: The Rise of Storytelling Machines

- Michael Shermer: Think Protopia, Not Utopia or Dystopia

- Chris DiBona: The Limits of Biological Intelligence

- Joscha Bach: Every Society Gets the AI It Deserves

- Quentin Hardy: The Beasts of AI Island

- Clifford Pickover: We Will Become One

- Ernst Pöppel: An Extraterrestrial Observation on Human Hubris

- Ross Anderson: He Who Pays the AI Calls the Tune

- W. Daniel Hillis: I Think, Therefore AI

- Paul Saffo: What Will the Place of Humans Be?

- Dylan Evans: The Great AI Swindle

- Anthony Aguirre: The Odds on AI

- Eric J. Topol: A New Wisdom of the Body

- Roger Highfield: From Regular-I to AI

- Gordon Kane: We Need More Than Thought

- Scott Atran: Are We Going in the Wrong Direction?

- Stanislas Dehaene: Two Cognitive Functions Machines Still Lack

- Matt Ridley: Among the Machines, Not Within the Machines

- Stephen M. Kosslyn: Another Kind of Diversity

- Luca De Biase: Narratives and Our Civilization

- Margaret Levi: Human Responsibility

- D. A. Wallach: Amplifiers/Implementers of Human Choices

- Rory Sutherland: Make the Thing Impossible to Hate

- Bruce Sterling: Actress Machines

- Kevin Kelly: Call Them Artificial Aliens

- Martin Seligman: Do Machines Do?

- Timothy Taylor: Denkraumverlust

- George Dyson: Analog, the Revolution That Dares Not Speak Its Name

- S. Abbas Raza: The Values of Artificial Intelligence

- Bruce Parker: Artificial Selection and Our Grandchildren

- Neil Gershenfeld: Really Good Hacks

- Daniel L. Everett: The Airbus and the Eagle

- Douglas Coupland: Humanness

- Josh Bongard: Manipulators and Manipulanda

- Ziyad Marar: Are We Thinking More Like Machines?

- Brian Eno: Just a New Fractal Detail in the Big Picture

- Marti Hearst: eGaia, a Distributed Technical-Social Mental System

- Chris Anderson: The Hive Mind

- Alex (Sandy) Pentland: The Global Artificial Intelligence Is Here

- Randolph Nesse: Will Computers Become Like Thinking, Talking Dogs?

- Richard E. Nisbett: Thinking Machines and Ennui

- Samuel Arbesman: Naches from Our Machines

- Gerald Smallberg: No Shared Theory of Mind

- Eldar Shafir: Blind to the Core of Human Experience

- Christopher Chabris: An Intuitive Theory of Machine

- Ursula Martin: Thinking Saltmarshes

- Kurt Gray: Killer Thinking Machines Keep Our Conscience Clean

- Bruce Schneier: When Thinking Machines Break the Law

- Rebecca MacKinnon: Electric Brains

- Gerd Gigerenzer: Robodoctors

- Alison Gopnik: Can Machines Ever Be As Smart As Three-Year-Olds?

- Kevin Slavin: Tic-Tac-Toe Chicken

- Alun Anderson: AI Will Make Us Smart and Robots Afraid

- Mary Catherine Bateson: When Thinking Machines Are Not a Boon

- Steve Fuller: Justice for Machines in an Organicist World

- Tania Lombrozo: Don’t Be a Chauvinist About Thinking

- Virginia Heffernan: This Sounds Like Heaven

- Barbara Strauch: Machines That Work Until They Don’t

- Sheizaf Rafaeli: The Moving Goalposts

- Edward Slingerland: Directionless Intelligence

- Nicholas A. Christakis: Human Culture As the First AI

- Joichi Ito: Beyond the Uncanny Valley

- Douglas Rushkoff: The Figure or the Ground?

- Helen Fisher: Fast, Accurate, and Stupid

- Stuart Russell: Will They Make Us Better People?

- Eliezer S. Yudkowsky: The Value-Loading Problem

- Kate Jeffery: In Our Image

- Maria Popova: The Umwelt of the Unanswerable

- Jessica L. Tracy & Kristin Laurin: Will They Think About Themselves?

- June Gruber & Raul Saucedo: Organic Versus Artifactual Thinking

- Paul Dolan: Context Surely Matters

- Thomas G. Dietterich: How to Prevent an Intelligence Explosion

- Matthew D. Lieberman: Thinking from the Inside or the Outside?

- Michael Vassar: Soft Authoritarianism

- Gregory Paul: What Will AIs Think About Us?

- Andrian Kreye: A John Henry Moment

- N. J. Enfield: Machines Aren’t into Relationships

- Nina Jablonski: The Next Phase of Human Evolution

- Gary Klein: Domination Versus Domestication

- Gary Marcus: Machines Won’t Be Thinking Anytime Soon

- Sam Harris: Can We Avoid a Digital Apocalypse?

- Molly Crockett: Could Thinking Machines Bridge the Empathy Gap?

- Abigail Marsh: Caring Machines

- Alexander Wissner-Gross: Engines of Freedom

- Sarah Demers: Any Questions?

- Bart Kosko: Thinking Machines = Old Algorithms on Faster Computers

- Julia Clarke: The Disadvantages of Metaphor

- Michael McCullough: A Universal Basis for Human Dignity

- Haim Harari: Thinking About People Who Think Like Machines

- Hans Halvorson: Metathinking

- Christine Finn: The Value of Anticipation

- Dirk Helbing: An Ecosystem of Ideas

- John Tooby: The Iron Law of Intelligence

- Maximilian Schich: Thought-Stealing Machines

- Satyajit Das: Unintended Consequences

- Robert Sapolsky: It Depends

- Athena Vouloumanos: Will Machines Do Our Thinking for Us?

- Brian Christian: Sorry to Bother You

- Benjamin K. Bergen: Moral Machines

- Laurence C. Smith: After the Plug Is Pulled

- Giulio Boccaletti: Monitoring and Managing the Planet

- Ian Bogost: Panexperientialism

- Aubrey de Grey: When Is a Minion Not a Minion?

- Michael I. Norton: Not Buggy Enough

- Thomas A. Bass: More Funk, More Soul, More Poetry and Art

- Hans Ulrich Obrist: The Future Is Blocked to Us

- Koo Jeong-A: An Immaterial Thinkable Machine

- Richard Foreman: Baffled and Obsessed

- Richard H. Thaler: Who’s Afraid of Artificial Intelligence?

- Scott Draves: I See a Symbiosis Developing

- Matthew Ritchie: Reimagining the Self in a Distributed World

- Raphael Bousso: It’s Easy to Predict the Future

- James Croak: Fear of a God, Redux

- Andrés Roemer: Tulips on My Robot’s Tomb

- Lee Smolin: Toward a Naturalistic Account of Mind

- Stuart A. Kauffman: Machines That Think? Nuts!

- Melanie Swan: The Future Possibility-Space of Intelligence

- Tor Nørretranders: Love

- Kai Krause: An Uncanny Three-Ring Test for Machina sapiens

- Georg Diez: Free from Us

- Eduardo Salcedo-Albarán: Flawless AI Seems Like Science Fiction

- Maria Spiropulu: Emergent Hybrid Human/Machine Chimeras

- Thomas Metzinger: What If They Need to Suffer?

- Beatrice Golomb: Will We Recognize It When It Happens?

- Noga Arikha: Metarepresentation

- Demis Hassabis, Shane Legg & Mustafa Suleyman: Envoi: A Short Distance Ahead—and Plenty to Be Done

- Notes

- About the Author

- Also by John Brockman

- Credits

- Back Ads

- Copyright

- About the Publisher