![]()

Chapter 1

Introduction

1.1 What is Quantum Computing?

In quantum computers we exploit quantum effects to compute in ways that are faster or more efficient than, or even impossible, on conventional computers. Quantum computers use a specific physical implementation to gain a computational advantage over conventional computers. Properties called superposition and entanglement may, in some cases, allow an exponential amount of parallelism. Also, special purpose machines like quantum cryptographic devices use entanglement and other peculiarities like quantum uncertainty.

Quantum computing combines quantum mechanics, information theory, and aspects of computer science [31]. The field is a relatively new one that promises secure data transfer, dramatic computing speed increases, and may take component miniaturisation to its fundamental limit.

This text describes some of the introductory aspects of quantum computing. We'll examine some basic quantum mechanics, elementary quantum computing topics like qubits, quantum algorithms, physical realisations of those algorithms, basic concepts from computer science (like complexity theory, Turing machines, and linear algebra), information theory, and more.

1.2 Why Another Quantum Computing Tutorial?

Most of the books or papers on quantum computing require (or assume) prior knowledge of certain areas like linear algebra or physics so the majority of the current literature is hard to understand for the average computer enthusiast or interested layman. This text attempts to teach basic quantum computing from the ground up in an easily readable way. It contains a lot of the background in mathematics, physics, and computer science that you will need, although it is assumed that you know a little about computer programming.

At certain places in this document, topics that could make interesting research topics have been identified. These topics are presented in the following format:

Question The topic is presented in bold-italics.

1.2.1 Quantum Computation and Quantum Information

By far the most complete book available for quantum computing is Quantum Computation and Quantum Information by Michael A. Nielsen and Isaac L. Chuang, which we'll abbreviate to QCQI. The main references for this work are QCQI and a great set of lecture notes, also written by Nielsen. Nielsen's lecture notes are currently available at http://www.qinfo.org/people/nielsen/qicss.html. An honourable mention goes out to Vadim V. Bulitko who has managed to condense a large part of QCQI into fourteen pages!

QCQI may be a little hard to get into at first, particularly for those without a strong background in mathematics. So this tutorial is, in part, a collection of worked examples from various web sites, sets of lecture notes, journal entries, papers, and books which may aid in understanding of some of the concepts in QCQI.

![]()

Chapter 2

Computer Science

2.1 Introduction

The special properties of quantum computers force us to rethink some of the most fundamental aspects of computer science. In this chapter we'll see how quantum effects can give us a new kind of Turing machine, new kinds of circuits, and new kinds of complexity classes. This is important as it was thought that these things were not affected by what a computer is built from, but it turns out that they are.

A distinction has been made between computer science and information theory. Although information theory can be seen as a part of computer science it is treated separately in this text with its own dedicated chapter. This is because the quantum aspects of information theory require some of the concepts introduced in the chapters that follow this one.

There's also a little mathematics and notation used in this chapter which is presented in the first few sections of chapter 3 and some basic C and Javascript code for which you may need an external reference.

2.2 History

The origins of computer science can be traced back to the invention of algorithms like Euclid's Algorithm (c. 300 BC), which is an algorithm for finding the greatest common divisor of two numbers. There are also much older sources like early Babylonian cuneiform tablets (c. 2000–1700 BC) that contain clear evidence of algorithmic processes [22]. Up until the 19th century it's difficult to separate computer science from other sciences like mathematics and engineering so we'll say here that computer science began as a separate science in the 19th century.

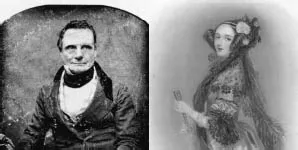

Fig. 2.1 Charles Babbage and Ada Byron.

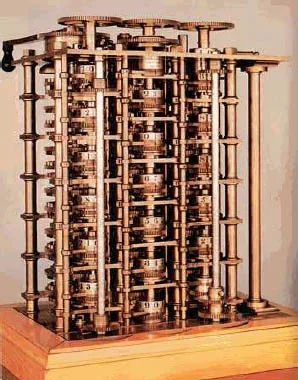

An important precursor to early computing machines was the punched card. The Jacquard loom, which was invented by Joseph Marie Jacquard (1752–1834) in 1801 [35] made use of complex patterns stored on punched cards to control a sequence of operations. In the mid 19th century Charles Babbage, 1791–1871 (figure 2.1) designed and partially built several programmable computing machines (see figure 2.4 for the difference engine built in 1822). These machines had many of the features of modern computers. One of these machines called the analytical engine had removable programs on punch cards based on those used in the Jacquard loom. Bab-bage's friend, Ada Augusta King, Countess of Lovelace, 1815–1852 (figure 2.1), the daughter of Lord Byron, is considered by some as the first programmer for her writings on the Analytical engine. Sadly Babbage's work was largely forgotten until the 1930s and the advent of modern computer science. Modern computer science can be said to have started in 1936 when logician Alan Turing, 1912–1954 (figure 2.2) wrote a paper which contained the notion of a universal computer.

Fig. 2.2 Alan Turing and Alonzo Church.

The first electronic computers were developed in the 1940s and led Jon Von Neumann, 1903–1957 (figure 2.3) to develop a generic architecture on which many modern computers are based. Von Neumann architecture specifies an Arithmetic Logic Unit (ALU), control unit, memory, input/output (IO), a bus, and a computing process. The architecture originated in 1945 in the first draft of a report on EDVAC [10].

Fig. 2.3 Jon Von Neumann.

Computers increased in power and versatility rapidly over the next sixty years, partly due to the development of the transistor in 1947, integrated circuits in 1959, and increasingly intuitive user interfaces. Gordon Moore proposed Moore's law in 1965, the current version of which states that processor complexity will double every eighteen months with respect to cost (in reality it's more like two years). This law still holds but is starting to falter, as components are getting smaller. Soon they will be so small, only being made up of a few atoms [4], that quantum effects will become unavoidable, possibly ending Moore's law.

There are ways in which we can use quantum effects to our advantage in a classical sense, but by fully utilising those effects we can achieve much more. This approach is the basis for quantum computing.

2.3 Turing Machines

In 1928 David Hilbert, 1862–1943 (figure 2.5) asked if there was a universal algorithmic process to decide whether any mathematical proposition was true. His intuition suggested yes, then, in 1930 he went as far as claiming that there were no unsolvable problems in mathematics. This was promptly refuted by Kurt Gödel, 1908–1976 (figure 2.5) in 1931 by way of his incompleteness theorem which can be roughly summed up as follows:

Fig. 2.4 Babbage's difference engine.

You might be able to prove every conceivable statement about numbers within a system by going outside the system in order to come up with new rules and axioms, but by doing so you'll only create a larger system with its own unprovable statements [24].

Then, in 1936 Alan Turing and Alonzo Church, 1903–1995 (figure 2.2) independently came up with models of computation, aimed at resolving whether or not mathematics contained problems that were uncomputable. These were problems for which there were no algorithmic solut...