Hands-On Neural Networks with TensorFlow 2.0

Understand TensorFlow, from static graph to eager execution, and design neural networks

Paolo Galeone

- 358 pages

- English

- ePUB (available in the app)

- Available on iOS and Android

About the book

A comprehensive guide to developing neural network-based solutions using TensorFlow 2.0

Key Features

- Understand the basics of machine learning and discover the power of neural networks and deep learning

- Explore the structure of the TensorFlow framework and understand how to transition to TF 2.0

- Solve any deep learning problem by developing neural network-based solutions using TF 2.0

Book Description

TensorFlow, the most popular and widely used machine learning framework, has made it possible for almost anyone to develop machine learning solutions with ease. With TensorFlow (TF) 2.0, you'll explore a revamped framework structure, offering a wide variety of new features aimed at improving productivity and ease of use for developers.

This book covers machine learning with a focus on developing neural network-based solutions. You'll start by getting familiar with the concepts and techniques required to build solutions to deep learning problems. As you advance, you'll learn how to create classifiers, build object detection and semantic segmentation networks, train generative models, and speed up the development process using TF 2.0 tools such as TensorFlow Datasets and TensorFlow Hub.

By the end of this TensorFlow book, you'll be ready to solve any machine learning problem by developing solutions using TF 2.0 and putting them into production.

What you will learn

- Grasp machine learning and neural network techniques to solve challenging tasks

- Apply the new features of TF 2.0 to speed up development

- Use TensorFlow Datasets (tfds) and the tf.data API to build high-efficiency data input pipelines

- Perform transfer learning and fine-tuning with TensorFlow Hub

- Define and train networks to solve object detection and semantic segmentation problems

- Train Generative Adversarial Networks (GANs) to generate images and data distributions

- Use the SavedModel file format to put a model, or a generic computational graph, into production

Who this book is for

If you're a developer who wants to get started with machine learning and TensorFlow, or a data scientist interested in developing neural network solutions in TF 2.0, this book is for you. Experienced machine learning engineers who want to master the new features of the TensorFlow framework will also find this book useful.

Basic knowledge of calculus and a strong understanding of Python programming will help you grasp the topics covered in this book.

Section 1: Neural Network Fundamentals

- Chapter 1, What is Machine Learning?

- Chapter 2, Neural Networks and Deep Learning

What is Machine Learning?

- Supervised learning

- Unsupervised learning

- Semi-supervised learning

- The importance of the dataset

The importance of the dataset

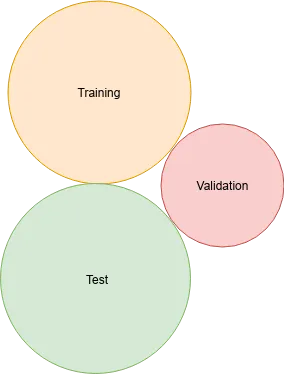

- Training set: The subset to use to train the model.

- Validation set: The subset used to measure the model's performance during training and to perform hyperparameter tuning or searches.

- Test set: The subset that must never be touched during the training or validation phases. It is used only to run the final performance evaluation.
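
The three subsets above can be illustrated with a minimal sketch in plain Python. The function name `split_dataset`, the 80/10/10 proportions, and the fixed seed are assumptions chosen for illustration, not values taken from the book. The sketch only shows the key property: the subsets are disjoint and are cut from a single shuffle.

```python
import random


def split_dataset(samples, val_fraction=0.1, test_fraction=0.1, seed=42):
    """Shuffle once, then cut into disjoint train/validation/test subsets.

    The fractions and the seed are illustrative defaults (assumptions).
    """
    if val_fraction < 0 or test_fraction < 0 or val_fraction + test_fraction >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")

    shuffled = list(samples)
    # A dedicated, seeded generator keeps the split reproducible across runs.
    random.Random(seed).shuffle(shuffled)

    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)

    test = shuffled[:n_test]                # held out until the final evaluation
    val = shuffled[n_test:n_test + n_val]   # used for monitoring and tuning
    train = shuffled[n_test + n_val:]       # used to fit the model
    return train, val, test


# Hypothetical dataset of 100 integer "samples".
train_set, val_set, test_set = split_dataset(range(100))
print(len(train_set), len(val_set), len(test_set))
```

Fixing the seed means the test set contains the same samples on every run, so an experiment cannot accidentally train on data that a previous run held out.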

Table of contents

- Title Page

- Copyright and Credits

- About Packt

- Contributors

- Preface

- Section 1: Neural Network Fundamentals

- What is Machine Learning?

- Neural Networks and Deep Learning

- Section 2: TensorFlow Fundamentals

- TensorFlow Graph Architecture

- TensorFlow 2.0 Architecture

- Efficient Data Input Pipelines and Estimator API

- Section 3: The Application of Neural Networks

- Image Classification Using TensorFlow Hub

- Introduction to Object Detection

- Semantic Segmentation and Custom Dataset Builder

- Generative Adversarial Networks

- Bringing a Model to Production

- Other Books You May Enjoy